Die routinemäßige Verwendung von Patienten‐berichteten Endpunkt‐Maßen zur Verbesserung der Behandlung häufiger, psychischer Störungen bei Erwachsenen

Abstract

Background

Routine outcome monitoring of common mental health disorders (CMHDs), using patient reported outcome measures (PROMs), has been promoted across primary care, psychological therapy and multidisciplinary mental health care settings, but is likely to be costly, given the high prevalence of CMHDs. There has been no systematic review of the use of PROMs in routine outcome monitoring of CMHDs across these three settings.

Objectives

To assess the effects of routine measurement and feedback of the results of PROMs during the management of CMHDs in 1) improving the outcome of CMHDs; and 2) in changing the management of CMHDs.

Search methods

We searched the Cochrane Depression Anxiety and Neurosis group specialised controlled trials register (CCDANCTR‐Studies and CCDANCTR‐References), the Oxford University PROMS Bibliography (2002‐5), Ovid PsycINFO, Web of Science, The Cochrane Library, and International trial registries, initially to 30 May 2014, and updated to 18 May 2015.

Selection criteria

We selected cluster and individually randomised controlled trials (RCTs) including participants with CMHDs aged 18 years and over, in which the results of PROMs were fed back to treating clinicians, or both clinicians and patients. We excluded RCTs in child and adolescent treatment settings, and those in which more than 10% of participants had diagnoses of eating disorders, psychoses, substance use disorders, learning disorders or dementia.

Data collection and analysis

At least two authors independently identified eligible trials, assessed trial quality, and extracted data. We conducted meta‐analysis across studies, pooling outcome measures which were sufficiently similar to each other to justify pooling.

Main results

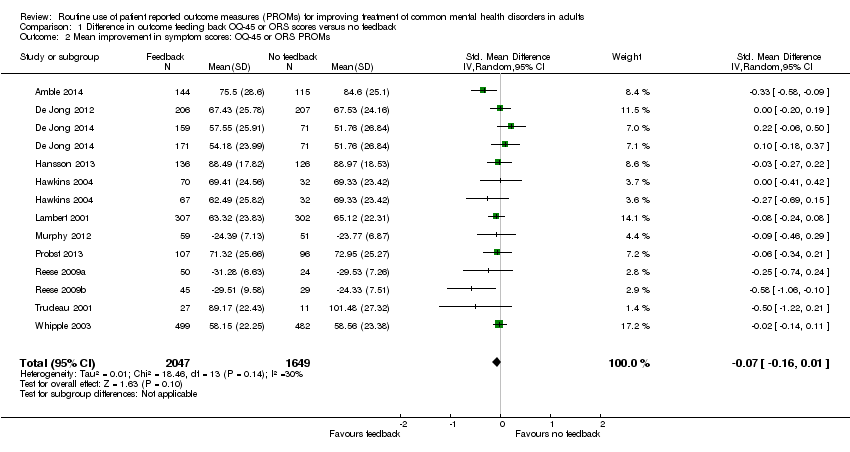

We included 17 studies involving 8787 participants: nine in multidisciplinary mental health care, six in psychological therapy settings, and two in primary care. Pooling of outcome data to provide a summary estimate of effect across studies was possible only for those studies using the compound Outcome Questionnaire (OQ‐45) or Outcome Rating System (ORS) PROMs, which were all conducted in multidisciplinary mental health care or psychological therapy settings, because both primary care studies identified used single symptom outcome measures, which were not directly comparable to the OQ‐45 or ORS.

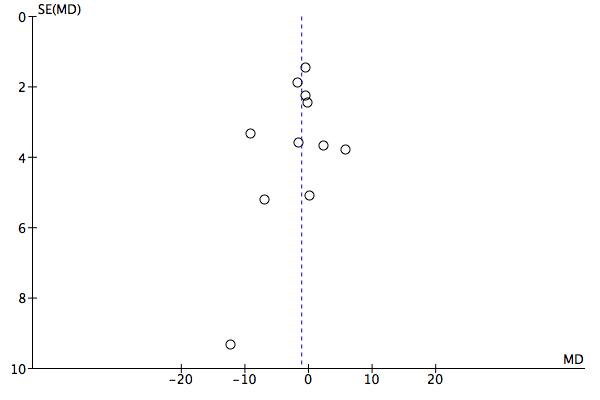

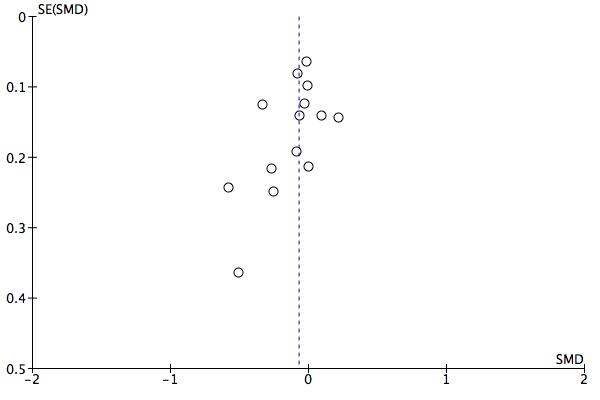

Meta‐analysis of 12 studies including 3696 participants using these PROMs found no evidence of a difference in outcome in terms of symptoms, between feedback and no‐feedback groups (standardised mean difference (SMD) ‐0.07, 95% confidence interval (CI) ‐0.16 to 0.01; P value = 0.10). The evidence for this comparison was graded as low quality however, as all included studies were considered at high risk of bias, in most cases due to inadequate blinding of assessors and significant attrition at follow‐up.

Quality of life was reported in only two studies, social functioning in one, and costs in none. Information on adverse events (thoughts of self‐harm or suicide) was collected in one study, but differences between arms were not reported.

It was not possible to pool data on changes in drug treatment or referrals as only two studies reported these. Meta‐analysis of seven studies including 2608 participants found no evidence of a difference in management of CMHDs between feedback and no‐feedback groups, in terms of the number of treatment sessions received (mean difference (MD) ‐0.02 sessions, 95% CI ‐0.42 to 0.39; P value = 0.93). However, the evidence for this comparison was also graded as low quality.

Authors' conclusions

We found insufficient evidence to support the use of routine outcome monitoring using PROMs in the treatment of CMHDs, in terms of improving patient outcomes or in improving management. The findings are subject to considerable uncertainty however, due to the high risk of bias in the large majority of trials meeting the inclusion criteria, which means further research is very likely to have an important impact on the estimate of effect and is likely to change the estimate. More research of better quality is therefore required, particularly in primary care where most CMHDs are treated.

Future research should address issues of blinding of assessors and attrition, and measure a range of relevant symptom outcomes, as well as possible harmful effects of monitoring, health‐related quality of life, social functioning, and costs. Studies should include people treated with drugs as well as psychological therapies, and should follow them up for longer than six months.

PICOs

Laienverständliche Zusammenfassung

Verwendung von Patienten‐berichteten Endpunkt‐Maßen, zur Beobachtung des Fortschritts bei Erwachsenen mit häufigen psychischen Störungen

Warum ist dieser Review wichtig?

Eine von sechs Personen leidet an einer häufigen psychischen Störungen (common mental health disorders (CMHD)), wozu auch Depressionen und Angststörungen gehören. Patienten‐berichtete Endpunkt‐Maße (PROMs) sind Fragebögen zu Symptomen, Funktionsfähigkeit und Beziehungen der Patienten. Die Verwendung von PROMs, um damit Fortschritte bei Menschen mit CMHDs zu beobachten, könnte sowohl Behandlungsendpunkte als auch die Handhabung von CMHDs verbessern.

Zielgruppe des Reviews

Menschen mit CMHDs; Gesundheitsexperten aus der medizinischen Grundversorgung, der Psychotherapie und psychologischen Gesundheitsdiensten; Gesundheitsbeauftragte.

Welche Fragen soll dieser Review beantworten?

Verbessert die Verwendung von PROMs zur Beobachtung von Fortschritten bei Menschen mit CMHDs gesundheitsrelevante Endpunkte einschließlich Symptomen, Lebensqualität und sozialer Fähigkeiten?

Ändert die Verwendung von PROMs bei Menschen mit CMHD die Art und Weise mit der ihren Problemen begegnet wird, einschließlich medikamentöser Therapie und Überweisungen an Spezialisten?

Welche Studien wurden in diesen Review eingeschlossen?

Studien‐Datenbanken wurden durchsucht, um alle Studien mit hoher Qualität zu finden, in denen PROMs verwendet wurden, um die Behandlung von CMHDs zu beobachten und die bis Mai 2015 publiziert wurden. Es wurden nur randomisierte, kontrollierte Studien an Erwachsenen eingeschlossen, bei denen mehrheitlich eine CMHD diagnostiziert wurde.

17 Studien mit insgesamt 8.787 Teilnehmern wurden in den Review eingeschlossen, 9 aus dem Bereich psychische Gesundheit, 6 aus der Psychotherapie und 2 aus der medizinischen Grundversorgung.

Die Qualität der Studien wurde von ‘niedrig’ bis ‘moderat’ bewertet.

Was besagt die Evidenz aus diesem Review?

Ob die routinemäßige Beobachtung von CMHDs mit PROMs hilfreich ist, konnte in der Analyse, welche die Studienergebnisse entweder bezüglich der Verbesserung des Endpunkts Patientensymptome (12 Studien) oder der Veränderung der Behandlungsdauer für die jeweiligen Beschwerden (7 Studien) zusammenfasste, nicht eindeutig gezeigt werden. Änderungen der medikamentösen Therapie oder bezüglich Überweisungen an Spezialisten für eine weiterführende Therapie konnten nicht analysiert werden, weil nur 2 Studien davon berichteten. Ebenso wurden gesundheitsbezogene Lebensqualität, soziale Funktionsfähigkeit, unerwünschte Ereignisse und Kosten in sehr wenigen Studien berichtet.

Was sollte als Nächstes passieren?

Mehr Forschung von besserer Qualität ist nötig, vor allem in der Grundversorgung, wo die meisten CMHDs behandelt werden. In die Studien sollten Menschen mit eingeschlossen werden, die entweder mit Medikamenten oder mit Psychotherapie behandelt werden und sie sollten länger als sechs Monate lang beobachtet werden. Ebenso wie Symptome und Behandlungsdauer sollten Studien auch mögliche Schäden, Lebensqualität, soziale Fähigkeiten und die Kosten der Beobachtung messen.

Authors' conclusions

Summary of findings

| Feedback of PROM scores for routine monitoring of common mental health disorders | |||||

| Patient or population: People with common mental health disorders1 Intervention: Feedback of PROM scores to clinician, or both clinician and patient Comparator: No feedback of PROM scores | |||||

| Outcomes and length of follow‐up | Illustrative risk | Number of participants | Quality of the evidence | Comments | |

| Assumed risk (range of means in no‐feedback groups) | Relative effect (95% CI) in feedback groups | ||||

| Mean improvement in symptom scores Follow‐up: 1‐6 months2 | Mean scores in no‐feedback groups ranged from 51.8 to 101.5 points for OQ‐45 and from 23.8 to 29.5 points for ORS. Standard deviations ranged from 17.8 to 28.6 points for OQ‐45 and from 7.1 to 9.6 points for ORS | Standard mean difference in symptom scores at end of study in feedback groups was 0.07 standard deviations lower | 3696 | ⊕⊕⊝⊝ | Neither study in the primary care setting used the OQ‐45 or ORS PROMs, and so could not be included in this meta‐analysis |

| Health‐related quality of life Follow‐up: 1‐5 months2 Medical Outcomes Study (SF‐12) physical and mental subscales). Scale from 0‐100 Follow‐up: 0‐1 year | Study results could not be combined in a meta‐analysis as data were not available in an appropriate format Mathias 1994 reported no significant differences between feedback and control groups on all nine domains of the SF‐36 Scheidt 2012 reported no significant differences between feedback and no‐feedback groups in physical or mental sub‐scale scores | 583 587 (1 study) | ⊕⊕⊕⊝ moderate7 | ||

| Adverse events Follow‐up: 6 months | Chang 2012 reported no immediate suicide risk across both feedback and no‐feedback groups combined. Number per group not given | 642 | ⊕⊕⊕⊝ moderate7 | ||

| Social functioning Follow‐up: 0‐1 year2 | Data for the social functioning subscale of the OQ‐45 were considered separately in Hansson 2013 and no difference was found | 262 (1 study) | ⊕⊕⊝⊝ low9 | ||

| Costs | Not estimable | 0 (0 studies) | No study assessed the impact of the intervention on direct or indirect costs | ||

| Changes in the management of CMHDs Changes in drug therapy and referrals for specialist care Follow‐up: 1‐6 months2 | Study results could not be combined in a meta‐analysis as data were not available in an appropriate format Chang 2012 and Mathias 1994 both reported no significant differences in changes in drug therapy between study arms Mathias 1994 reported mental health referrals were significantly more likely in the feedback group (OR 1.73, 95% CI 1.11 to 2.70) | 1215 | ⊕⊕⊕⊝ moderate7 | ||

| Changes in the management of CMHDs Follow‐up: 1‐6 months2 | Mean in no‐feedback groups ranged from 3.7 to 33.5 treatment sessions | Mean difference in number of treatment sessions in feedback groups was 0.02 lower | 2608 | ⊕⊕⊝⊝ | Post‐hoc analysis. Changes in medication and referrals for additional therapy were not assessed by any of these studies |

| CI: Confidence interval | |||||

| GRADE Working Group grades of evidence | |||||

| 1Studies were included if the majority of people diagnosed had CMHDs and no more than 10% had diagnoses of psychotic disorders, learning difficulties, dementia, substance misuse, or eating disorders 2Duration of therapy was variable in all studies and determined by the clinician or the patient, or both 6Downgraded two levels due to risk of bias (all included studies were judged at high risk of bias in at least two domains, in particular blinding of participants and outcome assessment, and attrition), and indirectness (although symptom scores were compared between feedback and non‐feedback groups, wider social functioning and quality‐of‐life measurements were not assessed in nearly all studies) 7Downgraded one level due to risk of bias (judged at high risk of bias in at least two domains, in particular blinding of participants and outcome assessment, and attrition) 8Number of PHQ‐9 questionnaires which contained reports of self‐harming thoughts 9Downgraded two levels due to risk of bias and imprecision, as total participant numbers were less than 400 10Downgraded two levels due to risk of bias and for imprecision: estimate of effect includes no effect and incurs very wide confidence intervals | |||||

Background

Description of the condition

Common mental health disorders (CMHDs) are prevalent, often very disabling and very costly. They include depression (including major depression, dysthymia and minor or mild depression); mixed anxiety and depression; and specific anxiety disorders, namely generalised anxiety disorder (GAD), phobias, obsessive‐compulsive disorder (OCD), panic disorder and post‐traumatic stress disorder (PTSD) (McManus 2009). Katon and Schulberg estimated in 1992 that depression fulfilling the criteria for major depression in the American Psychiatric Association Diagnostic and Statistical Manual, 4th edition (DSM‐IV) (APA 2000) occurred in 2% to 4% of people in the community, 5% to 10% of primary care patients, and 10% to 14% of medical inpatients; but in each setting there were two to three times as many people with depressive symptoms that were short of the major depression criteria (Katon 1992). Prevalence rates of major depression of 13.9% in women and 8.5% in men, and of anxiety disorders of 10% and 5% respectively, have been found in family practice attendees across Europe (King 2008). The estimated one‐week prevalence of CMHDs among adults in England in 2007, according to the criteria of the World Health Organization's International Classification of Diseases (ICD‐10) (WHO 1992) was found to be 17.6%, including mixed anxiety and depression in 9.7%; GAD in 4.7%; depressive episode in 2.6%; phobia in 2.6%; OCD in 1.3%; and panic disorder in 1.2% (McManus 2009). In the US National Comorbidity Survey, lifetime prevalence estimates were 16.6% for DSM‐IV major depression; 6.8% for PTSD; 5.7% for GAD; 4.7% for panic disorder; 2.5% for dysthymia; 1.6% for OCD; and 1.4% for agoraphobia (Kessler 2005).

Depression is often chronic and relapsing, resulting in high levels of disability and poor quality of life (Wells 1989), generally high levels of health service use and associated economic costs (Simon 1997), and death from suicide in between 2% and 8% of cases (Bostwick 2000). Major depressive disorder appears to be increasing in prevalence (Compton 2006) and in the Global Burden of Disease Study 2010 (Murray 2010) has moved up to 11th from 15th in the ranking of disorders according to burden in terms of disability adjusted life years (a 37% increase), becoming the second leading cause of years lived with disability, due to population growth and ageing (Ferrari 2013).

The King’s Fund estimated that in the UK 1.45 million people would have depression by 2026, and the total cost to the nation would exceed GBP 12 billion per year, including prescriptions, inpatient and outpatient care, supported accommodation, social services and lost employment (McCrone 2008). The total medical and productivity costs per person with any anxiety disorder were estimated to be around USD 6500 in the USA in 1999 (Marciniak 2004), and across Europe the annual costs of anxiety disorders, including health service costs, welfare benefits and lost productivity, were estimated to exceed USD 40 billion in 2004 (Andlin‐Sobocki 2005).

Depression is usually treated in primary care with selective serotonin reuptake inhibitor (SSRI) antidepressant drugs (in around 80% of cases), psychological treatments (in around 20%), or both (Kendrick 2009); and in one‐third to one‐half of people with major depression, the symptoms persist over a six to 12‐month period (Gilchrist 2007; Katon 1992). Evidence‐based guidelines recommend psychological treatments such as cognitive‐behaviour therapy (CBT) as first‐line treatment for anxiety disorders (NICE 2011a) but SSRIs are also frequently prescribed for their treatment, often because psychological treatments are not available. It is recommended that people prescribed antidepressants are seen for regular follow‐up during treatment. For example, the UK National Institute for Health and Care Excellence (NICE) 2009 guideline on the management of depression in adults recommended that people started on antidepressants who were not considered to be at increased risk of suicide should normally be seen after two weeks, then at intervals of two to four weeks in the first three months, and then at longer intervals if their response to treatment was good (NICE 2009). At each visit clinicians were recommended to evaluate response (symptoms and functioning), adherence to treatment, drug side‐effects and suicide risk (NICE 2009). This evaluation is usually based on clinical judgement alone, but in recent years clinicians have been advised to consider using patient reported outcome measures (PROMs) to augment their clinical judgement. NICE guidance states all staff carrying out the assessment of common mental health disorders should be competent in the use of formal assessment measures and routine outcome measures (NICE 2011a).

Description of the intervention

PROMs assess patients’ experiences of their symptoms, their functional status and their health‐related quality of life. So they can help to determine the outcome of care in terms of these aspects from the patient’s perspective as an expert in the lived experience of their own health. PROMs are different to measures of patients’ experience of, or satisfaction with, the care they receive (Black 2013). PROMs are often self‐report measures that should therefore be free of observer rating bias, but they can also be interview‐based measures that involve the interviewer in interpreting the patients' responses to questions.

The treatment of CMHDs has been augmented in a number of studies by administering PROMs measuring symptoms of depression or anxiety, social functioning or health‐related quality of life, and feeding the results back to the treating clinician or both the treating clinician and the patient. Feedback of the results is the essential element. The intervention will usually include education of the clinician, or both the clinician and patient, about the measures used and their interpretation. It may or may not also include specific instructions on action to take in light of the results, which may be in the form of an algorithm.

How the intervention might work

Carlier 2012 identifies two main theories concerning the links between the use of PROMs, the process of care and outcomes for patients, Feedback Intervention Theory (FIT) and Therapeutic Assessment (TA). FIT suggests that feedback of the results of PROMs to healthcare professionals influences them to adjust treatment or refer for alternative interventions, improving care when measured against best practice guidelines; while TA focuses on the potential therapeutic effects of feeding back the test results to patients.

Greenhalgh 2005 pointed out that feedback to the clinician may initiate specific changes in management, including ordering further tests, referring to other professionals, changing treatments, and giving advice and education to the patient on better control or management of the problem. Feeding the results back to the patient as well as to the clinician can potentially further improve the process of care, as patients often like to be more involved in their own care, which may be beneficial in itself. This may promote better communication and a greater understanding of the patient's personal circumstances, enabling joint decision‐making between clinician and patient, increasing concordance and patient adherence to treatment through agreeing shared goals, and increasing patient satisfaction, all of which in turn can potentially improve the outcome for the patient.

Observational studies suggest that general practitioner (GP) treatment decisions (to prescribe antidepressants, to subsequently change prescriptions, or refer patients for specialist treatment) might be influenced by the results of patient‐completed depression symptom questionnaires at diagnosis (Kendrick 2009) and follow‐up (Moore 2012), in line with the predictions of FIT. A trial of feeding back depression symptom questionnaire scores to primary care physicians and patients in the USA led to increased rates of response to treatment and remission among patients in the intervention arm (Yeung 2012) although this was despite an apparent lack of significant changes in the physicians' management of the patients' depression (Chang 2012). The authors suggested that frequent symptom measurement might have increased patients' symptom awareness and their ability to report relevant symptoms to their physicians, or made them feel more supported, contributing to a lower medication discontinuation rate in the intervention group. Qualitative research suggests that patients with depression do value the use of symptom questionnaires to assess their condition (Dowrick 2009) and the effectiveness of their treatment (Malpass 2010). It might be that if patients feel that they have been assessed more thoroughly and become more involved in the care of their disorder through the completion of PROMs, together with feedback of the significance of the results, this can help them to improve more quickly even in the absence of significant changes in management, in line with the predictions of TA.

Why it is important to do this review

The use of PROMs has been promoted in recent years as a way for patients to become more involved in their own care and to help health professionals make better decisions about their treatments (Black 2013; Black 2015; Fitzpatrick 2009).

In particular, the use of PROMs in depression has been promoted in important policy pronouncements. The US Federal Health Resources and Services Administration (HRSA) Collaborative on Depression included quality standards for the proportion of patients assessed using the self‐complete Patient Health Questionnaire (PHQ‐9) depression symptom measure (Spitzer 1999) at diagnosis and follow‐up (HRSA 2005). The NICE 2009 depression guideline recommended that clinicians should consider using a validated measure (for example for symptoms, functions and disability) to inform and evaluate treatment (NICE 2009). The subsequent NICE quality standard on assessment of depression recommended that practitioners delivering interventions for people with depression should record the results of validated health outcome measures at each treatment contact and use the findings to adjust their delivery of interventions (NICE 2011b). In 2009 a performance indicator was added to the UK National Health Service (NHS) GP pay for performance scheme (the Quality and Outcomes Framework or QOF), financially incentivising the follow‐up assessment of depression with symptom questionnaires five to 12 weeks after diagnosis (BMA & NHS Employers 2009). The UK NHS Increasing Access to Psychological Therapies (IAPT) programme, extending the provision of psychological treatments for CMHDs nationwide, adopted an information standard with an instruction to record PROMs at every visit, including the PHQ‐9 for depression, the self‐complete Generalised Anxiety Disorder questionnaire (GAD‐7) for anxiety (Spitzer 2006), and the Work and Social Adjustment Scale (WSAS, Mundt 2002) for social functioning (IAPT 2011).

The potential for PROMs to improve the care and self‐care of CMHDs cannot be assumed however. The administration of symptom, social functioning, or quality‐of‐life questionnaires to each and every patient with a CMHD adds up to a significant investment of resources in terms of professionals' time given the high numbers of patients with CMHDs, especially in primary care. Following the introduction of the QOF performance indicator financially incentivising the follow‐up assessment of depression with symptom questionnaires, GPs in the UK reported completing more than 1.1 million follow‐up assessments between April 2009 and March 2013 (74% of 1.5 million eligible cases identified in those five years) (QOF Database 2013). The cost to the NHS of those assessments added up to more than GBP 25 million per year in terms of GP time and the incentive payments. Therefore, even such relatively simple quality improvement strategies should be supported by evidence of clinical benefit and cost‐effectiveness.

There have been a number of previous systematic reviews related to this question including studies in different sectors of health care: one of studies in non‐psychiatric settings (Gilbody 2002); one of studies in clinical psychology practice (Lambert 2003), updated in 2010 (Shimokawa 2010); two combining studies in multidisciplinary mental health care (which we previously referred to as 'specialist psychiatric practice', see section on Differences between protocol and review) and clinical psychology practice (Davidson 2014; Knaup 2009); and one limited to studies in primary care (Shaw 2013). The review by Gilbody and colleagues failed to show an impact of patient‐centred outcome instruments assessing patient needs or measuring quality of life in non‐psychiatric settings (Gilbody 2002). However, Knaup and colleagues' systematic review of studies in specialist psychological and multidisciplinary mental health care settings, which included the studies previously reviewed by Lambert and colleagues (Lambert 2003), was more positive, demonstrating benefits of routine outcome measurement for a range of mental health problems (Knaup 2009). Outcomes were found to be improved with an effect size of between 0.1 and 0.3 standard deviations, being improved more when patients were involved in rating their own problems and received feedback on their progress in addition to feedback to the practitioner (Knaup 2009). However, this review included studies of people with more severe mental illnesses as well as CMHDs. Conversely, the 2013 review (Shaw 2013) had a narrow focus as it was limited to studies of the assessment and monitoring of depression in primary care using questionnaires recommended in the NHS GP contract QOF, namely the PHQ‐9, Hospital Anxiety and Depression Scale (HADS) (Zigmond 1983) and Beck Depression Inventory (BDI) (Beck 1961) or BDI‐II (Beck 1996). Other systematic reviews and meta‐analyses have included studies of the use of PROMs as screening or diagnostic tools together with studies of their use as follow‐up monitoring measures (Carlier 2012; Poston 2010) or have included studies of the use of PROMs in the management of physical disorders together with studies in mental health care (Boyce 2013; Marshall 2006; Valdera 2008). One recent systematic review included only studies which evaluated feeding back the results of PROMs in terms of changes in the particular PROM score rather than other relevant outcome measures (Boyce 2013).

There has been no systematic review of the use of PROMs in the routine outcome monitoring of CMHDs in adults across primary care, psychological therapy, and multidisciplinary mental health care settings. Given the high prevalence of CMHDs, the current policy drive promoting routine outcome monitoring across these settings, and the likely significant cost of such widespread monitoring of highly prevalent conditions, there is an urgent need for evidence to guide further developments in policy and clinical practice. We therefore aimed to conduct a comprehensive, up‐to‐date systematic review of the use of PROMs in CMHDs, including studies across primary care, multidisciplinary mental health care, and psychological therapy settings. We aimed to include measures of social functioning and health‐related quality of life (QoL) as well as measures of symptoms of depression and anxiety, because functioning and QoL measures may also influence clinician treatment decisions or patient involvement in their own care, or both, and therefore outcomes for patients.

PROMs can be used as a tool to identify patients with CMHDs whose problems would otherwise be missed, but in this review we were not concerned with the use of PROMs as a screening tool. This was the subject of a previous review, Gilbody 2008. In this review we were concerned with the use of PROMS in monitoring patients' progress and response to treatment, which requires feedback and assessment of the results at follow‐up, after a period of treatment, rather than screening or assessment only before diagnosis or at the point of diagnosis.

We conducted this review according to the methods set out in the protocol (Kendrick 2014).

Objectives

To assess the effects of routine measurement and feedback of the results of PROMs during the management of CMHDs in 1) improving the outcome of CMHDs; and 2) in changing the management of CMHDs.

Methods

Criteria for considering studies for this review

Types of studies

We included randomised controlled trials (RCTs), including cluster RCTs and RCTs randomised at the level of individual participants. We excluded non‐randomised trials.

We planned to include cluster trials where clusters were allocated to intervention or control arms using a quasi‐randomised method, such as minimisation, to avoid significant imbalance between arms arising by chance when the number of clusters is relatively small, but planned to exclude quasi‐randomised trials where allocation was at the level of individual participants. We planned to exclude cross‐over trials because of the very high risk of carry‐over of the intervention into the control arm after participating clinicians or patients cross over. We also planned to exclude uncontrolled before and after trials, and observational studies. However, none of these types of studies was identified.

Types of participants

Participant characteristics

We selected studies which included participants with common mental health disorders (CMHDs) aged 18 years and over, of both genders and all ethnic groups. We excluded studies in child and adolescent treatment settings, as the diagnostic categories included within the group recognised as CMHDs are limited to adults, and in addition the presence of a parent or other carer accompanying a child or adolescent patient complicates the issues of who is providing responses to PROMs administered to monitor the outcome of treatment, and to whom feedback of the results is given.

Diagnosis

We included adult patients with any CMHD, including both those with formal diagnoses according to the criteria of the DSM (APA 2000) or ICD (WHO 1992), and those diagnosed through clinical assessment only, unaided by formal reference to specific diagnostic criteria. The specific disorders included were:

-

depression (including major depression, dysthymia, and minor or mild depression);

-

mixed anxiety and depression;

-

generalised anxiety disorder (GAD);

-

phobias;

-

obsessive‐compulsive disorder (OCD);

-

panic disorder;

-

post‐traumatic stress disorder (PTSD);

-

adjustment reaction.

We included studies in which the diagnoses of the majority of participants were reported as CMHDs, even if a proportion of participants were not given a specific diagnosis, or were reported as having relationship or interpersonal difficulties, 'somatoform disorders', 'other' diagnoses not further specified, or 'administrative codes'. This was a change from the protocol as we planned originally to include only studies with participants specifically diagnosed with one of the disorders listed above, but after discussion within the review study group we decided to include these studies in order to be able to include studies which had a majority of participants diagnosed with CMHDs (see section on Differences between protocol and review).

We excluded studies with more than 10% of patients diagnosed specifically with psychoses, substance use disorders, learning disorders or dementia. We also excluded studies with more than 10% of participants diagnosed with eating disorders, as they are a separate group of disorders not usually included within the group recognised as CMHDs, and the PROMs used for eating disorders are less generic and specifically concentrate on eating habits and weight control measures. This was also a change from the protocol as we planned to exclude studies with any participants at all in these categories, but again, after discussion within the review study group we decided to include studies with fewer than 10% of participants with these diagnoses, in order once again to be able to include studies which had a majority of participants with CMHDs (see section on Differences between protocol and review).

Where studies did not report the diagnoses of participants, we attempted to contact the authors to request information on the participant diagnoses, and whether they would have met the review inclusion and exclusion criteria. This was an addition to the protocol (see section on Differences between protocol and review).

We carried out sensitivity analyses omitting studies which did not report specific diagnoses of CMHDs for 20% or more of their participants, to determine whether these decisions affected the findings.This was an addition to the protocol agreed once again after discussion within the review study group (see section on Differences between protocol and review).

Co‐morbidities

Participants diagnosed with or without co‐morbid physical illnesses were included to ensure as representative a sample as possible.

Setting

Three settings were included: primary care (where the clinicians were all primary care physicians and available treatments post‐assessment included either drug therapy or referral for psychological therapy); multidisciplinary mental health care (where the clinicians included psychiatrists, psychologists, mental health social workers or mental health nurses, and available treatments included drugs, psychological therapies, and physical treatments); and psychological therapies (where the clinicians were psychologists, social workers or nurses and available treatments were all psychological).

Subset data

We planned to include trials that provided data on a relevant subset of their participants, for example studies which compared usual care in one arm with routine outcome monitoring in another, even if there was a third arm with a more complex intervention, but we did not identify any such trials. We also planned to include trials that included a subset of participants who met our criteria for the review, for example in terms of the types of disorder or age range, if the data for those participants could be extracted separately from the rest of the trial sample, but again we did not identify any such trials.

Types of interventions

Experimental intervention

The intervention consisted of augmenting the assessment and management of CMHDs by both of the following.

-

Measuring patient reported outcomes (PROMs), including self‐complete or administered measures of:

-

depressive symptoms, for example the PHQ‐9 (Spitzer 1999). We planned to include the HADS depression subscale (HAD‐D) (Zigmond 1983); BDI (Beck 1961) and BDI‐II (Beck 1996), but found no relevant studies which used them as PROMs;

-

anxiety symptoms, for example the Beck Anxiety Inventory (BAI) (Wetherall 2005). We planned to include the GAD‐7 (Spitzer 2006) but no trials used it;

-

health‐related QoL, for example with the Medical Outcomes Study Short Form SF‐36 (Wells 1989) or SF‐12 (Ware 1996). We planned to include the EuroQol five item EQ‐5D questionnaire (Dolan 1997) but no trials used it;

-

symptoms, individual functioning, and social functioning as composite measures, for example the 45‐item Outcomes Questionnaire (OQ‐45) (Lambert 2004), and the Outcome Rating Scale (ORS) (Miller 2003). We planned to include the Clinical Outcomes in Routine Evaluation Outcome Measure (CORE‐OM) (Barkham 2006) but no trials used it

-

-

Feeding the results back to the treating clinician, to both the clinician and the patient, or to the patient only.

We also planned to include studies using the following as PROMs but found no relevant studies:

-

measures of depression and anxiety combined, for example the self‐complete General Health Questionnaire (GHQ‐28) (Goldberg 1972) or the administered Mini‐International Neuropsychiatric Interview (MINI) (Sheehan 1998); and

-

measures of social functioning, for example the WSAS (Mundt 2002) or the Social Adjustment Scale (SAS) (Cooper 1982)).

Comparator intervention

The comparator was usual care for CMHDs without feeding back the results of PROMs. Routine care includes usual patient‐clinician interaction with non‐standardised history‐taking, investigation, referral, intervention and follow‐up. Trials were excluded if the comparator interventions involved the use of feedback of the results of PROMs as a clinical tool to inform management of the participants. Measures of depression, anxiety, social functioning and quality of life may have been assessed independently by researchers in both the intervention and control conditions to determine the effects of the intervention, but the active component, which was the feeding back of this information to the clinician, or to the patient, or to both clinician and patient, had to occur only in the intervention arm.

Excluded interventions

We excluded studies where the intervention arm was subject to additional components over and above the feedback of PROM results, including pharmacological or psychological treatments that were not available to both the intervention and control groups. A number of more complex interventions have been advocated to improve the quality of care of people with CMHDs including case management (Simon 2004) and collaborative care (Archer 2012), and these usually include feeding back the results of PROMs at initial assessment and follow‐up to inform treatment. However, this review was limited to the effects of feedback of the results of PROMs alone, rather than their use as a component of complex interventions which also enhanced the process of care through case management, collaborative care, active outreach or other systems or processes over and above usual care. It would not have been possible to distinguish the effects of outcome monitoring from other active components in such studies.

Types of outcome measures

Studies that met the above inclusion criteria were included regardless of whether they reported on the following outcomes.

Primary outcomes

1. Mean improvement in symptom scores

Mean improvement in symptom scores (and standardised effect size) from baseline to follow‐up on a symptom‐specific scale, which was either:

-

an interviewer‐rated measure; or

-

a self‐complete questionnaire measure.

Measures used included:

-

interviewer‐rated measures of depression and anxiety including the Diagnostic Interview Schedule (DIS) for DSM‐III disorders (Robins 1981); and

-

self‐complete measures including the PHQ‐9 (Spitzer 1999); BDI (Beck 1961) and BDI‐II (Beck 1996) for depression; the BAI (Wetherall 2005) for anxiety; and the Hopkins symptom checklist SCL‐90 (Derogatis 1974; Derogatis 1983) for both anxiety and depression.

We also planned to include, but found no relevant studies which used the following as primary outcome measures:

-

the interviewer‐rated Hamilton Depression Rating Scale (HDRS or HAMD) (Hamilton 1960); Montgomery‐Asberg Depression Rating Scale (MADRS) (Montgomery 1979); Structured Clinical Interview for DSM‐IV disorders (SCID) (First 1997); and the interviewer‐rated version of the Quick Inventory of Depressive Symptomatology (QIDS) (Trivedi 2004);

-

the self‐complete Community Epidemiologic Survey Depression (CES‐D) scale for DSM‐III depression (Radloff 1997); Zung depression scale (SDI) (Zung 1965); GAD‐7 anxiety scale (Spitzer 2006); GHQ (Goldberg 1972); HADS (Zigmond 1983); Hopkins symptom checklist (Derogatis 1974; Derogatis 1983); Clinical Interview Schedule, Revised (CIS‐R) for ICD‐10 disorders (Lewis 1992); and the self‐complete version of the Quick Inventory of Depressive Symptomatology (QIDS) (Trivedi 2004).

2. Health‐related quality of life

Health‐related quality of life, assessed using specific measures at baseline and follow‐up, including the SF‐36 (Wells 1989). We also planned to include the EQ‐5D (Dolan 1997) but identified no relevant trials which used it.

3. Adverse events, including:

-

numbers and types of antidepressant drug side‐effects;

-

numbers of incidences of self‐harm, and

-

numbers of suicides.

Secondary outcomes

4. Changes in the management of CMHDs

Changes in the management of CMHDs following administration and feedback of the results of PROMs, including:

-

number of changes in drug prescribing (a new prescription, a change in dose or type of drug, or the ending of a prescription);

-

number of referrals for psychological assessment or treatment;

-

number of referrals for psychiatric assessment or treatment.

These are relevant secondary outcomes, as they indicate more proactive care, which might lead to more positive outcomes, although a change in management cannot by itself be regarded as necessarily a positive outcome.

5. Social functioning

Social functioning assessed using specific measures at baseline and follow‐up, for example the WSAS (Mundt 2002). We also planned to include the SAS (Cooper 1982) but identified no relevant trials which used it.

6. Costs, including:

-

the direct costs of administering PROMs and delivering feedback of the results;

-

costs to the health service, including consultations, prescriptions, outpatient attendances and hospital admissions; and

-

societal costs, including costs to the patient and to society in terms of loss of employment and costs of sickness benefits.

Timing of outcome assessment

We planned to divide the reporting of research outcomes into:

-

short‐term, up to six months after baseline assessment; and

-

long‐term, beyond six months.

Hierarchy of outcome measures

We planned to select self‐complete research outcome measures in preference to interviewer‐rated measures of symptoms, social functioning or health‐related quality of life as they are less prone to detection bias due to unblinding of the researcher assessing the outcome. In completing an interviewer‐rated measure the researcher filters all patient reported responses, while for self‐complete measures only those responses which the patient chooses to discuss with the researcher can be influenced by an unblinded researcher.

Search methods for identification of studies

The Cochrane Depression, Anxiety and Neurosis Review Group's Specialised Register (CCDANCTR)

The Cochrane Depression, Anxiety and Neurosis Group (CCDAN) maintain two clinical trials registers at their editorial base in Bristol, UK: a references register and a studies‐based register. The CCDANCTR‐References Register contains over 39,000 reports of RCTs in depression, anxiety and neurosis. Approximately 50% of these references have been tagged to individual, coded trials. The coded trials are held in the CCDANCTR‐Studies Register and records are linked between the two registers through the use of unique Study ID tags. Coding of trials is based on the EU‐Psi coding manual using a controlled vocabulary (please contact the CCDAN Trials Search Co‐ordinator for further details). Reports of trials for inclusion in the Group's registers are collated from routine (weekly), generic searches of Ovid MEDLINE (1950 ‐), EMBASE (1974 ‐) and PsycINFO (1967 ‐); quarterly searches of the Cochrane Central Register of Controlled Trials (CENTRAL); and review specific searches of additional databases. Reports of trials are also sourced from international trials registers through the World Health Organization's trials portal (the Clinical Trials Registry Platform (ICTRP)) and the handsearching of key journals, conference proceedings and other (non‐Cochrane) systematic reviews and meta‐analyses.

Details of CCDAN's generic search strategies (used to identify RCTs) can be found on the Group's website.

Electronic searches

1. The CCDANCTR (References and Studies Register) was initially searched to 30 May 2014 using the following terms:

#1 ("affective disorder*" or “common mental disorder*” or “mental health” or "acute stress" or adjustment or anxi* or compulsi* or obsess* or OCD or depressi* or dysthymi* or neurosis or neuroses or neurotic or panic or *phobi* or PTSD or posttrauma* or "post trauma*" or “stress disorder*” or trauma* or psychotrauma*):ti,ab,kw,ky,emt,mh,mc

#2 PROMS

#3 (“patient reported outcome*” or “patient reported assessment*” or “patient reported symptom*”)

#4 “patient outcome*”

#5 ((patient* or client* or tailored) NEAR2 feedback)

#6 (patient* NEXT ("self assess*" or "self report" or "self monitor*"))

#7 (patient* NEAR2 progress*)

#8 "client report*"

#9 ((active or routine* or regular*) NEAR2 (feedback or measurement* or monitor*))

#10 (monitor* and feedback*)

#11 (“feedback to” or "feed back to" or "fed back to"):ab

#12 ((symptom* or treatment) NEXT monitor*)

#13 (monitor* NEAR2 (“common mental disorder*” or anxi* or compulsi* or obsess* or OCD or depressi* or neurosis or neuroses or neurotic or panic or *phobi* or PTSD or posttrauma* or "post trauma*" or "acute stress" or “stress disorder*” or trauma*))

#14 ((follow‐up* or "follow up*") and assess*):ti

#15 (needs NEAR3 assess*)

#16 (outcome* NEAR (clinical or feedback or manag* or monitor*)):ti

#17 “severity questionnaire*”

#18 severity:ti,kw,ky and (assess* or measure* or outcome* or questionnaire* or score*):ti

#19 (“case management” or “enhanced care”)

#20 (#2 or #3 or #4 or #5 or #6 or #7 or #8 or #9 or #10 or #11 or #12 or #13 or #14 or #15 or #16 or #17 or #18 or #19)

#21 (#1 and #20)

[Key. ab:abstract; emt:EMTREE headings; kw:CRG keywords; ky:other keywords; mc:MesH check words; mh:MeSH headings]

Due to the nature of the intervention (patient reported outcome measures) the search strategy was designed to favour specificity (precision) over sensitivity (recall of all potentially relevant reports). A sensitive search would retrieve too much noise as most of the measures and questionnaires under review are much more frequently used to assess symptom severity or quality of life as research outcomes in treatment trials in patients with CMHDs than as PROMs used for clinical assessment.

2. Complementary searches were conducted on the following bibliographic databases using relevant subject headings (controlled vocabularies) and search syntax that were appropriate to each resource. Searches initially performed to 5 June 2014:

(i) Ovid PsycINFO (all years)

Although PsycINFO is routinely searched to inform the CCDANCTR, we conducted an additional search of this database to increase the sensitivity of our search methods, adding wait‐list control and treatment‐/care‐as‐usual to CCDAN's standard RCT filter. The search strategy is described in Appendix 1.

(ii) PROM Bibliography database (all years to 2005)

The PROM Bibliography was searched for RCTs in mental health. This database, which is available through The Patient‐Reported Outcomes Measurement Group at the University of Oxford, was first published in 2002 with funding from the Department of Health (DH). It was further developed with DH funding to 2005 and contains over 16,000 records relating to patient reported outcome measures.

(iii) Web of Science (WoS): Science Citation Index (cited reference search, all years as appropriate)

3. International trial registries were also searched on 19 February 2015 and 9 April 2015 via the World Health Organization's trials portal (ICTRP) and ClinicalTrials.gov to identify unpublished or ongoing studies. We searched for depression OR depressive OR mental OR psychiatric OR anxiety OR PTSD OR phobia OR OCD AND feedback.

There were no restrictions on date, language or publication status applied to the searches.

4. Update searches 2015

An update search was performed on 18 May 2015 to identify additional RCTs eligible for inclusion. At this time we thought it appropriate to validate the 2014 searches by checking the (a) the provenance of included studies (to date) and (b) information contained in the title,abstract and subject heading fields of study reports in MEDLINE, EMBASE and PsycINFO. This exercise revealed that eight of the eleven included studies (>70%) were only identified from screening reference lists or from the Web of Science citation search and four of these studies made no mention of the patients' mental health condition. The searches were overhauled and the PsycINFO and CCDANCTR databases re‐searched all years to 18 May 2015, together with a search of the Cochrane Library (Appendix 2). A further citation search of WoS was also conducted, to 27 May 2015.

5. Update searches 2016

In compliance with MECIR conduct standard 37 we ran an update search within 12 months of publication (on 25 May 2016), including the following databases: PsycINFO, CCDANCTR, CENTRAL, Web of Science, and the ICTRP/ClinicalTrials.gov international trial registries. These results have not yet been incorporated into the review.

Searching other resources

Grey literature

Google Scholar (top 100 hits) and Google.com were searched (verbatim) for: "Patient Reported Outcome Measures" and "mental health" and (randomised or randomized). Search results were screened for relevant reports and reviews.

Reference lists and correspondence

We screened reference lists (of trial reports and systematic reviews) to identify additional studies missed from the original electronic searches (including unpublished or in‐press citations); used the related articles feature in PubMed; and contacted other experts and trialists in the field for information on unpublished or ongoing studies, or to request additional trial data. 'Patient reported outcome measures' and 'PROMs' are relatively recently adopted terms in the literature. For earlier studies, where the terminology used may be ambiguous, we had to rely more on these informal methods of discovery.

Data collection and analysis

Selection of studies

Two review authors (TK and ME‐G) independently screened titles and abstracts for inclusion of all the potential studies identified as a result of the search, coded as 'retrieve' (eligible or potentially eligible or unclear) or 'do not retrieve'. We resolved disagreements through discussion and consultation with a third author (MM). We retrieved the full‐text study reports or publications and the same two review authors independently screened the full texts, identified studies for inclusion, and identified and recorded reasons for exclusion of the ineligible studies. Again, disagreements were resolved through discussion and consultation with the third author MM. We excluded duplicate records and collated multiple reports that related to the same study so that each study rather than each report became the unit of interest in the review. We recorded the selection process in sufficient detail to complete a PRISMA flow diagram (Moher 2009) and 'Characteristics of excluded studies' table.

Data extraction and management

We designed and used a data collection form which was piloted on one study in the review to extract study characteristics and outcome data. Five review authors (TK, ME‐G, AB, LA, ALB) independently extracted study characteristics and outcome data from the included studies. We extracted the following study characteristics.

-

Methods: study design (cluster or individual randomisation), total duration of study, number of study centres and location, study setting, withdrawals, and dates of study.

-

Participants: n, mean age, age range, gender, severity of condition, diagnostic criteria (clinical only, DSM or ICD, etc.), inclusion criteria, exclusion criteria, and co‐morbidities.

-

Interventions: intervention including the specific instrument(s) used and whether the results were fed back to the treating clinician only or also to the participant; whether education about interpretation and an algorithm were also provided; and details of treatment as usual provided to the comparison group.

-

Outcomes: primary and secondary outcomes specified and collected, and time points reported.

-

Notes: funding for trial, and notable conflicts of interest of trial authors.

We noted in the 'Characteristics of included studies' table if outcome data were not reported in a usable way. We resolved disagreements by consensus and also by involving a third person (MM). Two review authors (TK, ME‐G) transferred data into Review Manager (RevMan) (RevMan 2014), and double‐checked that data were entered correctly by comparing the data presented in the systematic review with the study reports. Another two review authors (BS, AG) spot checked the accuracy of data extracted, against the original study reports.

Main comparison

-

Treatment informed by feedback of patient reported outcome measures compared with treatment as usual.

Assessment of risk of bias in included studies

Two review authors (TK and ME‐G) independently assessed the risk of bias for each study using the criteria outlined in the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011). We resolved any disagreements by discussion and by involving other authors (MM, BS, RC, SG). We assessed the risk of bias according to the following domains:

-

Random sequence generation.

-

Allocation concealment.

-

Blinding of participants and clinicians (performance bias, which will be high due to the nature of the intervention).

-

Blinding of researchers conducting outcome assessments (detection bias).

-

Incomplete outcome data.

-

Selective outcome reporting.

-

Other bias.

We judged each potential source of bias as high, low or unclear and provided supporting quotations from the study report where available, together with a justification for our judgment in the 'Risk of bias' table. We summarised the risk of bias judgements across different studies for each of the domains listed. We considered blinding separately for different key outcomes where necessary. Where information on risk of bias related to correspondence with a trialist, we noted this in the 'Risk of bias' table.

When considering treatment effects, we took into account the risk of bias for the studies that contributed to that outcome.

Measures of treatment effect

Continuous data

We calculated mean differences (MD) and the associated 95% confidence interval (CI) for continuous outcomes where there was a common measure across studies, and standardised mean differences (SMD) and the associated 95% CI where different scales were used to measure the same underlying construct. We entered the data presented as a scale with a consistent direction of effect.

Dichotomous data

We carried out a narrative analysis to describe categorical outcomes. See Differences between protocol and review.

Unit of analysis issues

Cluster randomised trials

Clustering by clinician, clinic, practice or service would be the preferred design over randomising individual participants since a clustered design reduces the risk of contamination between arms, as the PROMs are not routinely available in the control settings and are therefore much less likely to be used inadvertently in control patients. However, failure to account for intra‐class correlation in clustered studies is commonly encountered in primary research and leads to a 'unit of analysis' error (Divine 1992) whereby P values are spuriously low, CIs unduly narrow, and statistical significance overestimated, causing type I errors (Bland 1997; Gulliford 1999). For studies that employed a cluster randomisation, we sought evidence that clustering was accounted for by the authors in their analyses.

Studies with multiple treatment groups

Where multiple trial arms were reported in a single trial, we included all relevant arms that compared treatment as usual with routine outcome monitoring.

Where we found three‐armed trials that compared PROMs fed back to the clinician only, versus PROMs fed back to both the clinician and patient, versus treatment as usual, we divided the control group between the two comparisons so as not to use the same data twice, which would constitute a unit of analysis error. However, we also performed a sensitivity analysis excluding any trials with this three‐arm design from the subgroup analysis (see below) to see whether this significantly affected the results of the subgroup analysis.

Dealing with missing data

We contacted investigators in order to verify key study characteristics and obtain missing numerical outcome data, where possible. We documented all correspondence with trialists and report which trialists responded below. (If standard deviations were missing, we planned to calculate them, if possible, from the available information reported (including 95% CIs and P values) or impute standard deviations from similar studies using the same instruments, but in the event we did not need to do this).

Assessment of heterogeneity

Between‐study heterogeneity was assessed using the I2 statistic (Higgins 2003), which describes the percentage of total variation across studies that is due to heterogeneity rather than chance. A rough guide to interpretation is as follows: 0% to 40% might not be important; 30% to 60% may represent moderate heterogeneity; 50% to 90% may represent substantial heterogeneity; and 75% to 100% considerable heterogeneity. We investigated the sources of heterogeneity as described below where the I2 value was greater than 50%. Where I2 was below 50% but the direction and magnitude of treatment effects suggested important heterogeneity, we also investigated the potential sources.

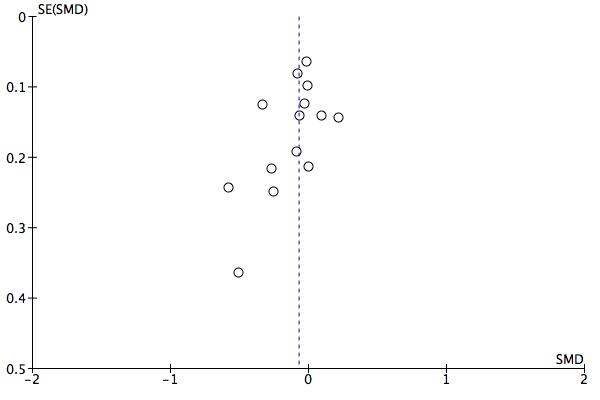

Assessment of reporting biases

We created funnel plots where feasible and where there were sufficient studies (that is 10) (Egger 1997) to investigate possible publication bias. Funnel plot tests for asymmetry were separately conducted in STATA (StataCorp. 2015), using the metabias command.

Data synthesis

We undertook meta‐analyses only where it was meaningful, that is where the PROM feedback interventions, participants and the underlying clinical question were similar enough for pooling to make sense. We pooled change scores as a first preference where these were available, checking assumptions about the approximate normality of data by ensuring that the difference between the mean and lowest or highest possible value divided by the standard deviation was greater than two. Less than two would indicate some skew and less than one would indicate substantial skew. We planned not to attempt pooling for data that were substantially skewed and where the skew could not be reduced by transforming the data. We planned to describe skewed data as medians and interquartile

ranges.

We anticipated significant heterogeneity between studies (I2 value of over 50%) as we were including a range of CMHDs, a range of settings, and both self‐complete and administered outcome measures. Therefore we used a random‐effects model when combining data to minimise the effect of heterogeneity between studies. Where studies were combined which used outcome measures that scored treatment effects in opposite directions, the mean values of one set of studies were multiplied by ‐1 to ensure the scales identified benefited in the same direction, in accordance with section 9.2.3.2 of the Cochrane Handbook (Deeks 2011).

Where cost data were presented and a formal cost‐effectiveness analysis had been undertaken, we planned simply to describe the methods and results. We did not plan to attempt formal statistical pooling of cost data because studies often adopt different perspectives; account for different types of cost data; use different methods of discounting future healthcare costs and benefits; are conducted at different points in time; and are conducted in different countries with varying funding and reimbursement systems, making international comparisons difficult.

Subgroup analysis and investigation of heterogeneity

We planned to conduct the following six subgroup analyses, which should be regarded as exploratory since they are observational and not based on randomised comparisons. We planned to restrict these six subgroup analyses to the three primary outcomes (namely improvement in symptom scores, health‐related quality of life, and adverse effects).

-

Whether the setting of the study (primary care, multidisciplinary mental health services, or psychological therapies) influenced the success of the strategy.

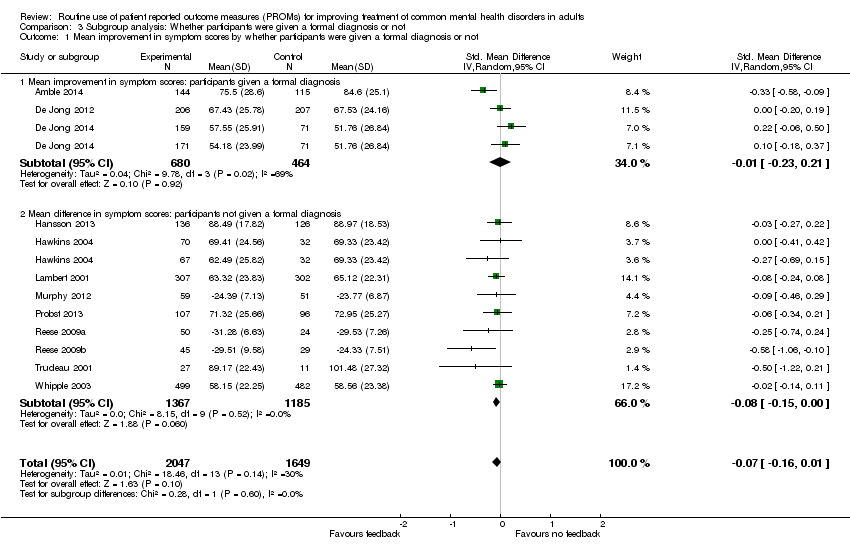

-

Studies in which a formal diagnosis (according to DSM or ICD criteria) was made prior to treatment using a validated assessment, versus studies of participants diagnosed on clinical assessment only, as the formally diagnosed group were likely to be more homogeneous and more alike in their responses to PROMs.

-

Studies of participants aged 18 to 65 years versus those with participants aged over 65 years, as the older age group may have more complex disorders with co‐morbid cognitive changes and it is plausible that recovery follows a different pathway.

-

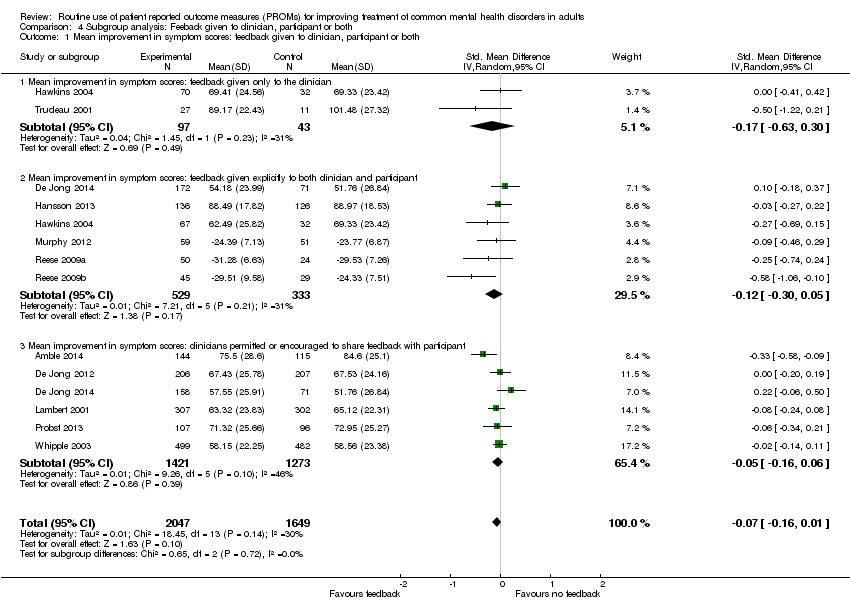

Studies where feedback of the results of PROMs was given only to the clinician versus studies where feedback was given to both clinician and participant, as the previous review by Knaup 2009 showed a greater effect when patients were also given feedback.

-

Studies where feedback of the results of PROMs was given only to the participating patient versus studies where feedback was given to the clinician only, or to both clinician and patient, if any such studies were identified (we thought this was unlikely given the results of previous systematic reviews of outcome monitoring in mental health, which have not identified any studies of feedback to patients alone).

-

Studies where feedback to the clinician included treatment instructions or an algorithm for actions to be taken for particular results, compared to studies where feedback was limited to the results of the PROM alone, to determine whether treatment recommendations in addition to PROM results influenced the results.

Post‐hoc subgroup analyses

We decided post‐hoc to conduct an additional subgroup analysis, comparing studies involving Michael Lambert, the originator and owner of the OQ‐45 system, with studies not involving him, to explore whether potential benefits of the system were identified in independent evaluations. This was because the OQ‐45 was the PROM used in the large majority of studies in the meta‐analyses, and Michael Lambert was author or co‐author of a significant proportion of those studies (see section on Differences between protocol and review).

We also decided during the course of the review to meta‐analyse results for subgroups of participants within studies who were identified as being at higher or lower risk for treatment failure, which was determined by the trajectory of their initial response to therapy. The low risk group was described as 'on‐track' (OT) for a good clinical response, and the high risk group as 'not on track' (NOT). This was a post‐hoc change to the methods which we agreed due to the fact that several identified studies reported potentially important findings in analyses of outcomes for subgroups of OT and NOT participants. One comparison included only the NOT subgroup, comparing outcomes in terms of symptom scores between feedback and non‐feedback arms. The second comparison included both the OT and NOT subgroups, comparing the number of treatment sessions received between feedback and non‐feedback arms, and including a formal test for subgroup differences to look for evidence of differences between OT and NOT subgroups. This was a further change from the protocol, as the number of treatment sessions was a secondary outcome, and originally we planned to conduct subgroup analyses restricted to the three primary outcomes, namely symptoms, health‐related quality of life, and adverse effects (see section on Differences between protocol and review).

Sensitivity analysis

We planned to conduct the following sensitivity analyses to explore their effects on the results obtained in the review, and to test the robustness of decisions made in the review process:

-

Whether the mode of administration (self‐complete versus clinician‐rated) influenced the success of the strategy, by re‐analysing after removing studies using clinician‐rated PROMs and seeing whether the result was significantly different.

-

Whether cluster randomised studies produced a different result from non‐clustered studies, to see whether possible contamination between arms in non‐clustered designs reduced the difference between arms, by re‐analysing after removing non‐clustered studies.

-

Within cluster RCTs, whether adjustment for unit of analysis error influenced the results, to test the robustness of the results arising from non‐adjusted analyses.

-

Whether the inclusion of quasi‐randomised cluster trials significantly affected the results, by re‐analysing after removing quasi‐randomised cluster trials.

-

Whether losing the data from three‐arm trials that compared PROMS fed back to the clinician only, versus PROMS fed back to both the clinician and patient, versus treatment as usual, made a significant difference to the results of the subgroup analysis (4 above), by excluding such trials from the subgroup analysis.

'Summary of findings' tables

We developed 'Summary of findings' tables to summarise the key findings of the review, for the populations in primary care, multidisciplinary mental health care, and psychological therapy settings. We tabulated the comparisons between PROMs and usual care in terms of effects on participant outcomes including symptoms, social functioning, quality of life and adverse effects; and on the process of care including drug prescriptions and referrals. Decisions on which measurements to incorporate into the 'Summary of findings' table were based on those most relevant to clinical practice, taking into consideration the specific nature of the scale and also the time points at which measurements were made. We used the GRADE criteria to assess the body of evidence for each comparison.

Results

Description of studies

Seventeen studies met our inclusion criteria: Amble 2014; Berking 2006; Chang 2012; De Jong 2012; De Jong 2014; Hansson 2013; Hawkins 2004; Lambert 2001; Mathias 1994; Murphy 2012; Probst 2013; Reese 2009a; Reese 2009b; Scheidt 2012; Simon 2012; Trudeau 2001; and Whipple 2003

Results of the search

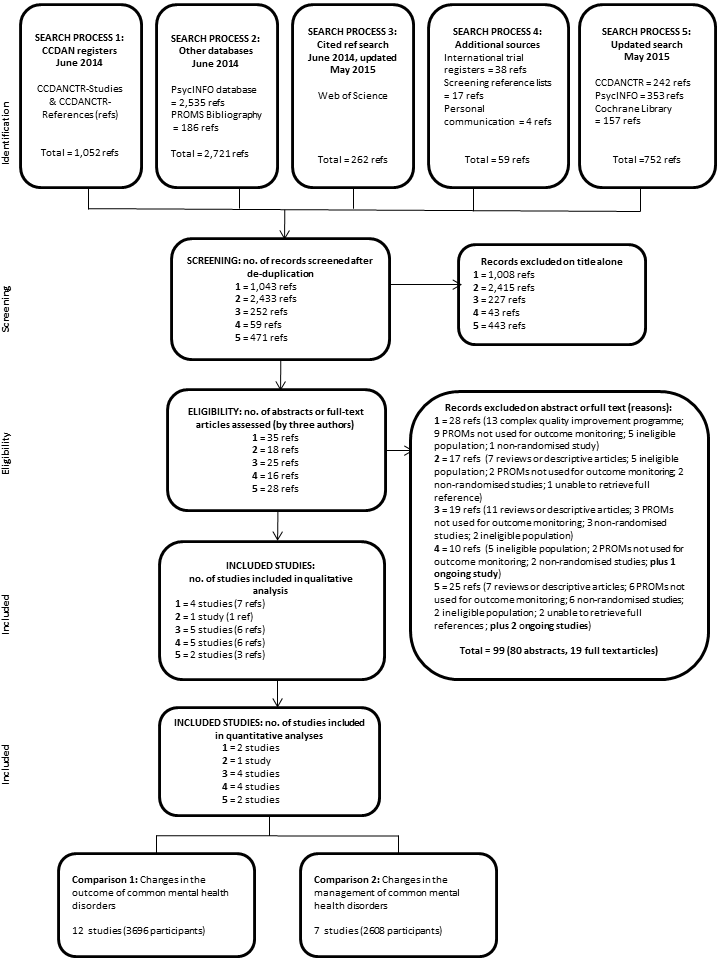

The initial searches of CCDANCTR, OVID PsycINFO and PROM bibliographies (to 30 May 2014) yielded 1052, 2535, and 186 references respectively (see PRISMA diagram, Figure 1). The WoS citation search to 5 June 2014 yielded 262 references, and we identified a further 59 references through searching the international trial registers, screening reference lists, and personal communication with trial authors. An updated search (to 18 May 2015) was conducted to validate identified references by re‐searching PsycINFO and CCDANCTR along with The Cochrane Library, which yielded a further 752 references. Following de‐duplication, we screened a total of 4258 references obtained through these searches, of which we excluded 4136 on assessment of the title alone. Of the remaining 122, 99 were excluded on the basis of reading and discussing the abstract (80) or full‐text article (19), including 25 reviews or descriptive articles, 22 where PROMs were not used for outcome monitoring, 19 with ineligible populations (adolescents, severe mental illness, eating disorders, or substance misuse), 14 non‐randomised studies, 13 which included complex quality improvement programmes, three because we were unable to retrieve full references, and three ongoing studies (NCT01796223; NCT02023736; NCT02095457); see PRISMA diagram (Figure 1). Further information is given below on the 19 studies excluded on the basis of reading and discussing the full‐text articles (see Excluded studies).

PRISMA flow diagram

In compliance with MECIR conduct standard 37 we ran an update search within 12 months of publication (on 25 May 2016), including the following databases: PsycINFO (which identified 72 references), CCDANCTR (29 references), CENTRAL (37), Web of Science (139), and the ICTRP/ClinicalTrials.gov international trial registries (28): in total 305, and de‐duplicated 281 references. This update search identified two additional completed studies (Gibbons 2015 and Rise 2016) which are awaiting classification, and four additional ongoing studies (Metz 2015; NCT02656641; NTR5466; and NTR5707). These results will be fully incorporated into the review at the next update (as appropriate).

The remaining 23 references described 17 included studies, of which 13 (Amble 2014; De Jong 2012; De Jong 2014; Hansson 2013; Hawkins 2004; Lambert 2001; Murphy 2012; Probst 2013; Reese 2009a; Reese 2009b; Simon 2012; Trudeau 2001; and Whipple 2003) were included in quantitative meta‐analyses as they used comparable outcome measures (either the Outcome Questionnaire (OQ‐45, Lambert 2004) or Outcome Rating System (ORS, Miller 2003), see interventions below), and the remaining four (Berking 2006; Chang 2012; Mathias 1994; Scheidt 2012) were included in the qualitative assessment (see PRISMA flow diagram, Figure 1).

The results of attempts to clarify study details through contacting authors are given in the table below. Contact details were unobtainable for the authors of Mathias 1994. Of those contacted seven authors responded (with regard to De Jong 2012; De Jong 2014; Haderlie 2012; Hansson 2013; Hawkins 2004; Puschner 2009; Reese 2009a; Reese 2009b; and Trudeau 2001;), and the remainder failed to respond (with regard to Chang 2012; Lambert 2001; Probst 2013; Simon 2012; and Whipple 2003).

Included studies

The individual studies are described in detail in the Characteristics of included studies table below.

Design

Thirteen studies were randomised at the individual level and four were cluster randomised (Chang 2012; Mathias 1994; Reese 2009b; Scheidt 2012). Fourteen studies had one intervention arm in which feedback of patient reported outcomes was given, and one control arm in which patients completed the measures but the results were not fed back. De Jong 2014; Hawkins 2004; and Trudeau 2001 included three arms: De Jong 2014 and Hawkins 2004 included two intervention arms, one in which feedback was given to the clinician only and one where feedback was given to both clinician and patient; and Trudeau 2001 included an additional control arm in which patients were not asked to complete the measures at all.

Sample sizes

The number of participants per study ranged from 96 to 1629 with a total of 8787 participants. A substantial proportion of participants were not used in data analysis due to withdrawal or loss to follow‐up, with all but two studies (De Jong 2012; Hansson 2013) utilising only a per protocol analysis. This number totaled 2650 (30.1%).

Setting

The majority of the studies (nine) were carried out in the USA. The remainder were carried out in Germany (three), The Netherlands (two), Sweden (one), Norway (one) and Ireland (one). Fifteen studies were conducted exclusively in outpatient settings, and two, Berking 2006 and Probst 2013, were inpatient studies. One study (Amble 2014) included both inpatients and outpatient clinics. Seven studies were multi‐centre with the remainder confined to one site.

Two studies were based in primary care settings (Chang 2012; Mathias 1994); nine in multidisciplinary mental health care settings (Amble 2014; Berking 2006; De Jong 2012; De Jong 2014; Hansson 2013; Hawkins 2004; Probst 2013; Simon 2012; Trudeau 2001); and six in psychological therapy settings (Lambert 2001; Murphy 2012; Reese 2009a; Reese 2009b; Scheidt 2012; Whipple 2003).

Participants

The 17 included studies comprised 8787 randomised participants (pre‐attrition total), of whom 6137 (69.9%) provided follow‐up data and were included in the study analyses. The age of participants ranged between 18 to 75 years, but in several studies the range was not reported. The median age across the studies was 35.1 years. The proportion of women among participants ranged from 58% to 73%, although there was inconsistency in reporting, with some studies providing the proportion of women among participants randomised, and some the proportion among participants included in the analysis. Reporting of demographic details was quite variable between studies, with marital status and employment being the most commonly recorded demographics. In studies which reported on ethnicity, the majority of participants were white.

Fourteen studies reported specific diagnoses for their participants, of which three used ICD diagnostic criteria (Amble 2014; Berking 2006; Scheidt 2012), and three used DSM criteria (De Jong 2012; De Jong 2014; Mathias 1994). The remaining studies characterised participants on the basis of clinical diagnoses rather than diagnostic criteria. Three studies did not report the specific diagnoses of their participants (Reese 2009a; Reese 2009b; Trudeau 2001), and five did not assign a specific diagnosis of a CMHD to 20% or of their participants, reporting that they had interpersonal or relationship difficulties, other diagnoses including personality or behavioural disorders, or were given administrative codes (Amble 2014; De Jong 2014; Lambert 2001; Murphy 2012; Whipple 2003).

Interventions

Feedback was usually given in the form of scores on the PROMs, together with information on whether this meant the participant had improved or not. Feedback was given only to the clinician in six studies: Chang 2012; Hawkins 2004 (one arm); Mathias 1994; Probst 2013; Scheidt 2012; and Trudeau 2001. Feedback was given explicitly to both the clinician and participant in seven: De Jong 2014 (one arm); Hansson 2013; Hawkins 2004 (one arm); Murphy 2012; Reese 2009a; Reese 2009b; and Simon 2012. In the other seven studies clinicians were permitted or encouraged to share feedback with the participant: Amble 2014; Berking 2006; De Jong 2012; De Jong 2014 (one arm); Lambert 2001; Probst 2013; and Whipple 2003.

Eight different PROMs were used across the studies, the most common being the Outcome Questionnaire‐45 (OQ‐45, Lambert 2004), a compound measure of psychiatric symptoms, individual functioning, interpersonal relations, and performance in social roles, which was used in 10 studies (Amble 2014; De Jong 2012; De Jong 2014; Hansson 2013; Hawkins 2004; Lambert 2001; Probst 2013; Simon 2012; Trudeau 2001; Whipple 2003). As well as the OQ‐45 scores, feedback was colour coded to allow quick appreciation of the extent of change during a busy clinic. In three of these studies (Probst 2013; Simon 2012; Whipple 2003) additional interventions were applied in the 'not on‐track' (NOT) groups, giving clinicians specific instructions on whether or not to change treatment according to the results of the outcome measure, and what further treatments to apply, known as the 'Assessment of Signal Cases' (ASC), and 'Clinical Support Tool' (CST) respectively.

Three studies (Murphy 2012; Reese 2009a; and Reese 2009b) used a shorter measure derived from the OQ‐45, known as the Outcome Rating System (ORS, Miller 2003) which includes the same domains as the OQ‐45.

The duration of the treatment period was variable, being determined by the clinician or patient terminating treatment in most studies, and so the duration of follow‐up was also variable, as the final measure of outcome was usually collected at the last treatment session.

Outcomes

Our primary outcome (mean change in symptom score) was reported by all studies, but of the remaining two primary outcomes health‐related quality of life was assessed by only two of the trials (Mathias 1994; Scheidt 2012), and adverse effects (including suicide and self‐harm) were also assessed by only one (Chang 2012). Changes in the management of the CMHD (pharmacological treatment and referral to secondary care) were reported by two studies (Chang 2012; Mathias 1994), and eight studies reported effects on the number of treatment sessions received by participants (Amble 2014; De Jong 2014; Hawkins 2004; Lambert 2001; Reese 2009a; Reese 2009b; Simon 2012; Whipple 2003).

Timing of outcome assessment

All but two of the studies reported research outcomes only in the short‐term, up to six months after baseline assessment. De Jong 2014 and Scheidt 2012 also reported longer‐term outcomes, after 35 weeks and 12 months respectively.

'On track' and 'not on track' participants

In 10 studies (De Jong 2012; De Jong 2014; Hansson 2013; Hawkins 2004; Lambert 2001; Murphy 2012; Reese 2009a; Reese 2009b; Simon 2012; Whipple 2003) results were reported for subgroups of participants according to whether they were identified early in their treatment as 'on‐track' (OT) or 'not on track' (NOT) for a good clinical response. The NOT group were also sometimes labelled as 'at risk', 'signal cases', or 'signal alert cases'.

Excluded studies

After obtaining and assessing the full text of the report we excluded 19 studies. Six studies were non‐randomised, six did not use the PROM for outcome monitoring or did not report patient outcomes, five included an ineligible population, and two involved the use of a PROM as part of a more complex quality improvement programme. See Characteristics of excluded studies for further details.

Ongoing studies