Impact of public release of performance data on the behaviour of healthcare consumers and providers

Abstract

Background

It is becoming increasingly common to publish information about the quality and performance of healthcare organisations and individual professionals. However, we do not know how this information is used, or the extent to which such reporting leads to quality improvement by changing the behaviour of healthcare consumers, providers, and purchasers.

Objectives

To estimate the effects of public release of performance data, from any source, on changing the healthcare utilisation behaviour of healthcare consumers, providers (professionals and organisations), and purchasers of care. In addition, we sought to estimate the effects on healthcare provider performance, patient outcomes, and staff morale.

Search methods

We searched CENTRAL, MEDLINE, Embase, and two trials registers on 26 June 2017. We checked reference lists of all included studies to identify additional studies.

Selection criteria

We searched for randomised or non‐randomised trials, interrupted time series, and controlled before‐after studies of the effects of publicly releasing data regarding any aspect of the performance of healthcare organisations or professionals. Each study had to report at least one main outcome related to selecting or changing care.

Data collection and analysis

Two review authors independently screened studies for eligibility and extracted data. For each study, we extracted data about the target groups (healthcare consumers, healthcare providers, and healthcare purchasers), performance data, main outcomes (choice of healthcare provider, and improvement by means of changes in care), and other outcomes (awareness, attitude, knowledge of performance data, and costs). Given the substantial degree of clinical and methodological heterogeneity between the studies, we presented the findings for each policy in a structured format, but did not undertake a meta‐analysis.

Main results

We included 12 studies that analysed data from more than 7570 providers (e.g. professionals and organisations), and a further 3,333,386 clinical encounters (e.g. patient referrals, prescriptions). We included four cluster‐randomised trials, one cluster‐non‐randomised trial, six interrupted time series studies, and one controlled before‐after study. Eight studies were undertaken in the USA, and one each in Canada, Korea, China, and The Netherlands. Four studies examined the effect of public release of performance data on consumer healthcare choices, and four on improving quality.

There was low‐certainty evidence that public release of performance data may make little or no difference to long‐term healthcare utilisation by healthcare consumers (3 studies; 18,294 insurance plan beneficiaries), or providers (4 studies; 3,000,000 births, and 67 healthcare providers), or to provider performance (1 study; 82 providers). However, there was also low‐certainty evidence to suggest that public release of performance data may slightly improve some patient outcomes (5 studies, 315,092 hospitalisations, and 7503 providers). There was low‐certainty evidence from a single study to suggest that public release of performance data may have differential effects on disadvantaged populations. There was no evidence about effects on healthcare utilisation decisions by purchasers, or adverse effects.

Authors' conclusions

The existing evidence base is inadequate to directly inform policy and practice. Further studies should consider whether public release of performance data can improve patient outcomes, as well as healthcare processes.

Plain language summary

Can public release of performance data in health care influence the behaviour of consumers, healthcare providers, and organisations?

What is the aim of this review?

The aim was to find out whether publicly releasing information about the performance of healthcare providers (such as hospitals and individual professionals) has a measurable influence on changing the behaviour of consumers, providers, and purchasers of care. We also sought to determine whether it affected healthcare provider performance, patient outcomes, and staff morale.

Key messages

Public release of performance data may make little or no difference to healthcare choices (whether made by consumers or by providers), or to provider performance. However, it may slightly improve patient outcomes.

What was studied in this review?

Healthcare providers are increasingly expected to inform the public about how well they perform. However, it is not yet known whether public release of performance data has a measurable influence on patients' choices of healthcare services, or whether it can truly lead to improvements in the quality of health care.

What are the main results of the review?

The review authors searched the literature for studies that evaluated the effects of public release of performance data in health care, and found 12 relevant studies that analysed data from more than 7570 providers, and a further 3,333,386 clinical encounters, such as individual patients.

There is low‐certainty evidence that public release of performance data may make little or no difference to the services that patients choose to access, to the decisions taken by healthcare providers, or to the overall performance of providers. There is low‐certainty evidence suggesting that some patient outcomes may improve slightly after public release of performance data, but this may have less effect on the behaviour of disadvantaged populations. There is no evidence about healthcare utilisation decisions by purchasers, or about adverse effects.

Although some studies were individually well conducted, there were limitations: in particular, the body of evidence varied substantially in terms of setting (for example, the United States or Korea), health condition (for example, heart attack or hip replacement), and the way the information was published (for example, mass mailing or poster). Their findings were also inconsistent, with some reporting changes attributed to public release of data, and others reporting no such change.

How up‐to‐date is this review?

The review authors searched for studies that had been published up to June 2017.

Summary of findings

| People: Insurance plan beneficiaries, birthing mothers, GPs. Settings (countries and clinical settings): United States, Canada, South Korea, Netherlands, China; community, primary care, and hospitals. Intervention: Public release of performance data. Comparison: No public reporting. | |||

| Outcomes | Impact | No of clinical encounters | Certainty of the evidence |

| Changes in healthcare utilisation by consumers | Public release of performance data may make little or no difference to long‐term healthcare utilisation by consumers. However, two studies (one cNRT and one ITS) found that some population subgroups might be influenced by public release of performance data. | 18,294 insurance plan beneficiaries [a] (3: 1 cRT, 1 cNRT, 1 ITS) | ⊕⊕⊝⊝ |

| Changes in healthcare decisions taken by healthcare providers (professionals and organisations) | Public release of performance data may make little or no difference to decisions taken by healthcare professionals. Two studies (2 cRTs) found that some decisions might be affected by public release of performance data. One study (ITS) found that decisions might be influenced by the initial release of data, but that subsequent releases might have less impact. | 3,000,000 births [b] and 67 healthcare providers (4: 2 RTs, 2 ITS) | ⊕⊕⊝⊝ |

| Changes in the healthcare utilisation decisions of purchasers | No studies reported this outcome. | ‐ | ‐ |

| Changes in provider performance | Public release of performance data may make little or no difference to objective measures of provider performance. | 82 healthcare providers (1 cRT) | ⊕⊕⊝⊝ |

| Changes in patient outcome | Public release of performance data may slightly improve patient outcomes. | 315,092 hospitalisations and 7503 healthcare providers (5: 1 RT, 3 ITS, 1 CBA) | ⊕⊕⊝⊝ |

| Adverse effects | No studies reported this outcome. | ‐ | ‐ |

| Impact on equity | Public release of performance data may have a greater effect on provider choice among advantaged populations. | Unknown (1 ITS) | ⊕⊕⊝⊝ |

| EPOC adapted statements for GRADE Working Group grades of evidence † Substantially different = a large enough difference that it might affect a decision | |||

| [a] Number was based only on Farley 2002a and Farley 2002b studies, as the total number of cases analysed in Romano 2004 was unclear. [b] Number of participants in Jang 2011 (3,000,000) estimated from data presented in Chung 2014. [c] Downgraded one level for inconsistency as effect shown by Zhang 2016, but not Jang 2011, Ikkersheim 2013, or Flett 2015. [d] Downgraded two levels for risk of bias, as there was attrition of participating hospitals, evidence of contamination of the intervention across intervention and control hospitals, and blinding was not possible given the nature of the intervention. [e] Downgraded two levels for inconsistency, as there was marked disagreement between studies, with two showing improvements in patient outcome (Tu 2009; Liu 2017), and three showing no such improvements (Rinke 2015; DeVore 2016; Joynt 2016). | |||

| cluster‐randomised trial (cRT); cluster‐non‐randomised trial (cNRT); controlled before‐after (CBA) study; interrupted time series (ITS) study; randomised trial (RT) | |||

Background

It is becoming increasingly common to release information about the performance of healthcare systems into the public domain. In the present era of accountability, cost‐effectiveness, quality improvement, and demand‐driven healthcare systems, decision makers such as governments, regulators, purchaser and provider organisations, health professionals, and consumers of health care are becoming more interested in measuring performance (Smith 2009). Such measurements may be presented in consumer reports, provider profiles, or report cards. It is not always clear who the information users are or what the release of data is expected to achieve. However, it is often assumed that the information will influence the behaviours of various stakeholders, and so ultimately lead to health system improvements (Berwick 2003; Smith 2009; Campanella 2016).

One study has conceptualised public reporting of performance data as (1) supporting patient choice, (2) improving accountability, and (3) allowing providers to benchmark their performance against others (Greenhalgh 2018).

Publication of performance data can support patient choice by helping patients to identify the highest performing providers. However, there are many barriers to patient use of performance data (Canaway 2017). These include the complexity of the performance data (Hibbard 2010), lack of skills to comprehend and use performance data (Hibbard 2007; Canaway 2017; Canaway 2018), and the way data are presented (Damman 2010; Canaway 2017; Canaway 2018). Such barriers might negate the impact of choice, and even reduce equity in health care. Consumers from poorer backgrounds and with lower educational levels may be less able to choose, and less able to afford travel to better performing, but more distant, providers (Aggarwal 2017; Moscelli 2017). There is also evidence that patients often do not use published performance data when making healthcare choices (Greenhalgh 2018).

Improved accountability may be achieved by encouraging providers to focus on quality issues, as they know that performance measures will be published (Fung 2008; Hendriks 2009). This, in turn, may stimulate quality improvements, particularly as providers can see their own performance against that of other clinicians and hospitals. Similarly, patients who preferentially choose high‐quality health care might help drive improvements, by concentrating resources with the best performing providers (Hibbard 2009; Kolstad 2009; Werner 2009).

Other proposed goals for performance measurements have been linked to controlling costs (Berwick 1990; Sirio 1996), regulating the overall healthcare system (Rosenthal 1998; Schut 2005), and influencing the decisions of healthcare purchasers (Brook 1994; Hibbard 1997; Mukamel 1998).

Professional concerns about public release of performance data often relate to the validity of both the performance measures themselves, and comparisons between health providers (Sherman 2013; Kiernan 2015; Burns 2016). There are concerns that failure to adequately adjust for case mix differences might lead to providers that treat higher‐risk patients being labelled as poor performers, or to providers preferentially selecting lower‐risk patients (Wasfy 2015; Burns 2016; Shahian 2017; Wadhera 2017). In healthcare systems where providers charge for their services, the 'better' performing providers might feel empowered to increase charges, thereby restricting access to better care (Mukamel 1998). An additional risk is that publication of performance data may lead to improved reporting, without necessarily improving performance. It has been argued that the care processes that are easiest to measure are often those that are least important in a quality improvement context, and that measuring them can result in the de‐prioritisation of other tasks (Loeb 2004).

Description of the intervention

Public release of performance data is the release of information about the quality of care, so that patients and consumers can better decide what health care they wish to select, and healthcare professionals and organisations can better decide what to provide, improve, or purchase. This mechanism excludes the use of auditing and feedback as a tool for improving professional practice and healthcare outcomes, which has been reviewed elsewhere (Ivers 2012).

How the intervention might work

Public release of performance data may change individual or organisational behaviour through a number of mechanisms. The goal of improving quality of health care can be achieved through a selection pathway or a change pathway (Berwick 2003). Consumers, patients, and purchaser organisations that are in a position to do so, can select the best healthcare professionals and organisations. This type of selection will not change the quality of the delivered care by itself, but it can be a stimulus for quality improvement. Importantly, such changes might be attenuated by the limited choice that patients have in many cases, e.g. in the case of emergencies, the need to access specialised care that is only available in few centres, or because of resource limitations (Aggarwal 2017; Moscelli 2017). In a change pathway, healthcare professionals and organisations can improve performance by changing their work procedures or professional culture, and organisations can make structural changes.

Why it is important to do this review

Some systematic reviews have suggested positive effects of publicly releasing performance data, but included a broad range of study designs (Marshall 2000; Shekelle 2008; Fung 2008; Faber 2009). This study (which is the first update of Ketelaar 2011) aimed to review the evidence for the impact of such interventions using more stringent selection criteria.

Objectives

To estimate the effects of publicly releasing performance data on changing the healthcare utilisation behaviour of healthcare consumers, providers (professionals and organisations), and purchasers of care. In addition, we sought to estimate the effects on healthcare provider performance, patient outcomes, and staff morale.

Methods

Criteria for considering studies for this review

Types of studies

- Randomised trials, including cluster‐randomised trials
- Non‐randomised trials, including cluster‐non‐randomised trials, which use non‐random methods of allocation, such as alternation or allocation by case note number
- Controlled before‐after studies, with at least two intervention sites and two control sites that are chosen for similarity of main outcome measures at baseline
- Interrupted time series studies, with at least three data points before and three data points after the intervention

We included non‐randomised studies in anticipation of a lack of randomised trials, but also because some interventions might not be appropriate for a trial (e.g. randomising participants to not receive important information that might affect their healthcare choices), and others might have a variable effect over time that is best observed by an alternative study design, such as an interrupted time series.

Types of participants

Patients or other healthcare consumers and healthcare providers, including organisations (e.g. hospitals), without any restriction by type of healthcare professional, provider, setting, or purchaser.

Types of interventions

We included interventions that contained the following elements:

- Performance data about any aspect of the healthcare organisations or individuals, including process measures (e.g. waiting times), healthcare outcomes (e.g. mortality), structure measures (e.g. presence of waiting rooms), consumer or patient experiences (e.g. Consumer Assessment of Healthcare Providers and Systems (CAHPS) data), with or without expert or peer‐assessed measures, e.g. certification, accreditation, and quality ratings given by colleagues. Performance data were included if prepared and released by any organisation, such as the government, insurers, consumer organisations, or providers. We excluded studies that did not evaluate publication of performance data concerning process measures, healthcare outcomes, structure measures, consumer or patient experiences, or expert or peer‐assessed measures.
- The release of performance data into the public domain in written or electronic form without regard to any minimum degree of accessibility. For example, this could include a report available in a publicly accessible library, as well as active dissemination directly to consumers through personal mailings.

Comparators

The following comparisons were planned:

- Public release of performance data compared to settings in which data were not released to the public
- Different modes of releasing performance data to the public

Types of outcome measures

Primary outcomes

We planned the primary outcome measures according to two key aims of publicly releasing performance data.

1. Improvement by selection

- Changes in healthcare utilisation by consumers
  - Objective measures of changing consumer behaviour, such as increased use of a specific healthcare provider
- Changes in healthcare decisions taken by healthcare providers (professionals and organisations)
  - Objective measures of changing healthcare provider behaviour, such as changes to drug prescribing
- Changes in the healthcare utilisation decisions of purchasers
  - Objective measures of changing purchaser behaviour, such as increased or decreased funding for services

2. Improvement by changes in care

- Changes in provider performance
  - Objective changes, such as reaching the correct diagnosis or time to treatment
  - Including measures that were made both public and others that were not
- Changes in patient outcome
  - Objective changes, such as mortality or patient‐reported outcome measures
- Changes in staff morale
  - Using a previously validated assessment tool

Secondary outcomes

We considered unintended and adverse effects or harms, and any potential impact on equity (e.g. differential effects between advantaged and disadvantaged populations), and awareness, knowledge, attitude, or costs.

We excluded studies that reported awareness, attitude, perspectives, and knowledge of performance data and cost data in the absence of objective measures of decision behaviour, provider performance or patient outcomes.

Search methods for identification of studies

Electronic searches

We searched the Database of Abstracts of Reviews of Effects (DARE) for primary studies included in related systematic reviews. We searched the following databases on 26 June 2017:

- Cochrane Central Register of Controlled Trials (CENTRAL; 2017, Issue 5) in the Cochrane Library;
- MEDLINE Ovid (including Epub Ahead of Print, In‐Process & Other Non‐Indexed Citations and Versions);
- Embase Ovid.

The Cochrane Effective Practice and Organisation of Care (EPOC) Information Specialist developed the search strategies in consultation with the authors. The search strategies comprise keywords and controlled vocabulary terms. We applied no language or time limits. We searched all databases from inception to 26 June 2017.

Searching other resources

Trial Registries

- International Clinical Trials Registry Platform (ICTRP), World Health Organization (WHO) www.who.int/ictrp/en/ (searched 26 June 2017)
- ClinicalTrials.gov, US National Institutes of Health (NIH) clinicaltrials.gov/ (searched 26 June 2017)

We manually searched the reference lists of all included studies.

We provided all search strategies used in Appendix 1.

Data collection and analysis

Selection of studies

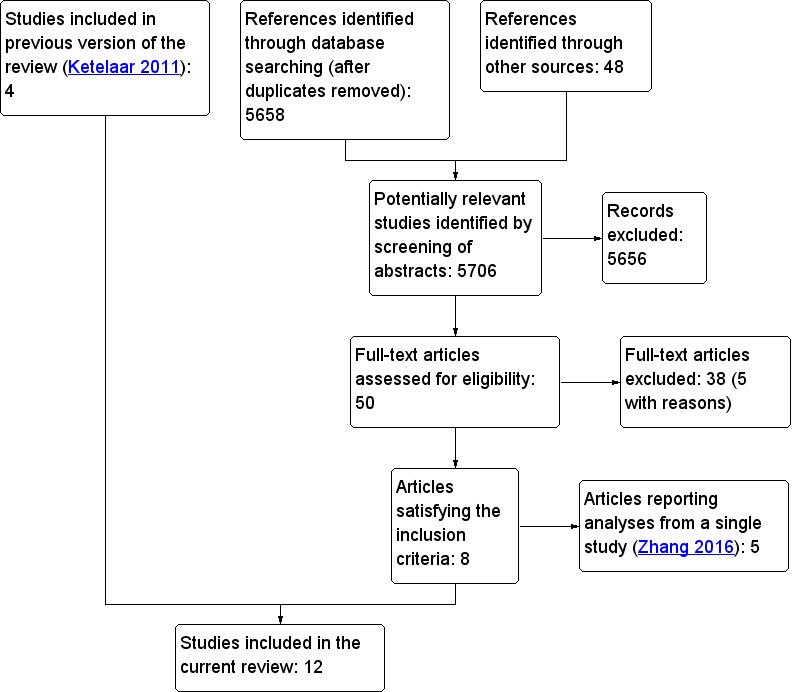

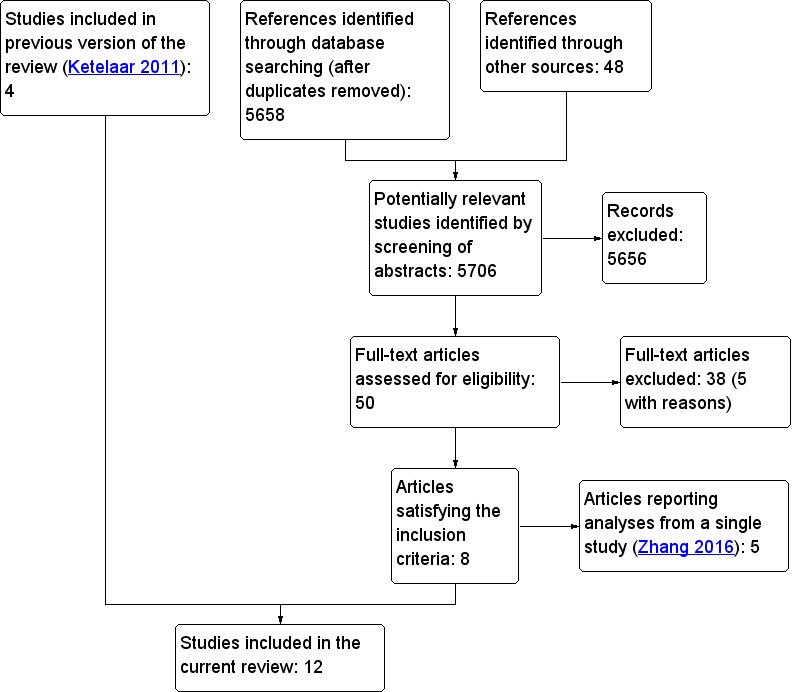

We downloaded all titles and abstracts retrieved in the electronic search to a reference management database. We removed the duplicates, and two review authors (DM, ARD) then independently examined the remaining references. Each review author scored every abstract: ‘0’ for exclusion, ‘1’ for doubtful, and ‘2’ for inclusion. Because each abstract was rated twice, the combined score could range from zero to four. Abstracts with a combined score of zero or one were excluded. Studies with a combined score of three or four were included. The two review authors resolved the fate of studies with a combined score of two by discussion. A third review author (OO) adjudicated on any disagreements that remained unresolved. Figure 1 shows the PRISMA flow diagram that accounts for exclusion of all items retrieved by the search strategy.

Figure 1. Flowchart for study selection
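
The abstract-scoring rule described in this section can be sketched in a few lines of Python. This is only an illustration of the stated thresholds; the function name `screening_decision` and the returned labels are hypothetical and are not taken from the review.

```python
# Hypothetical helper illustrating the abstract-screening rule described above.
VALID_RATINGS = {0, 1, 2}  # 0 = exclusion, 1 = doubtful, 2 = inclusion


def screening_decision(rating_a: int, rating_b: int) -> str:
    """Combine two independent ratings of one abstract into a decision."""
    if rating_a not in VALID_RATINGS or rating_b not in VALID_RATINGS:
        raise ValueError("each rating must be 0, 1, or 2")
    combined = rating_a + rating_b  # ranges from 0 to 4
    if combined <= 1:
        return "exclude"
    if combined == 2:
        # Resolved by discussion; a third author adjudicates if needed.
        return "discuss"
    return "include"


if __name__ == "__main__":
    for pair in [(0, 1), (1, 1), (0, 2), (2, 1)]:
        print(pair, screening_decision(*pair))
```

Note that a combined score of two can arise either from two 'doubtful' ratings or from one 'exclusion' and one 'inclusion' rating; both cases go to discussion.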

Data extraction and management

Two authors (DM, OO) independently extracted the data about the study design, patient and provider characteristics, interventions, outcome measures, and healthcare choices to a form specially designed for our review. Disagreements were resolved by discussion, and we accepted the judgement of a third author (ARD) in the event of continued disagreement.

Assessment of risk of bias in included studies

We assessed risk of bias by applying the guidance from the Cochrane Handbook for Systematic Reviews of Interventions, which recommends using the following items: (i) adequate sequence generation, (ii) concealment of allocation, (iii) blinding, (iv) incomplete outcome data, (v) selective reporting, and (vi) no risk of bias from other sources (Higgins 2011). We supplemented this guidance with three additional criteria that are specified by the Cochrane Effective Practice and Organisation of Care Group: (vii) baseline characteristic similarity, (viii) baseline outcome similarity, and (ix) adequate protection against contamination (EPOC 2013). We used these nine standard criteria for randomised trials, non‐randomised trials, and controlled before‐after studies. We used seven criteria for interrupted time series studies, and applied these as recommended by EPOC 2013: (i) the intervention is independent of other changes, (ii) the shape of the intervention effect is pre‐specified, (iii) the intervention is unlikely to affect data collection, (iv) knowledge of the allocated interventions is adequately prevented during the study, (v) incomplete outcome data are adequately addressed, (vi) reporting is not selective, and (vii) there is no risk of bias from other sources. Two review authors (DM, ARD) independently reached judgements about risk of bias using the guidance provided by Higgins 2011 and EPOC 2013, and resolved disagreements by discussion. A third review author (OO) dealt with any disagreements that the two review authors could not resolve.

Measures of treatment effect

In order to standardise reporting of effect sizes, we re‐analysed data from individual studies to ensure that randomised trials and controlled before‐after studies could be reported as relative effects. Interrupted time series were reported as change in level and change in slope. We described the methods used for re‐analysing and presenting these data in Data synthesis.

Unit of analysis issues

We noted whether randomised trials randomised patients or healthcare providers. If analysis did not allow for clustering of patients within healthcare providers, we recorded a unit of analysis error, because such analyses tend to overestimate the precision of the treatment effect. In the event of a unit of analysis error and insufficient data to account for clustering, we did not report P values or confidence intervals.

Dealing with missing data

In the event of important missing data, we contacted the authors of individual studies. As described in Data synthesis, we electronically extracted missing interrupted time series data that were presented in graphs.

Assessment of heterogeneity

There were substantial differences between the policies and interventions described. There were also differences between the settings, in terms of culture and health system delivery. Although some studies evaluated similar interventions, there were still important clinical and methodological differences. As statistical tests for heterogeneity lack power when few studies are included, we elected not to calculate average effects across studies, or to estimate statistical heterogeneity (Schroll 2011).

Assessment of reporting biases

We did not present funnel plots as we did not undertake a meta‐analysis and there were not more than 10 studies contributing to any individual analysis (Higgins 2011).

Data synthesis

We followed the EPOC recommendations with regard to analysing data from individual studies and meta‐analysis (EPOC 2013). We expressed the findings from controlled before‐after studies as relative effects. To achieve this, we reported continuous variables as relative change in outcome measures, adjusted for baseline differences. We undertook absolute difference‐in‐difference analyses and expressed them relative to the postintervention control group value using: ((postintervention intervention group ‐ postintervention control group) ‐ (pre‐intervention intervention group ‐ pre‐intervention control group)) / postintervention control group. For ease of comparison with the findings of controlled before‐after studies, we reported the findings of randomised and non‐randomised trials using the same difference‐in‐difference analysis.
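
As a minimal sketch of the relative difference‐in‐difference calculation described above, the following Python function applies the formula to four summary values. The function name, argument names, and the example numbers are hypothetical and serve only to illustrate the arithmetic; they are not data from any included study.

```python
def relative_difference_in_difference(
    pre_intervention: float,
    post_intervention: float,
    pre_control: float,
    post_control: float,
) -> float:
    """Difference-in-difference expressed relative to the
    postintervention control group value."""
    if post_control == 0:
        raise ValueError("postintervention control value must be non-zero")
    post_gap = post_intervention - post_control  # gap after the intervention
    pre_gap = pre_intervention - pre_control     # baseline gap
    return (post_gap - pre_gap) / post_control


if __name__ == "__main__":
    # Hypothetical example: both groups start at 40; the intervention
    # group rises to 50 and the control group to 45.
    effect = relative_difference_in_difference(40.0, 50.0, 40.0, 45.0)
    print(f"relative effect: {effect:.3f}")
```

Dividing by the postintervention control value turns the absolute difference into a proportion, which is what allows results measured on different scales to be read side by side.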

Interrupted time series are typically reported using regression analysis, such as autoregressive integrated moving average (ARIMA) analysis. Pursuant to the EPOC recommendations, we present outcomes along two dimensions: change in level and change in slope (EPOC 2013). The former represents the immediate effect of the intervention as measured by the difference between the fitted value for the first post‐intervention time point and the predicted outcome at the same point, based only on an extrapolation of the pre‐intervention slope. Change in slope is an expression of any longer‐term effect of the intervention. We decided to use a similar method to the change in level, but a later follow‐up period, e.g. six months.

In the event that appropriate interrupted time series analyses were not reported but that data were presented graphically, we read values from graphs using Plot Digitizer v2.6.8 (Huwaldt 2004). We extracted 'actual' data points from all studies and only planned to use lines of best fit in the event that true points were not available. A segmented time series model (Y(t) = B0 + B1*preslope + B2*postslope + B3* intervention + e(t)) was specified, in which Y(t) was the outcome in month t. Preslope is a continuous variable that indicates time from the beginning of the study until the end of the pre‐intervention phase, after which it was coded as a constant. Postslope is assigned the value 0 until after the intervention takes place, after which it is coded sequentially from 1 (i.e. 1, 2, 3). Intervention is assigned the value 0 pre‐intervention and 1 in the postintervention time period. In this model, B1 estimates the pre‐intervention slope, B2 the postintervention slope, and B3 the change in level, i.e. the difference between the first postintervention time point and the extrapolated first postintervention time point had the pre‐intervention line continued into the postintervention period. The difference in slope was determined using B2 ‐ B1.
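
The segmented model above can be illustrated with a small, dependency‐free Python sketch. It builds the preslope, postslope, and intervention variables as coded in the text, fits the model by ordinary least squares (solving the normal equations, which is a choice made here for self‐containment and not necessarily the software the review authors used), and reports the slope difference as B2 ‐ B1. The change in level is computed directly from its definition, as the fitted value at the first postintervention point minus the value extrapolated from the pre‐intervention line. All function names and the example series are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_segmented(y, n_pre):
    """Fit Y(t) = B0 + B1*preslope + B2*postslope + B3*intervention."""
    rows = []
    for i in range(len(y)):
        t = i + 1
        post = t > n_pre
        preslope = min(t, n_pre)               # held constant after the intervention
        postslope = t - n_pre if post else 0   # 0 before, then 1, 2, 3, ...
        rows.append([1.0, float(preslope), float(postslope), 1.0 if post else 0.0])
    k = 4
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    b0, b1, b2, b3 = solve_linear_system(xtx, xty)
    fitted_first_post = b0 + b1 * n_pre + b2 * 1 + b3
    extrapolated_first_post = b0 + b1 * (n_pre + 1)
    return {
        "coefficients": (b0, b1, b2, b3),
        "slope_change": b2 - b1,
        "level_change": fitted_first_post - extrapolated_first_post,
    }


if __name__ == "__main__":
    # Hypothetical noise-free monthly series: six points before, six after.
    series = [12, 14, 16, 18, 20, 22, 29, 32, 35, 38, 41, 44]
    print(fit_segmented(series, n_pre=6))
```

The sketch needs at least a few points on each side of the intervention for the four coefficients to be identifiable, which is in keeping with the review's requirement of three data points before and three after.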

We reported effects at 3, 6, 9, 12, and 24 months postintervention when the data were available. Given the substantial degree of clinical and methodological heterogeneity between the studies, we presented the findings for each policy in a structured format, but did not undertake a meta‐analysis.

Summary of findings

We summarised the findings of the main intervention comparisons in a 'Summary of findings' table to illustrate the certainty of the evidence. One review author (DM) categorised the certainty of the evidence as high, moderate, low, or very low, using the five GRADE domains (study limitations, consistency of effect, imprecision, indirectness, and publication bias) (Guyatt 2011). We undertook this pursuant to Chapter 12 of the Cochrane Handbook for Systematic Reviews of Interventions and worksheets created by EPOC (Higgins 2011; EPOC 2013). All other co‐authors checked these judgements, and resolved disagreements through discussion. When ratings were up‐ or down‐graded, we justified these decisions using footnotes in Appendix 2 and in the 'Summary of findings' table for the main comparison. Standardised statements for reporting effects and certainty of evidence were selected, based on the GRADE assessments for each outcome, and used throughout the review (EPOC 2017). The seven outcomes reported in the 'Summary of findings' table for the main comparison are:

- Changes in healthcare utilisation by consumers
- Changes in healthcare decisions taken by healthcare providers (professionals and organisations)
- Changes in the healthcare utilisation decisions by healthcare purchasers
- Changes in provider performance
- Changes in patient outcome
- Adverse effects
- Impact on equity

Subgroup analysis and investigation of heterogeneity

As described in Data synthesis, we presented the findings of individual studies in a structured format rather than attempting meta‐analysis, given the substantial heterogeneity between the studies. Therefore, it was not possible to undertake subgroup analyses.

Sensitivity analysis

In the absence of a formal meta‐analysis, we did not undertake any sensitivity analyses.

Results

Description of studies

The included studies are summarised in Table 1 and described fully in Characteristics of included studies. A number of studies that narrowly failed to satisfy our selection criteria are described in Characteristics of excluded studies.

| Study details [a] | | | Improvement by selection | | | Improvement by changes in care | | | Data available |

| Study | Design, setting, and participants | Intervention | Consumers | Providers | Purchasers | Provider performance | Patient outcome | Staff morale | |

| | cRT; USA; 13,077 insurance plan beneficiaries | Consumer Assessment of Healthcare Providers and Systems (CAHPS) report | X | ‐ | ‐ | ‐ | ‐ | ‐ | X |

| | cNRT; USA; 5217 insurance plan beneficiaries | Consumer Assessment of Healthcare Providers and Systems (CAHPS) report | X | ‐ | ‐ | ‐ | ‐ | ‐ | X |

| | ITS; USA; ‐ | Report cards with risk‐adjusted patient outcomes produced by state agencies | X | ‐ | ‐ | ‐ | ‐ | ‐ | [b] |

| | ITS; USA; 21 hospitals | State‐based mandatory public reporting of healthcare‐associated infections | ‐ | X | ‐ | ‐ | ‐ | ‐ | X |

| | CBA; USA; 3207 hospitals | Mandatory public reporting of healthcare‐associated infections | ‐ | ‐ | ‐ | ‐ | X | ‐ | X |

| | ITS; USA; 315,092 hospitalisations | Online reporting of risk‐adjusted 30‐day re‐admission rates (Hospital Compare) | ‐ | ‐ | ‐ | ‐ | X | ‐ | [b] |

| | ITS; USA; 3970 hospitals | Online reporting of risk‐adjusted 30‐day mortality rates (Hospital Compare) | ‐ | ‐ | ‐ | ‐ | X | ‐ | X |

| | ITS; USA; 244 hospitals | Mandatory public reporting of healthcare‐associated infections | ‐ | ‐ | ‐ | ‐ | X | ‐ | ‐ [c] |

| | cRT; Canada; 82 hospital organisations | Report cards with risk‐adjusted patient outcomes and a press conference | ‐ | ‐ | ‐ | X | X | ‐ | X |

| | ITS; South Korea; 3,000,000 live births | Repeated public release of information (online, press releases) on hospital caesarean rates | ‐ | X | ‐ | ‐ | ‐ | ‐ | X |

| | cRT; The Netherlands; 26 general practitioners | Report cards with risk‐adjusted patient outcomes sent to GPs for discussion with patients | ‐ | X | ‐ | ‐ | ‐ | ‐ | [b] |

| | cRT; China; 20 primary care providers | Public display of prescription information on outpatient department bulletin boards | ‐ | X | ‐ | ‐ | ‐ | ‐ | X |

controlled before‐after (CBA) study; cluster‐randomised trial (cRT); cluster‐non‐randomised trial (cNRT); Consumer Assessment of Healthcare Providers and Systems (CAHPS); general practitioners (GPs); interrupted time series (ITS) study

Column headers: changes in healthcare utilisation by consumers (Consumers); changes in healthcare decisions taken by healthcare providers, i.e. professionals and organisations (Providers); changes in healthcare decisions of purchasers (Purchasers); changes in provider performance (Provider performance); changes in patient outcome (Patient outcome); changes in staff morale (Staff morale); impact on equity (Equity)

Order of studies: US studies are listed first in chronological order, followed by studies from other countries in chronological order

a Studies grouped by intervention, i.e. mode of public release of performance data

b No change in slope and so re‐analysis of the ITS data was uninformative

c Presented derived data (e.g. outputs of regression models) that were insufficient for re‐analysis

Results of the search

The electronic searches for this update retrieved 5658 individual items; a further 48 were identified from other sources, e.g. manual searching of reference lists. We excluded 5656 items because the titles and abstracts did not meet our inclusion criteria. We retrieved the full‐text versions of the remaining 50 articles; 38 of these did not satisfy the inclusion criteria (five are listed with reasons in Characteristics of excluded studies). Five of the remaining 16 articles reported separate analyses of a single cluster randomised trial, and so we treated them as a single study for the purposes of this review (Zhang 2016). Therefore, we included 12 studies in the review. As described in Data synthesis, we did not undertake formal meta‐analyses due to substantial inter‐study heterogeneity. We presented the study flow chart in Figure 1 (Moher 1999).

Included studies

We included 12 studies that comprised more than 7570 providers (e.g. professionals and organisations) and a further 3,333,386 clinical encounters (e.g. patient referrals, prescriptions). There were four cluster randomised trials (Farley 2002a; Tu 2009; Ikkersheim 2013; Zhang 2016), one cluster‐non‐randomised trial (Farley 2002b), six interrupted time series studies (Romano 2004; Jang 2011; Flett 2015; DeVore 2016; Joynt 2016; Liu 2017), and one controlled before‐after study (Rinke 2015). Eight were conducted in the USA (Farley 2002a; Farley 2002b; Romano 2004; Flett 2015; Rinke 2015; DeVore 2016; Joynt 2016; Liu 2017), and one each in Canada (Tu 2009), the Netherlands (Ikkersheim 2013), Korea (Jang 2011), and China (Zhang 2016).
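The two totals quoted above appear to be the sums of the participant counts listed in Table 1. The following sketch reproduces that arithmetic; matching each count to a study name is an assumption based on the study descriptions in this section, and Romano 2004 is omitted because no count is given for it.

```python
# Participant counts as listed in Table 1 (study labels matched from the text;
# this mapping is an assumption, not stated explicitly in the table).
providers = {
    "Flett 2015": 21,        # hospitals
    "Rinke 2015": 3207,      # hospitals
    "Joynt 2016": 3970,      # hospitals
    "Liu 2017": 244,         # hospitals
    "Tu 2009": 82,           # hospital organisations
    "Ikkersheim 2013": 26,   # general practitioners
    "Zhang 2016": 20,        # primary care providers
}
encounters = {
    "Farley 2002a": 13_077,  # insurance plan beneficiaries
    "Farley 2002b": 5_217,   # insurance plan beneficiaries
    "DeVore 2016": 315_092,  # hospitalisations
    "Jang 2011": 3_000_000,  # live births
}

total_providers = sum(providers.values())
total_encounters = sum(encounters.values())
print(total_providers)   # 7570
print(total_encounters)  # 3333386
```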

Three studies focused on changes in the healthcare utilisation decisions of consumers (Farley 2002a; Farley 2002b; Romano 2004), four of providers (Jang 2011; Ikkersheim 2013; Flett 2015; Zhang 2016), and none of purchasers. Two studies reported data on changes to provider performance (Tu 2009; Rinke 2015), five on patient outcomes (Tu 2009; Flett 2015; DeVore 2016; Joynt 2016; Liu 2017), and none on staff morale. No study explicitly reported adverse events as a separate outcome, or gave particular consideration to effects on equitable health care.

Three US studies examined the effect of a single suite of interventions (i.e. laws mandating public reporting of healthcare‐associated infections in the United States), which were introduced by some state legislatures between 2006 and 2009 (Flett 2015; Rinke 2015; Liu 2017). Liu 2017 examined the effect of mandatory reporting on central line‐associated bloodstream infection rates in adult intensive care units. They undertook an interrupted time series study using data from hospitals contributing to the National Healthcare Safety Network between 2006 and 2012. States that did not introduce mandatory reporting were used to control for secular trends through a difference‐in‐difference analysis. The other two studies focused their analyses on healthcare‐associated infections in paediatric inpatients (Flett 2015; Rinke 2015). Rinke 2015 sought to determine whether mandatory central line‐associated bloodstream infection public reporting was associated with a reduction in a specific paediatric safety indicator (PDI12, i.e. selected infections due to medical care), which is defined using diagnosis codes on hospital discharge. They undertook a controlled before‐after study using the Kids' Inpatient Database, which is one of a suite of administrative healthcare databases coordinated by the Healthcare Cost and Utilization Project at the US Agency for Healthcare Research and Quality. Flett 2015 did not examine patient outcomes, but aimed to test the hypothesis that clinicians in hospitals that are required to report central line‐associated bloodstream infections would modify their behaviour by sending fewer blood culture tests or prescribing longer courses of antibiotics. They undertook an interrupted time series using data from the Pediatric Health Information System, which is a collaborative venture between children's hospitals that is used for clinical audit and quality improvement. 
The data were analysed using generalised linear mixed‐effects models with auto‐correlated residuals to compare adjusted rate ratios for central line‐associated bloodstream infections before and after implementation of mandatory reporting laws.
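The difference‐in‐difference idea mentioned above for Liu 2017 can be illustrated with a minimal two‐group, two‐period sketch. This is not the authors' model, which is not described in detail here; the function name and the infection rates are hypothetical and chosen only for illustration.

```python
def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Change in the reporting group minus change in the comparison group."""
    return (treated_post - treated_pre) - (control_post - control_pre)

# Hypothetical infection rates per 1000 central line-days (illustrative only).
did = difference_in_differences(
    treated_pre=2.0, treated_post=1.5,   # states with mandatory reporting
    control_pre=2.0, control_post=1.75,  # states without mandatory reporting
)
print(did)  # -0.25
```

In this made‐up example, rates fell in both groups, and only the extra fall of 0.25 in the reporting states would be attributed to the intervention; the secular trend shared by both groups is subtracted out.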

Two US studies examined the effect of providing information about plan performance on choice of insurance plan by new Medicaid beneficiaries (Farley 2002a; Farley 2002b). Farley 2002a was a cluster‐randomised trial, using data from new Medicaid beneficiaries in Iowa. Under Iowa Medicaid, new enrollees were automatically assigned, by default, to one of four private health maintenance organisations or the Medicaid primary care case management programme. They were sent a packet of information about their specific health plan and benefits under Medicaid. The control group received the standard packet of information and the intervention group received this, plus an additional report that described the performance of each health plan, along domains such as 'overall health care rating', and 'personal doctor rating'. The authors used multinomial logistic regression to model the odds of new beneficiaries electing to continue with or change their allocated plan. In Farley 2002b, the same author team undertook a cluster‐non‐randomised trial to evaluate the same performance reports on beneficiary choice within the New Jersey Medicaid programme. The study design was very similar to Farley 2002a, in terms of control and intervention groups, although this was technically a non‐randomised trial, because participants were allocated according to the last digit of their Medicaid case ID number. The objective outcome measure reported was the effect of performance reports on Medicaid beneficiary plan choices.

The other three US studies each examined the impact of different public reporting initiatives on patient outcomes (Romano 2004; DeVore 2016; Joynt 2016). Two used Medicare claims data, and so confined their analyses to the Medicare population, i.e. those aged 65 years or older (DeVore 2016; Joynt 2016). DeVore 2016 undertook an interrupted time series to study the effect on 30‐day re‐admissions of publicly reporting risk‐adjusted hospital re‐admission rates for patients with selected conditions (acute myocardial infarction, heart failure, and pneumonia) on the Hospital Compare website. Joynt 2016 reported an interrupted time series with a similar study design to DeVore 2016, but examined the impact on mortality rates of public reporting of mortality (for patients with the same three selected conditions) on the Hospital Compare website. They used hierarchical modelling to compare 30‐day mortality in the pre‐ and postreporting periods. The final US study presented an interrupted time series based on the California Hospital Outcomes Project and the Cardiac Surgery Reporting System in New York (Romano 2004). This study evaluated the effects of publishing report cards on trends in hospital volumes for specific diagnoses, i.e. coronary artery bypass surgery mortality in New York, and both acute myocardial infarction and postdiscectomy complications in California. The interrupted time series examined hospital case volumes, determined using administrative data sets in each state (the California Patient Discharge Data Set and the New York Statewide Planning and Research Cooperative System) before and after the publication of reports that identified hospitals as performance outliers. These reports were published by the California Hospital Outcomes Project and the New York Cardiac Surgery Reporting System.

There were three cluster‐randomised trials outside the US; one each in Canada (Tu 2009), the Netherlands (Ikkersheim 2013), and China (Zhang 2016). In Canada, Tu 2009 evaluated the public release of performance data about 12 care quality indicators for acute myocardial infarction and six for congestive heart failure in 86 hospitals. Participating hospitals were randomised to either early (January 2004) or delayed (September 2005) publication of performance report cards. The performance data were provided to individual hospitals, and then publicised both online and through popular media, with coverage achieved through television, radio, and newspapers. The outcomes reported by this study were any change in hospital performance, measured using the 18 care quality indicators. The cluster‐randomised trial in the Netherlands randomised 26 GPs to receive either individualised hospital report cards (65.4%), or to a control group (34.6%) that did not receive this information (Ikkersheim 2013). The study then captured individual patient referrals (for breast cancer, cataract surgery, and hip or knee replacement) to one of four hospitals in the region, using an electronic referral system. Zhang 2016 undertook a cluster‐randomised trial in Hubei Province, south central China. They matched 20 primary care providers within a single city, based on similar organisational characteristics. In this matched‐pair cluster‐randomised trial, half the providers were randomised to public reporting of injection prescribing, by way of league tables that were posted on outpatient bulletin boards. Performance data were also disseminated to both local health authorities and the leaders of hospitals in the intervention group. The outcomes were the percentage of prescriptions requiring antibiotics, percentage requiring intravenous antibiotics, and the average expenditure per prescription.

Finally, a single interrupted time series study was undertaken in Seoul, South Korea by Jang 2011. In this study, the intervention was public release of data (online and in media releases) about caesarean section rates for 1194 institutions across the country. These rates were publicised as part of a series of public releases, which were not described in detail. The outcome was change in risk‐adjusted institutional caesarean section rates over the whole study period, and after each public release of data.

Excluded studies

In total, we excluded 38 studies after assessing full copies of the papers. The main reasons for exclusion were: ineligible study design (24); interventions did not contain process measures, health care outcomes, structure measures, consumer or patient experiences, expert‐ or peer‐assessed measures (8); no objective outcome data were recorded or available for one or both arms (3); and the study was about hypothetical choices (3). We listed selected studies that readers might reasonably have expected to find included in this review in the 'Characteristics of excluded studies' table.

Risk of bias in included studies

The included studies were rated on different risk of bias items as appropriate for each study design (randomised trial, non‐randomised trial, controlled before‐after, or interrupted time series). We described this in Assessment of risk of bias in included studies, but in summary, we rated randomised trials, non‐randomised trials, and controlled before‐after studies using the same nine criteria, and used seven criteria for interrupted time series studies. We showed the results of these risk of bias assessments in the 'Characteristics of included studies' tables and summarised them in both Figure 2 and Figure 3.

Risk of bias graph: review authors' judgements about each risk of bias item, presented as percentages across all included studies. The blank spaces represent risk of bias criteria that were not applicable to the study design.

Risk of bias summary: review authors' judgements about each risk of bias item for each included study. The blank cells represent risk of bias criteria that were not applicable to the study design.

Allocation

The extent of possible selection bias due to the random sequence generation process was unclear in two studies, because the precise method of random sequence generation was not described (Farley 2002a; Ikkersheim 2013). Two studies were at high risk, as Rinke 2015 was a controlled before‐after study, and Farley 2002b was a cluster‐non‐randomised trial, and so used a non‐random method of sequence generation. We judged risk of selection bias as low for Zhang 2016 who 'flipped a coin to randomly assign' paired primary care institutions, and Tu 2009 who employed a dedicated study statistician to implement a stratified randomisation process.

We made the same judgements for allocation concealment as for random sequence generation, except for Zhang 2016, which was judged to be at high risk for allocation concealment given their use of a coin flip.

Blinding

Although hospitals and healthcare providers could not be blinded to their allocated groups, individual participants were unlikely to have been aware that a study was taking place. No study explicitly contacted individual patients or members of the public to inform them about the research question, intervention, or measured outcomes. For this reason, two studies were considered to be at unclear risk, as it was not stated whether individuals in those trials were informed that a study was taking place (Farley 2002a; Farley 2002b). Four studies were at high risk, because providers were likely to know that a study was taking place, and it was not possible to blind them to their group allocation (Tu 2009; Ikkersheim 2013; Rinke 2015; Zhang 2016).

Incomplete outcome data

We judged 11/12 included studies to be at low risk of attrition bias, because these studies based their outcomes on routinely collected administrative data, e.g. electronic prescriptions or hospital referrals. Only Tu 2009 was judged to be at high risk of bias, because five randomised hospitals withdrew due to resource constraints; one after randomisation and four during follow‐up. Although only a small proportion (5.8%) of the hospitals randomised in this cluster‐randomised trial withdrew, it is plausible that poorly performing institutions would be more likely to withdraw than those with average or high performance.

Selective reporting

Only Tu 2009 registered a trial protocol with ClinicalTrials.gov (NCT00187460) in advance of undertaking the study. All outcomes described in this protocol were presented in the final report, which also included all‐cause mortality as an additional outcome. Therefore, we judged it to be at low risk of reporting bias. Although Zhang 2016 presented a trial protocol, this was published in March 2015, eighteen months after the intervention began in October 2013. None of the remaining ten studies registered a protocol in advance of randomisation (randomised and non‐randomised trials) or data analysis (interrupted time series and controlled before‐after studies).

Other potential sources of bias

As outlined in the 'Assessment of risk of bias in included studies' section, the four cluster‐randomised trials (Farley 2002a; Tu 2009; Ikkersheim 2013; Zhang 2016), cluster‐non‐randomised trial (Farley 2002b), and controlled before‐after study (Rinke 2015), were assessed for bias in terms of baseline characteristics, baseline outcome measures, and protection against contamination. In addition, we assessed these sources of bias for the six interrupted time series studies: intervention is independent of other changes, shape of the intervention is prespecified, intervention is unlikely to affect data collection, and knowledge of the allocated interventions is adequately prevented during the study (Romano 2004; Jang 2011; Flett 2015; DeVore 2016; Joynt 2016; Liu 2017).

Baseline characteristics

We considered four studies to be at low risk of bias for baseline characteristics because the intervention and control groups were shown to be similar (Tu 2009; Ikkersheim 2013; Rinke 2015; Zhang 2016). Two studies did not report baseline characteristics, and we considered them to be at unclear risk of bias (Farley 2002a; Farley 2002b).

Baseline outcome measures

All six controlled studies (the four cluster‐randomised trials, the cluster‐non‐randomised trial, and the controlled before‐after study) presented baseline outcome measures that differed between the intervention and control groups. However, all six also used appropriate statistical techniques, including multivariable regression (Farley 2002b; Tu 2009; Ikkersheim 2013; Rinke 2015; Zhang 2016), and difference‐in‐differences analyses (Tu 2009; Rinke 2015; Zhang 2016) to account for differences in baseline between the groups. They were therefore all considered to be at low risk of bias from this source.

Protection against contamination

We judged three studies to be at low risk of contamination, either because they randomised healthcare professionals (Ikkersheim 2013), or because their intervention was sent by post, and so unlikely to reach individuals in the control group (Farley 2002a; Farley 2002b).

We assessed two studies to be at high risk. The authors of Tu 2009 stated that several hospitals in the delayed feedback group reported that they also initiated quality improvement activities after becoming aware that performance measures were due to be released publicly. As this was not quantified, it was difficult to determine the degree to which hospitals in the control group modified their activities in anticipation of having to publicly release performance data. We also assessed Rinke 2015 at high risk because hospitals in states that did not mandate healthcare‐associated infection reporting might still have modified their practice, given that such laws were being introduced elsewhere in the USA.

We judged Zhang 2016 to be at unclear risk, because no specific efforts were taken to protect against contamination. However, it is not certain that their intervention (posters on bulletin boards in outpatient areas of intervention organisations) would necessarily have influenced behaviour in control institutions.

Intervention independent of other changes

In three interrupted time series studies, it was unclear whether the intervention occurred independently of other changes over time, or whether the outcome was influenced by other confounding variables and events during the study period (Romano 2004; Jang 2011; DeVore 2016). We judged the remaining three interrupted time series studies to be at low risk of bias. In the two studies that examined public reporting of healthcare‐associated infections, this was because they analysed data from a number of states that introduced legislation at different times (Flett 2015; Liu 2017). We judged Joynt 2016 to be at low risk, because they did not demonstrate a substantial change in the postintervention period, so this was unlikely to be attributable to other factors.

Shape of intervention effect prespecified

Two interrupted time series studies prespecified the shape of the intervention effect, so we assessed both to be at low risk of bias in this domain (Romano 2004; Jang 2011). The remaining four interrupted time series studies did not, and we judged them to be at high risk.

Knowledge of the allocated interventions adequately prevented during the study

All six interrupted time series studies reported objective outcome measures, so we judged them to be at low risk of bias for this domain.

Intervention unlikely to affect data collection

The intervention was unlikely to affect data collection in any of the six interrupted time series studies, as all were undertaken retrospectively, using routinely collected data. In all cases, the methods of data collection were the same before and after the intervention. Therefore, we judged all six studies to be at low risk of bias.

Effects of interventions

The studies included in this review used a wide range of different interventions, which are described in the 'Characteristics of included studies' tables. We presented the effect sizes reported for each outcome and study in Table 2, Table 3, Table 4, and Table 5, together with the relative effects, for ease of comparison between different study designs and outcome measures. We also provided a 'Summary of findings' table, together with our decisions on how we determined levels of certainty (summary of findings Table for the main comparison; Appendix 2).

| Intervention | Outcome | Study | Type of study | Absolute post‐intervention difference | Absolute pre‐intervention difference | Post‐intervention level in control group | Relative effect |
|---|---|---|---|---|---|---|---|
| Dissemination of consumer reports directly to consumers | Assigned to high‐rated HMO (2 choices) | Farley 2002a | cRT | 1.5 | 0 | 15.9 | 0.0943 |
| | Assigned to low‐rated HMO (2 options) | Farley 2002a | cRT | 0.4 | 0 | 25 | 0.0160 |
| | Assigned to high‐rated HMO (1 option) | Farley 2002a | cRT | 1.3 | 0 | 29.5 | 0.0441 |
| | Assigned to low‐rated HMO (1 option) | Farley 2002a | cRT | 0.1 | 0 | 23.7 | 0.0042 |
| | Proportion choosing plan | Farley 2002b | cNRT | 0.01 | 0 | 0.69 | 0.0145 |

cluster‐randomised trial (cRT); cluster‐non‐randomised trial (cNRT); health maintenance organization (HMO)
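The 'Relative effect' column is not defined in this section. As a sketch under that caveat, the values in the table above are reproduced if the relative effect is taken to be the post‐intervention difference minus the pre‐intervention difference, divided by the post‐intervention level in the control group. The function name below is hypothetical.

```python
def relative_effect(post_diff, pre_diff, control_post_level):
    """(post-intervention difference - pre-intervention difference) / control level.

    Assumed definition; it reproduces the tabulated values but is not stated
    explicitly in the text.
    """
    return (post_diff - pre_diff) / control_post_level

# cRT row: assigned to high-rated HMO (2 choices).
high_rated = round(relative_effect(1.5, 0, 15.9), 4)
# cNRT row: proportion choosing plan.
plan_choice = round(relative_effect(0.01, 0, 0.69), 4)
print(high_rated)   # 0.0943
print(plan_choice)  # 0.0145
```

Dividing by the control group level puts outcomes measured on different scales (percentages, proportions, rates) on a common footing, which is what allows comparison across study designs.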

| Intervention | Outcome | Study | Type of study | Absolute post‐intervention difference | Absolute pre‐intervention difference | Post‐intervention level in control group | Relative effect |
|---|---|---|---|---|---|---|---|
| Public reporting of injection prescribing rates in outpatient areas | Average expenditure per prescription | Zhang 2016 | cRT | 3.4 | 2.2 | 41.2 | 0.0291 |
| | Percentage of prescriptions requiring antibiotics | Zhang 2016 | cRT | 4.6 | 6.1 | 62.8 | ‐0.0249 |
| | Percentage of prescriptions requiring combined antibiotics | Zhang 2016 | cRT | 2.1 | 4.1 | 18.6 | ‐0.1083 |
| | Percentage of prescriptions requiring injections | Zhang 2016 | cRT | 9.0 | 13.2 | 64.9 | ‐0.0643 |
| | Average expenditure per prescription | Zhang 2016 | cRT | 7.2 | 6.9 | 44.3 | 0.0070 |
| Mandatory public reporting of healthcare‐associated infections | Pediatric quality indicator per 1000 eligible discharges | Rinke 2015 | CBA | 0.6 | 0.5 | 1.0 | 0.1000 |

| Intervention | Outcome | Study | Type of study | Absolute level effect (95% CI) | Relative change at 3 months (95% CI) | Relative change at 6 months (95% CI) | Relative change at 9 months (95% CI) | Relative change at 12 months (95% CI) | Relative change at 24 months (95% CI) |
|---|---|---|---|---|---|---|---|---|---|
| Repeated public release of hospital caesarean section rates | Caesarean section rate | Jang 2011 | ITS | ‐0.52 (‐0.77 to ‐0.26) | ‐0.04 (‐1.23 to 1.18) | ‐1.49 (‐2.55 to ‐0.40) | ‐2.92 (‐4.50 to 1.30) | ‐4.34 (‐6.61 to ‐1.95) | ‐ |
| Mandatory public reporting of healthcare‐associated infections | PICU blood cultures | Flett 2015 | ITS | 7.48 (1.09 to 13.87) | 6.21 (‐2.84 to 17.10) | 9.90 (‐0.45 to 22.64) | 13.87 (1.42 to 29.82) | 18.17 (2.90 to 38.77) | 22.87 (4.11 to 49.86) |
| | PICU antibiotics | Flett 2015 | ITS | 7.29 (4.46 to 10.12) | ‐0.11 (‐2.03 to 1.89) | 1.61 (‐0.45 to 3.75) | 3.36 (0.96 to 5.87) | 5.15 (2.26 to 8.20) | 6.98 (2.50 to 10.70) |
| | NICU antibiotics | Flett 2015 | ITS | ‐5.79 (‐9.17 to ‐2.42) | 8.12 (4.11 to 12.46) | 6.06 (2.08 to 10.35) | 4.05 (‐0.35 to 8.85) | 1.90 (‐3.17 to 7.53) | ‐0.36 (‐6.25 to 6.33) |
| | NICU blood cultures | Flett 2015 | ITS | ‐1.14 (‐1.90 to ‐0.39) | 2.49 (‐0.51 to 5.67) | 1.06 (‐2.07 to 4.39) | ‐0.42 (‐3.93 to 3.36) | ‐1.95 (‐6.02 to 2.49) | ‐3.53 (‐8.26 to 1.72) |

cluster‐randomised trial (cRT); controlled before‐after (CBA) study; 95% confidence interval (95% CI); interrupted time series (ITS) study; neonatal intensive care unit (NICU); paediatric intensive care unit (PICU)

| Intervention | Outcome | Study | Type of study | Absolute post‐intervention difference | Absolute pre‐intervention difference | Post‐intervention level in control group | Relative effect |
|---|---|---|---|---|---|---|---|
| Public release of a range of quality indicators | All AMI processes | Tu 2009 | cRT | 2.0 | 0.9 | 65.6 | 0.0168 |
| | Use of standard admission orders | Tu 2009 | cRT | 6.1 | 0.7 | 72.5 | 0.0745 |
| | Left ventricular function assessment | Tu 2009 | cRT | 2.9 | 6.3 | 49.8 | ‐0.0683 |
| | Lipid test < 24 hours arrival | Tu 2009 | cRT | 3.8 | 1.6 | 51.1 | 0.0431 |
| | Fibrinolytics < 30 mins after arrival | Tu 2009 | cRT | 2.6 | 3.1 | 45.7 | ‐0.0109 |
| | Fibrinolytics decided by ED physician | Tu 2009 | cRT | 2.0 | 4.4 | 84.3 | ‐0.0285 |
| | Fibrinolytics prior to transfer to CCU | Tu 2009 | cRT | 3.8 | 2.9 | 95.7 | 0.0094 |
| | Aspirin < 6 hours arrival | Tu 2009 | cRT | 5.5 | 3.1 | 82.6 | 0.0291 |
| | B blockers < 12 hours arrival | Tu 2009 | cRT | 2.4 | 3.9 | 73.7 | ‐0.0204 |
| | Aspirin at discharge | Tu 2009 | cRT | 0.9 | 0.0 | 84.0 | 0.0107 |
| | B blockers at discharge | Tu 2009 | cRT | 0.6 | 0.0 | 85.6 | 0.0070 |
| | ACEi, ARB for LV dysfunction | Tu 2009 | cRT | 4.7 | 3.4 | 81.7 | 0.0159 |
| | Statin at discharge | Tu 2009 | cRT | 0.3 | 0.2 | 85.5 | 0.0012 |
| | All CHF processes | Tu 2009 | cRT | 1.0 | 3.0 | 54.6 | ‐0.0366 |
| | LVF assessment | Tu 2009 | cRT | 2.7 | 4.5 | 55.2 | ‐0.0326 |
| | Daily weights recorded | Tu 2009 | cRT | 1.3 | 0.3 | 24.0 | 0.0417 |
| | Counselling on > 1 aspect of CHF | Tu 2009 | cRT | 0.9 | 1.7 | 55.3 | ‐0.0145 |
| | ACEi, ARB for LV dysfunction | Tu 2009 | cRT | 6.3 | 1.7 | 92.4 | 0.0498 |
| | B blockers for LV dysfunction | Tu 2009 | cRT | 4.0 | 1.7 | 71.7 | 0.0321 |
| | Warfarin for AF | Tu 2009 | cRT | 0.6 | 3.1 | 64.2 | ‐0.0389 |

atrial fibrillation (AF); acute myocardial infarction (AMI); angiotensin‐converting enzyme inhibitor (ACEi); angiotensin‐2 receptor blockers (ARB); beta‐adrenergic blocking agents (B blockers); cluster‐randomised trial (cRT); coronary care unit (CCU); congestive heart failure (CHF); emergency department (ED); left ventricular (LV); left ventricular failure (LVF); minutes (mins)

| Intervention | Outcome | Study | Type of study | Absolute post‐intervention difference | Absolute pre‐intervention difference | Post‐intervention level in control group | Relative effect |
|---|---|---|---|---|---|---|---|
| Public release of a range of quality indicators | AMI 30‐day mortality | Tu 2009 | cRT | 2.4 | 0.5 | 9.8 | 0.1939 |
| | AMI 1‐year mortality | Tu 2009 | cRT | 3.1 | 1 | 19.4 | 0.1082 |
| | STEMI 30‐day mortality | Tu 2009 | cRT | 3.1 | 0.4 | 8.3 | 0.3253 |
| | STEMI 1‐year mortality | Tu 2009 | cRT | 3.9 | 1.2 | 13.5 | 0.2000 |
| | NSTEMI 30‐day mortality | Tu 2009 | cRT | 2.3 | 0.3 | 10.5 | 0.1905 |
| | NSTEMI 1‐year mortality | Tu 2009 | cRT | 3 | 0.9 | 22.6 | 0.0929 |
| | CHF 30‐day mortality | Tu 2009 | cRT | 1 | 0.9 | 9.6 | 0.0104 |
| | CHF 1‐year mortality | Tu 2009 | cRT | 2.6 | 0.6 | 30.3 | 0.0660 |
| | CHF and LV dysfunction 30‐day mortality | Tu 2009 | cRT | 0.9 | 0.6 | 8.5 | 0.0353 |
| | CHF and LV dysfunction 1‐year mortality | Tu 2009 | cRT | 6.3 | 1.8 | 26.3 | 0.1711 |
| Mandatory reporting of healthcare‐associated infections | Pediatric quality indicator per 1000 eligible discharges | Rinke 2015 | CBA | 0.6 | 0.5 | 1 | 0.1000 |

| Intervention | Outcome | Study* | Type of study | Absolute level effect (95% CI) | Relative change at 4 months (95% CI) | Relative change at 8 months (95% CI) | Relative change at 12 months (95% CI) | Relative change at 24 months (95% CI) |
|---|---|---|---|---|---|---|---|---|
| Hospital quality process and outcome metrics reported on a public website | 30‐day risk‐adjusted mortality | Joynt 2016 | ITS | 0.12 (0.03 to 0.21) | 1.57 (‐4.28 to 8.18) | ‐2.47 (‐8.20 to 4.03) | 3.71 (‐3.25 to 11.74) | 7.18 (‐1.91 to 18.13) |
| Public reporting of risk‐standardised hospital re‐admission rates | 30‐day re‐admission (AMI) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐2.04 (‐8.56 to 5.48) | ‐1.36 (‐7.92 to 6.20) | ‐0.69 (‐7.34 to 7.00) | 0.72 (‐6.32 to 8.90) |
| | 30‐day re‐admission (heart failure) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐1.39 (‐4.17 to 1.56) | ‐1.84 (‐4.59 to 1.08) | ‐1.88 (‐4.68 to 1.10) | ‐2.78 (‐6.42 to 1.15) |
| | 30‐day re‐admission (pneumonia) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐4.44 (‐13.61 to 6.91) | ‐5.07 (‐14.17 to 6.20) | ‐5.69 (‐14.71 to 5.47) | ‐7.45 (‐18.10 to 6.37) |
| | 30‐day re‐admission (COPD) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐6.66 (‐11.42 to ‐1.37) | ‐0.76 (‐6.11 to 5.23) | ‐7.64 (‐12.31 to ‐2.44) | ‐9.06 (‐13.62 to ‐4.00) |
| | 30‐day re‐admission (diabetes) | DeVore 2016 | ITS | 0.00 (‐0.00 to 0.01) | ‐0.65 (‐13.66 to 16.96) | 0.00 (‐13.13 to 17.81) | 0.65 (‐12.44 to 18.35) | 1.98 (‐13.57 to 24.36) |
| | 30‐day mortality (AMI) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | 34.38 (2.71 to 94.32) | 35.83 (2.79 to 100.17) | 37.38 (2.88 to 106.67) | 43.06 (3.20 to 133.08) |
| | 30‐day mortality (heart failure) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | 6.04 (‐5.86 to 21.37) | 13.78 (‐0.56 to 32.94) | 9.98 (‐3.46 to 27.77) | 13.31 (‐0.54 to 31.64) |
| | 30‐day mortality (pneumonia) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐3.96 (‐23.10 to 27.85) | ‐3.72 (‐16.70 to 14.05) | 2.94 (‐18.04 to 19.00) | ‐3.84 (‐22.51 to 26.69) |
| | 30‐day mortality (COPD) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | 20.89 (5.51 to 41.52) | 21.63 (5.68 to 43.24) | 20.99 (5.54 to 41.75) | 22.00 (5.77 to 44.13) |
| | 30‐day mortality (diabetes) | DeVore 2016 | ITS | 0.00 (0.00 to 0.00) | ‐14.73 (‐34.83 to 23.29) | ‐15.10 (‐35.48 to 24.12) | ‐14.78 (‐34.92 to 23.40) | ‐19.39 (‐42.65 to 35.66) |

Acute Myocardial Infarction (AMI); ST‐Elevation Myocardial Infarction (STEMI); Non‐ST‐Elevation Myocardial Infarction (NSTEMI); Congestive Heart Failure (CHF); Left Ventricular (LV); Chronic Obstructive Pulmonary Disease (COPD); Cluster Randomised Trial (cRT); Controlled Before‐After (CBA) study; Interrupted Time Series (ITS) study; 95% Confidence Interval (95% CI)

* Joynt 2016 and DeVore 2016 provided outcomes in quarters rather than months and so have been presented as 4‐ and 8‐months rather than the pre‐specified 3‐ and 6‐months.
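The interrupted time series tables report a relative change at fixed time points after the intervention. How these were derived is not described in this section; one common approach, sketched below under that assumption, fits a segmented regression and expresses the estimated effect at a given month as a percentage of the projected pre‐intervention trend. The function name, parameter names, and coefficients are all hypothetical.

```python
def its_relative_change(intercept, slope, level_change, slope_change,
                        start, months_after):
    """Relative change (%) versus the projected pre-intervention trend.

    intercept, slope: pre-intervention level and monthly trend
    level_change, slope_change: immediate step and change in trend
    start: month in which the intervention began
    months_after: months elapsed since the intervention
    """
    t = start + months_after
    counterfactual = intercept + slope * t          # outcome expected without intervention
    effect = level_change + slope_change * months_after
    return 100.0 * effect / counterfactual

# Illustrative coefficients only; not taken from any included study.
rel = its_relative_change(intercept=36.0, slope=0.25, level_change=-2.0,
                          slope_change=-0.5, start=12, months_after=4)
print(rel)  # -10.0
```

With these made‐up numbers, the projected outcome four months after the intervention is 40, the estimated effect is ‐4, and the relative change is therefore ‐10%.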

Primary outcomes

Changes in healthcare utilisation by consumers

This review provided an indication of the likely effect of public release of performance data on healthcare utilisation by consumers. There was low‐certainty evidence from three studies that public release of performance data may make little or no difference to long‐term healthcare utilisation by consumers. Two studies included data from over 18,294 insurance beneficiaries (Farley 2002a; Farley 2002b), and it was unclear how many consumers were analysed by Romano 2004.

There was low‐certainty evidence from one study that public release of performance data can lead to small and transient effects on healthcare utilisation behaviour by consumers (Romano 2004). This study analysed hospital patient volumes following implementation of the California Hospital Outcomes Project, which classified acute hospitals as better, worse, or neither better nor worse than expected, based on the adjusted mortality of patients with acute myocardial infarction, or undergoing diskectomy. They found that hospitals that were high performing for adjusted mortality from acute myocardial infarction received higher volumes of patients with acute myocardial infarction than expected in the third and fourth quarters after publication of the California Hospital Outcomes Project, although there was no measurable effect in the early period following publication. Similarly, inconsistent trends were observed amongst diskectomy patients; the only reported association was between high performing (low complication) hospitals and volume of patients with lumbar diskectomy. However, this effect size was very small (less than one additional patient per month per hospital), and so may not have been an important effect. Performance data from New York was released as part of the Cardiac Surgery Reporting System. Romano 2004 analysed Cardiac Surgery Reporting System data from New York, and found that high performing (low mortality) hospitals received a higher number of cases in the month following publication of a report (74.5 actual cases versus 61.1 expected). In the six months following designation as a high performance outlier, hospitals admitted 24 (22%) additional patients for coronary artery bypass surgery, and within two months after designation as a low performance outlier, hospitals treated 11 (16%) fewer patients. However, all volume effects had disappeared within three months of data publication.

There was low‐certainty evidence that suggested that public release of performance data might affect the behaviour of specific subgroups. For example, Farley 2002b reported that the subgroup of enrollees who actually read the Consumer Assessment of Healthcare Providers and Systems report chose plans with higher standardised Consumer Assessment of Healthcare Providers and Systems ratings than those in the control group (2.58 versus 1.81, P < 0.01). Similarly, Romano 2004 found that the only detectable changes in hospital volume were among patients undergoing coronary artery bypass grafting in New York, and this change was entirely driven by patients who identified as 'white and other race'. They did not find evidence that black or Hispanic patient volumes were affected by designating a hospital as a high coronary artery bypass graft mortality outlier.

It is possible that restrictions on patient choice might act as an effect modifier (Aggarwal 2017; Moscelli 2017). However, the interventions in Farley 2002a and Farley 2002b were presented as 'true' choices, since new insurance beneficiaries should not have been limited by concerns around cost and distance. Similarly, Romano 2004 studied hospital choice amongst elective surgical populations seeking treatment at hospitals within a single city.

Changes in healthcare decisions taken by healthcare providers (professionals and organisations)

This review provides some indication of the likely effect of public release of performance data on decision making by healthcare professionals. There was low‐certainty evidence from four studies that public release of performance data may make little or no difference to decisions taken by healthcare professionals. These studies included three million births (Jang 2011), and 67 healthcare providers (Ikkersheim 2013; Flett 2015; Zhang 2016).

Two studies reported modest effects on some outcomes. Ikkersheim 2013 did not find any clear effect on referral patterns following public release of data about cataract surgery, or hip and knee replacement. However, there was a small effect on referrals for breast cancer, with general practitioners in the intervention group referring 1.0% more cases (P = 0.01) to hospitals per incremental percentage point on the report card scale of medical effectiveness. Similarly, Zhang 2016 found that the effect of displaying prescription performance data in outpatient areas varied across outcomes and disease groups. Public release of performance data did not change the number of prescriptions containing antibiotics in the bronchitis group, two or more antibiotics in the gastritis group, injections in the hypertension group, or antibiotic injections in the bronchitis and hypertension groups. Similarly, the average prescription cost did not change for patients with hypertension. However, public release of performance data did appear to reduce prescriptions containing antibiotics for gastritis (intervention effect ‐12.7%, P < 0.001), two or more antibiotics for gastritis (‐3.8%, P = 0.005), injections for gastritis (‐10.6%, P < 0.001), and antibiotic injections for gastritis (‐10.7%, P < 0.001). Average antibiotic prescription cost fell for patients with bronchitis (‐7.9%, P < 0.001) and gastritis (‐5.7%, P = 0.005). These mixed findings were also complicated by evidence that public release of prescribing data increased prescriptions containing antibiotics for patients with hypertension (intervention effect 2.0%, P = 0.08), and injections for bronchitis (2.0%, P = 0.012).

One study found that the first public release of hospital caesarean section rate data may have slightly reduced the number of patients undergoing this procedure (‐0.8%, P < 0.01), and that this persisted until the end of the study, 20 months later. However, further public releases of data did not exhibit any further effect on caesarean section rates (Jang 2011).

Finally, Flett 2015 did not find any evidence that mandatory public reporting of central line‐associated bloodstream infections had any effect on blood culture testing or antibiotic utilisation in paediatric and neonatal intensive care units in the United States.

Changes in the healthcare utilisation decisions of purchasers

We found no evidence on the effect of public release of performance data on this outcome.

Changes in provider performance

This review provides some indication of the likely effect of public release of performance data on healthcare provider performance. There was low‐certainty evidence from one study that public release of performance data may make little or no difference to objective measures of provider performance. Tu 2009 included data from 82 healthcare providers.

Tu 2009 found that a media campaign and release of hospital performance data online had no effect on 11 of 12 acute myocardial infarction process‐of‐care quality indicators. The twelfth acute myocardial infarction quality indicator (fibrinolytics given prior to transfer to the Coronary Care Unit or Intensive Care Unit) increased by 5.8% (P = 0.02), although no statistical correction was made for multiple hypothesis testing. Similarly, public release of performance data did not clearly affect five of six congestive heart failure quality indicators, although the sixth (Angiotensin‐Converting Enzyme (ACE) inhibitor or Angiotensin Receptor Blocker (ARB) for left ventricular dysfunction) increased by 5.9% (P = 0.02). Neither the acute myocardial infarction nor congestive heart failure composite process‐of‐care quality indicators improved following the public release of performance data.

The main outcomes in two of the studies described above are sometimes considered evidence of provider performance (Jang 2011; Zhang 2016). However, as these outcomes (caesarean section and antibiotic prescribing) may be appropriate clinical decisions, they are not direct evidence of poor performance, so we have considered them under 'Changes in healthcare decisions taken by healthcare providers (professionals and organisations)' instead of 'Provider performance'.

Changes in patient outcome

Low‐certainty evidence from five studies suggested that public release of performance data may slightly improve patient outcomes. We graded the certainty as low, because the evidence was mixed, with two studies reporting improvements (Tu 2009; Liu 2017), and three finding no evidence of improved patient outcomes (Rinke 2015; DeVore 2016; Joynt 2016). These five studies included 7503 healthcare providers and 315,092 hospitalisations.

Two studies reported that patient outcomes were not changed by publication of hospital‐level quality metrics on Hospital Compare, which is a website run by the Centers for Medicare & Medicaid Services. DeVore 2016 did not find any evidence that publication of hospital re‐admission rates had an effect on 30‐day re‐admissions for patients with myocardial infarction, heart failure, or pneumonia. Similarly, Joynt 2016 reported a very small slowing in a pre‐existing trend (change 0.13% per quarter; 95% CI 0.12% to 0.14%) towards reduced 30‐day mortality following publication of mortality rates on Hospital Compare.

Rinke 2015 did not find any evidence that mandatory hospital reporting of central line‐associated bloodstream infections had any effect on the rate of paediatric central line‐associated bloodstream infections. However, Liu 2017 reported a 34% reduction (incidence rate ratio 0.66, P < 0.001) in adult central line‐associated bloodstream infections after mandatory reporting, when compared with the 25‐month period before each state introduced legislation. This discrepancy between the findings of Rinke 2015 and Liu 2017 might reflect a genuine difference in impact on paediatric and adult central line‐associated bloodstream infection rates. Importantly, both studies found that central line‐associated bloodstream infection rates declined across the USA during their study period, including in states that did not introduce mandatory reporting. It is unclear whether public release of performance data in some states contributed to this national decline, even within states that did not introduce mandatory reporting.

Tu 2009 found that public release of hospital performance data online and through the media was associated with a 2.5% reduction in 30‐day mortality (P = 0.045) for patients with acute myocardial infarction, although no such effect was observed in patients with congestive heart failure.

Changes in staff morale

We found no evidence on the effect of public release of performance data on this outcome.

Secondary outcomes

Unintended and adverse effects or harms

We found no evidence on the effect of public release of performance data on this outcome.

Impact on equity

Low‐certainty evidence from one study suggested that public release of performance data may have different effects on advantaged and disadvantaged populations (Romano 2004). As described in 'Changes in healthcare utilisation by consumers', this study reported that patients who identified as white and other race in New York might have been influenced by publicly released hospital mortality rates when choosing a hospital in which to undergo coronary artery bypass grafting. However, this same effect was not observed in black or Hispanic patients undergoing the same procedure at hospitals in New York.

Other outcome measures

Two studies reported on awareness, knowledge of performance data, attitude, and cost data (Farley 2002b; Ikkersheim 2013). Farley 2002b reported secondary outcomes as a result of a survey, although this was disseminated using a 3:1 ratio, and the results were further complicated by low response rates. Ikkersheim 2013 undertook semi‐structured interviews with 17 GPs but these were largely focused on the specific intervention (report cards) and the findings were poorly reported. Therefore, we decided to exclude these results, and did not report these outcomes.

Discussion

Summary of main results

Changes in healthcare utilisation by consumers

Changes in healthcare utilisation are one of the two key ways in which public release of performance data might improve healthcare quality (Berwick 2003). However, only three studies addressed the impact on healthcare utilisation decisions by consumers (Farley 2002a; Farley 2002b; Romano 2004). We judged that they provided low‐certainty evidence of little or no effect. There were consistent results from two studies that showed some consumers may engage with published performance data, and change their healthcare choices accordingly; this group was too small to register an effect in the population as a whole (Farley 2002b; Romano 2004).

Changes in healthcare decisions taken by healthcare providers (professionals and organisations)

There was low‐certainty evidence with mixed findings from four studies, which reported either modest effects (Jang 2011; Ikkersheim 2013; Zhang 2016), or no effect (Flett 2015), on healthcare decisions taken by healthcare providers. Two studies found evidence that public release of performance data had modest effects on some of the healthcare decisions taken by healthcare providers, but not all of the decisions measured (Ikkersheim 2013; Zhang 2016). One study found that the first public release of data had a small but sustained effect on caesarean rates, but that subsequent releases did not affect the rate any further (Jang 2011).

Changes in provider performance

There was low‐certainty evidence from one study that informed conclusions about the effect of public release of performance data on provider performance. A single randomised trial addressed this question, and found that 2/18 (11.1%) of measured processes appeared to improve in the intervention hospitals (Tu 2009). However, as no correction was made for multiple hypothesis testing (Bender 2001), this did not provide convincing evidence that provider performance was affected by public release of performance data.
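The multiple‐testing concern can be illustrated with a short calculation. The sketch below is not part of Tu 2009 or of this review's analysis. It assumes a conventional significance level of 0.05 and uses the Bonferroni correction as one simple example of the adjustments discussed by Bender 2001; the function names are hypothetical. The family‐wise error calculation additionally assumes that the 18 tests are independent, which is unlikely to hold exactly for related process‐of‐care indicators.

```python
# Illustrative sketch only: alpha = 0.05 and the Bonferroni method are
# assumptions, not choices reported by Tu 2009 or by this review.

def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Chance of at least one false positive across n_tests tests,
    assuming (hypothetically) that the tests are independent."""
    return 1.0 - (1.0 - alpha) ** n_tests


ALPHA = 0.05          # assumed conventional significance level
N_INDICATORS = 18     # 12 acute myocardial infarction + 6 heart failure

# P values reported for the two indicators that appeared to improve.
reported_p = {
    "fibrinolytics before transfer (acute myocardial infarction)": 0.02,
    "ACE inhibitor or ARB (congestive heart failure)": 0.02,
}

threshold = bonferroni_threshold(ALPHA, N_INDICATORS)
fwer = familywise_error_rate(ALPHA, N_INDICATORS)
still_significant = [name for name, p in reported_p.items() if p < threshold]

print(f"Bonferroni threshold per test: {threshold:.4f}")
print(f"Family-wise error rate without correction: {fwer:.2f}")
print(f"Indicators below the corrected threshold: {len(still_significant)}")
```

Under these assumptions, neither reported P value of 0.02 falls below the corrected per‐test threshold, which is consistent with the cautious interpretation given above.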

Changes in patient outcome

There was low‐certainty evidence from five studies that reported patient outcomes. Their findings were inconsistent, with two reporting improvements (Tu 2009; Liu 2017), and three reporting no difference (Rinke 2015; DeVore 2016; Joynt 2016).

Impact on equity