Strategies for enhancing the implementation of school‐based policies or practices targeting risk factors for chronic disease

Abstract

Background

A number of school‐based policies or practices appear to be effective in improving child diet and physical activity, and in preventing excessive weight gain, tobacco use or harmful alcohol use. Schools, however, frequently fail to implement such evidence‐based interventions.

Objectives

The primary aims of the review are to examine the effectiveness of strategies aiming to improve the implementation of school‐based policies, programs or practices to address child diet, physical activity, obesity, tobacco or alcohol use.

Secondary objectives of the review are to: examine the effectiveness of implementation strategies on health behaviour (e.g. fruit and vegetable consumption) and anthropometric outcomes (e.g. body mass index, weight); describe the impact of such strategies on the knowledge, skills or attitudes of school staff involved in implementing health‐promoting policies, programs or practices; describe the cost or cost‐effectiveness of such strategies; and describe any unintended adverse effects of strategies on schools, school staff or children.

Search methods

We searched all electronic databases on 16 July 2017 for studies published up to 31 August 2016. We searched the following electronic databases: Cochrane Library including the Cochrane Central Register of Controlled Trials (CENTRAL); MEDLINE; MEDLINE In‐Process & Other Non‐Indexed Citations; Embase Classic and Embase; PsycINFO; Education Resource Information Center (ERIC); Cumulative Index to Nursing and Allied Health Literature (CINAHL); Dissertations and Theses; and SCOPUS. We screened the reference lists of all included trials for citations of other potentially relevant trials. We handsearched all publications between 2011 and 2016 in two specialty journals (Implementation Science and Journal of Translational Behavioral Medicine) and conducted searches of the WHO International Clinical Trials Registry Platform (ICTRP) (http://apps.who.int/trialsearch/) as well as the US National Institutes of Health registry (https://clinicaltrials.gov). We consulted with experts in the field to identify other relevant research.

Selection criteria

'Implementation' was defined as the use of strategies to adopt and integrate evidence‐based health interventions and to change practice patterns within specific settings. We included any trial (randomised or non‐randomised) conducted at any scale, with a parallel control group, that compared a strategy for the implementation by school staff of policies or practices addressing diet, physical activity, overweight or obesity, tobacco or alcohol use with 'no intervention', 'usual' practice or a different implementation strategy.

Data collection and analysis

Citation screening, data extraction and assessment of risk of bias were performed by review authors in pairs. Disagreements between review authors were resolved by consensus or, if required, by a third author. Considerable trial heterogeneity precluded meta‐analysis. We narratively synthesised trial findings by describing the effect size of the primary outcome measure for policy or practice implementation (or the median of such measures where a single primary outcome was not stated).
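The summary rule described above (one effect size per trial, taking the median where no single primary outcome is stated) can be sketched as follows. This is a minimal illustration under stated assumptions, not the review's analysis code: the function and field names are hypothetical, and the effect is assumed to be the between‐group difference in change from baseline, falling back to the difference at follow‐up when no baseline values are available.

```python
from statistics import median
from typing import Optional


def unadjusted_effect(
    intervention_post: float,
    control_post: float,
    intervention_base: Optional[float] = None,
    control_base: Optional[float] = None,
) -> float:
    """Unadjusted effect for one implementation outcome (hypothetical helper).

    Assumption: with baseline data, the effect is the difference in change
    from baseline between groups; otherwise it is the follow-up difference.
    """
    if intervention_base is not None and control_base is not None:
        return (intervention_post - intervention_base) - (control_post - control_base)
    return intervention_post - control_post


def trial_effect(outcomes: list[dict]) -> float:
    """Median of the unadjusted effects across a trial's implementation outcomes."""
    return median(unadjusted_effect(**outcome) for outcome in outcomes)


# Illustrative (made-up) percentages of schools implementing three practices.
example_trial = [
    {"intervention_post": 60.0, "control_post": 40.0,
     "intervention_base": 30.0, "control_base": 30.0},
    {"intervention_post": 50.0, "control_post": 45.0},
    {"intervention_post": 80.0, "control_post": 50.0},
]
summary = trial_effect(example_trial)
```

With a single stated primary outcome, the list has one entry and the median is simply that outcome's effect.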

Main results

We included 27 trials, 18 of which were conducted in the USA. Nineteen studies employed randomised controlled trial (RCT) designs. Fifteen trials tested strategies to implement healthy eating policies, practices or programs; six trials tested strategies targeting physical activity policies or practices; and three trials targeted tobacco policies or practices. Three trials targeted a combination of risk factors. None of the included trials sought to increase the implementation of interventions to delay initiation or reduce the consumption of alcohol. All trials examined multi‐strategic implementation strategies and no two trials examined the same combinations of implementation strategies. The most common implementation strategies included educational materials, educational outreach and educational meetings. For all outcomes, the overall quality of evidence was very low and the risk of bias was high for the majority of trials for detection and performance bias.

Among 13 trials reporting dichotomous implementation outcomes, that is, the proportion of schools or school staff (e.g. classes) implementing a targeted policy or practice, the median unadjusted (improvement) effect sizes ranged from 8.5% to 66.6%. Of seven trials reporting the percentage of a practice, program or policy that had been implemented, the median unadjusted effect (improvement), relative to the control, ranged from ‐8% to 43%. The effect, relative to control, reported in two trials assessing the impact of implementation strategies on the time per week teachers spent delivering targeted policies or practices ranged from 26.6 to 54.9 minutes per week. Among trials reporting other continuous implementation outcomes, findings were mixed. Four trials were conducted of strategies that sought to achieve implementation 'at scale', that is, across samples of at least 50 schools; three of these reported improvements in implementation.

The impact of interventions on student health behaviour or weight status was mixed. Three of the eight trials with physical activity outcomes reported no significant improvements. Two trials reported reductions in tobacco use in the intervention group relative to control. Seven of nine trials reported no between‐group differences in student overweight, obesity or adiposity. Improvements in child dietary intake were generally reported among trials reporting these outcomes. Three trials assessed the impact of implementation strategies on the attitudes of school staff and found mixed effects. Two trials specified in the study methods an assessment of potential unintended adverse effects, and neither reported any. One trial reported that implementation support did not significantly increase school revenue or expenses, and another, which conducted a formal economic evaluation, reported the intervention to be cost‐effective. Trial heterogeneity, and the lack of consistent terminology describing implementation strategies, were important limitations of the review.

Authors' conclusions

Given the very low quality of the available evidence, it is uncertain whether the strategies tested improve implementation of the targeted school‐based policies or practices, student health behaviours, or the knowledge or attitudes of school staff. It is also uncertain whether strategies to improve implementation are cost‐effective or whether they result in unintended adverse consequences. Further research is required to guide efforts to facilitate the translation of evidence into practice in this setting.


Plain language summary

Improving the implementation of school‐based policies and practices to improve student health

Review question: This review aimed to assess how effective strategies were in supporting the implementation of school‐based policies and practices to address student diet, physical activity, excessive weight gain, tobacco use or alcohol use. We also assessed whether these strategies led to improvements in these health behaviours or in student weight status, improved the attitudes of school staff or their knowledge regarding implementation, had any adverse effects, and were cost‐effective.

Background: Research has identified a range of school‐based policies and practices that may be effective in improving student health behaviours. Despite this, such policies and practices are often not implemented in schools, even in circumstances where doing so is mandatory. Unless evidence‐based policies and practices are implemented, they cannot benefit public health.

Study characteristics: We included 27 trials, 18 of which were conducted in the USA. Fifteen trials tested strategies to implement healthy eating policies, practices or programs; six trials tested strategies targeting physical activity policies or practices; and three trials targeted tobacco policies or practices. Three trials targeted a combination of health behaviours. None of the included trials sought to increase the implementation of interventions to delay initiation or reduce the consumption of alcohol. The trials tested a range of implementation support strategies, including educational materials, educational meetings, the use of opinion leaders, external funding, local consensus processes and tailored interventions.

Search date: The evidence is current to 31 August 2016.

Key results: It is uncertain whether the strategies tested improve implementation of the targeted school‐based policies or practices, student health behaviours, or the knowledge or attitudes of school staff. It is also uncertain whether the strategies tested result in unintended adverse effects or whether they are cost‐effective.

Limitations: Trial heterogeneity, and the lack of consistent terminology describing implementation strategies, were important limitations of the review.

Quality of evidence: The overall quality of the evidence was rated as very low for all outcomes for which the trials reported effects.


Summary of findings

Strategies for enhancing the implementation of school‐based policies or practices targeting risk factors for chronic disease

Patient or population: School aged children (5 ‐ <18 years)
Settings: School
Intervention: Any strategy (e.g. educational materials, educational meetings, audit and feedback, opinion leaders, education outreach visits) with the intention of improving the implementation of health promoting policies, programs or practices for physical activity, healthy eating, obesity prevention, tobacco use prevention or alcohol use prevention in schools
Comparison: No intervention or usual practice (22 trials), alternate intervention (2 trials) or minimal support comparison group (3 trials)

| Outcomes | Impact | Number of participants | Quality of the evidence |
| --- | --- | --- | --- |
| Implementation of school‐based policies, practices or programs that aim to promote healthy or reduce unhealthy behaviours relating to child diet, physical activity, obesity, or tobacco or alcohol use | We are uncertain whether strategies improve the implementation of school‐based policies, practices or programs that aim to promote healthy or reduce unhealthy behaviours relating to child diet, physical activity, obesity, or tobacco or alcohol use. Among 13 trials reporting dichotomous implementation outcomes (the proportion of schools or school staff (e.g. classes) implementing a targeted policy or practice), the median unadjusted (improvement) effect sizes ranged from 8.5% to 66.6%. Of seven trials reporting the percentage of a practice, program or policy that had been implemented, the median unadjusted effect (improvement), relative to the control, ranged from ‐8% to 43%. The effect, relative to control, reported in two trials assessing the impact of implementation strategies on the time per week teachers spent delivering targeted policies or practices ranged from 26.6 to 54.9 minutes per week. | 1599 schools (27 trials) | Very low (a, b) |
| Measures of student physical activity, diet, weight status, tobacco or alcohol use | We are uncertain whether strategies to improve the implementation of school‐based policies, practices or programs targeting risk factors for chronic disease impact on measures of student physical activity, diet, weight status, tobacco or alcohol use | 29,181 students (f) (21 trials) | Very low (a, b, c) |
| Knowledge, skills or attitudes of school staff involved regarding the implementation of health promoting policies, or practices | We are uncertain whether strategies to improve the implementation of school‐based policies, practices or programs targeting risk factors for chronic disease impact on the knowledge, skills or attitudes of school staff | 1347 stakeholders (3 trials) | Very low (a, b) |
| Cost or cost‐effectiveness of strategies to improve the implementation | We are uncertain whether strategies to improve the implementation of school‐based policies, practices or programs targeting risk factors for chronic disease are cost‐effective | 42 schools (1 trial); 473 students (1 trial) (g) | Very low (a, b, d) |
| Unintended adverse effects of strategies to improve implementation on schools, school staff or children | We are uncertain whether strategies to improve the implementation of school‐based policies, practices or programs targeting risk factors for chronic disease result in unintended adverse effects or consequences | 68 schools and 4603 students (h) (2 trials) | Very low (b, c) |

High quality: Further research is very unlikely to change our confidence in the estimate of effect.

a: Downgraded one level due to limitations in the design.
b: Downgraded one level due to unexplained heterogeneity.
c: Downgraded one level due to indirectness.
d: Downgraded one level due to imprecision.
e: GRADE Working Group grades of evidence.
f: Two trials measured student behaviour through the use of non‐student data (e.g. purchases) and did not provide student sample sizes.
g: One trial reported on the impact of an intervention on school level revenue. One trial reported on cost‐effectiveness.
h: One trial measured adverse events through the use of non‐student data (i.e. canteen profits) and did not provide student sample sizes.

Background

Description of the condition

Five health risks (physical inactivity, poor diet, tobacco smoking, risky alcohol consumption and obesity) are the most common modifiable causes of chronic disease (Lim 2012). These risk factors, all among the top 20 risk factors contributing to global death and disability, each account for a significant proportion of the total global disease burden: physical inactivity (2.8%), dietary risks (9.2%), tobacco smoking (5.5%), alcohol use (3.8%), and high body‐mass index (BMI) (3.8%) (IHME 2013). Together, they were responsible for more than 580 million years lived with disability and 24 million deaths in 2010 (IHME 2013). As a consequence, reducing the impact of these modifiable health risks in the community has been identified as a public health priority (WHO 2011).

Targeting health risks in children is an important chronic disease prevention strategy, as health behaviours established in childhood are likely to track into adulthood (Swinburn 2011). Schools are an attractive setting for the implementation of child‐focused chronic disease‐prevention initiatives, as they offer continuous and intensive contact with children for prolonged periods (WHO 2012). Furthermore, evidence from systematic reviews supports a range of benefits from school‐based health programs (Dobbins 2013; Dusenbury 2003; Foxcroft 2011; Jaime 2009; Kahn 2002; Thomas 2013; Waters 2011). For instance, comprehensive physical activity interventions can improve child activity during the school day, their movement skill proficiency and knowledge for lifetime physical activity (Kahn 2002). A Cochrane review of school‐based programs for smoking found that interventions (longer than one year in duration) that aimed to prevent smoking uptake reduced smoking rates by up to 12% (Thomas 2013). Similarly, Cochrane reviews of obesity and alcohol prevention programs include examples of interventions that have positive protective effects on child BMI and alcohol misuse (Dusenbury 2003; Foxcroft 2011; Waters 2011). Finally, systematic review evidence also suggests that, when implemented, school food policies are generally effective in improving the food environment and dietary intake of school students (Jaime 2009).

Despite such evidence, the implementation of policies, intervention programs or recommended practices to reduce these health risks in usual community contexts is poor (AONSW 2012; De Silva‐Sanigorski 2011; Downs 2012; Gabriel 2009; Nathan 2011). Research conducted in Brazil, Canada and Australia, for example, suggests that fewer than 10% of schools are compliant with legislation, policy or nutrition guidelines regarding the sale and promotion of healthy foods in schools (De Silva‐Sanigorski 2011; Downs 2012; Gabriel 2009). In Australia, a recent report highlighted that around 30% of schools did not provide recommended planned physical activity to children (AONSW 2012). Further, in the USA, fewer than 17% of schools effectively implement substance misuse prevention programs, including those related to tobacco and alcohol use (Ennett 2003). The failure to implement evidence‐based programs in the community denies the public the benefits such health research is intended to deliver. Improving the translation of research findings, characterised by the transition of evidence regarding an intervention to its application in the real world, represents a significant challenge for 21st century medicine (Wolfenden 2015).

Description of the intervention

Research about a treatment or intervention cannot lead to health outcomes if health systems, organisations, or professionals do not use interventions with known health benefits (Eccles 2009). The process of research translation is, however, complex. As a conceptual guide, the US National Institutes of Health has described five phases of the translation process (T0 – T4) from research discovery to population health impact (Glasgow 2012; Khoury 2010). Earlier phases (T0 – T2) focus on basic science, epidemiology and testing the efficacy of health interventions. Translation Phase 3, known as 'T3', is dedicated to research designed to increase the implementation of evidence‐based interventions, practices, policies or programs in practice (Glasgow 2012). This is achieved through 'implementation strategies', that is, techniques designed to change practice patterns within specific settings to improve the 'implementation' of evidence‐based health interventions (Glasgow 2012; Rabin 2008). There is a range of potential implementation strategies that can improve the likelihood of schools' implementation of policies and practices to promote student health and reduce the risk of future disease, including those listed in the Cochrane Effective Practice and Organisation of Care (EPOC) taxonomy (EPOC 2015). Such strategies might include continuous quality improvement processes, educational materials, performance monitoring, local consensus processes and educational outreach visits.

Why it is important to do this review

Studying the effectiveness of 'implementation strategies', and why these strategies succeed or fail, provides important information for future implementation research and informs decisions of policy makers and practitioners interested in ensuring evidence‐based chronic disease prevention programs are sufficiently implemented to yield health benefits. A number of systematic reviews have been conducted describing the effectiveness of strategies to implement practice guidelines and improve professional practice of clinicians in clinical settings, such as audit and feedback (Ivers 2012), reminders (Arditi 2017), education meetings and workshops (Forsetlund 2009), and incentives (Scott 2011). However, implementation research in non‐clinical community settings has largely been overlooked (Buller 2010). To our knowledge, few systematic reviews concerning implementation of community interventions have been conducted; only one has examined strategies to implement chronic disease prevention programs in schools (Rabin 2010), and another has done so within childcare settings (Wolfenden 2016). The review in schools included studies investigating cancer prevention strategies and identified only nine school‐based implementation strategies. Moreover, it included only studies published until the beginning of 2008. To guide optimal implementation of school‐based health initiatives, further synthesis of evidence is warranted to ensure the inclusion of all relevant studies within the school setting. By doing so, this review aims to provide evidence for how health promotion practitioners and education systems can design and optimally implement policies, programs and practices in the school setting to promote healthy behaviours of children.

Objectives

The primary aims of the review are to examine the effectiveness of strategies aiming to improve the implementation of school‐based policies, programs or practices to address child diet, physical activity, obesity, tobacco or alcohol use.

Secondary objectives of the review are to:

- examine the effectiveness of implementation strategies on health behaviour (e.g. fruit and vegetable consumption) and anthropometric outcomes (e.g. BMI, weight);
- describe the impact of such strategies on the knowledge, skills or attitudes of school staff involved in implementing health‐promoting policies, programs or practices;
- describe the cost or cost‐effectiveness of such strategies; and
- describe any unintended adverse effects of strategies on schools, school staff or children.

Methods

Criteria for considering studies for this review

Types of studies

Strategies to improve the implementation of policies, programs or practices are often complex in nature and have been evaluated with a wide variety of methods and designs. While results of randomised controlled trials (RCTs) are considered more robust, using this study design is often impractical or inappropriate for complex public health interventions (Glasgow 1999). We are aware of an ongoing RCT evaluating implementation strategies in schools; however, we envisaged that there would be a paucity of completed trials of this kind. To overcome this, we included any trial (randomised or non‐randomised) with a parallel control group published in any language including the following trial designs:

- RCTs and cluster‐RCTs;
- quasi‐RCTs and cluster quasi‐RCTs; and
- controlled before and after studies (CBAs), cluster‐CBAs.

Studies assessing any strategy aiming to improve the implementation of policies, programs or practices in a school setting which target healthy eating, physical activity, obesity prevention, tobacco or alcohol prevention (or a combination of these) were eligible. To be included, trials were required to report the impact of a defined implementation strategy on an implementation outcome between experimental groups.

Types of participants

We included studies set in schools (e.g. elementary, primary, secondary, middle, high and central schools) where the age of students was typically between five and 18 years. Study participants could be any stakeholders who may influence the uptake, implementation or sustainability of the target health‐promoting policy, practice or program in schools, including teachers, managers, cooks or other staff of schools and education departments. Study participants may also include administrators, officials or representatives of school services, or other health, education, government or non‐government personnel responsible for encouraging or enforcing the implementation of health promoting programs, policies or practices in schools. Studies or arms of trials assessing implementation performed by research staff were excluded.

Types of interventions

We included studies that compared school‐based strategies with the intention of improving the implementation of health‐promoting policies, programs or practices for physical activity, healthy eating, obesity prevention, tobacco use prevention or alcohol use prevention to either 1) other implementation strategies, 2) no implementation strategy or 3) 'usual' practice. For trials that did not describe the comparison conditions, but reported the findings against a comparison group, we assumed that the comparison was usual practice.

To be eligible for inclusion, studies had to include strategies to improve implementation by those involved in the delivery, uptake or use of policies, programs or practices in schools. Strategies could include quality improvement initiatives, education and training, performance feedback, prompts and reminders, implementation resources (e.g. manuals), financial incentives, penalties, communication and social marketing strategies, professional networking, the use of opinion leaders, implementation consensus processes or other strategies. Strategies could be singular or multi‐component and could be directed at individuals, classes or whole schools.

Types of outcome measures

The review examined a range of primary and secondary outcomes of school policy, program or practice implementation. 'Implementation' was defined as the use of strategies to adopt and integrate evidence‐based health interventions and to change practice patterns within specific settings (Glasgow 2012). To be included, outcomes were required to report an action undertaken by a school or school personnel (e.g. proportion of schools implementing canteen services consistent with dietary guidelines or mean number of lessons of teaching curricula implemented). Measures of individual child behaviour (e.g. proportion of children who were moderately or vigorously physically active) were not considered implementation outcomes. Implementation could have occurred at any scale (local, national or international). We included trials reporting only follow‐up data of an implementation outcome (i.e. no baseline data) in two instances: where the trial utilised a randomised design, as baseline values were assumed to have been equivalent (or to differ only due to chance); or where the baseline values of implementation outcomes were assumed to be zero, for example, the implementation of a curriculum resource not available to schools at baseline.
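The rule for outcomes reported at follow‐up only can be written as a small predicate. This is an illustrative sketch only: the class and field names are hypothetical and are not taken from the review's data extraction form.

```python
from dataclasses import dataclass


@dataclass
class ImplementationOutcome:
    """Hypothetical record describing how one implementation outcome was reported."""
    has_baseline_data: bool
    randomised_design: bool
    baseline_assumed_zero: bool = False  # e.g. a resource unavailable to schools at baseline


def follow_up_data_acceptable(outcome: ImplementationOutcome) -> bool:
    """Apply the follow-up-only rule described in the paragraph above.

    Outcomes with baseline data pass this check. Follow-up-only outcomes pass
    when the trial was randomised (baseline assumed equivalent between groups)
    or when baseline implementation can be assumed to be zero.
    """
    if outcome.has_baseline_data:
        return True
    return outcome.randomised_design or outcome.baseline_assumed_zero


# A non-randomised trial with follow-up data only would not pass this check.
non_randomised_follow_up_only = ImplementationOutcome(
    has_baseline_data=False, randomised_design=False
)
```

The predicate covers only this reporting rule; the other eligibility criteria (setting, participants, comparison) would need separate checks.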

Primary outcomes

- Any objectively or subjectively (self‐reported) assessed measure of school policy, program or practice implementation.

Measures relating to successful implementation including uptake, partial/complete uptake (e.g. consistent with protocol/design), or routine use were included. Such data may be obtained from audits of school records, questionnaires or surveys of staff, direct observation or recordings, examination of routinely collected information from government departments (such as compliance with food standards or breaches of department regulations) or other sources.

Secondary outcomes

Data on secondary outcomes were only extracted for measures corresponding to implementation outcomes. For example, in a trial of an intervention targeting physical activity and healthy eating, but where an implementation strategy and implementation outcome data were only reported for healthy eating policies or practices, only data on secondary trial outcomes related to diet (foods or beverages consumed by students or student BMI) were extracted. Secondary outcomes could be measured objectively or subjectively (self‐reported) and included:

- measures of health behaviours or risk factors relevant to policies, programs, or practices being implemented (i.e. diet; physical activity; tobacco or alcohol use; or measures of excessive weight gain);
- any measure of school staff knowledge, skills or attitudes related to the implementation of policies, programs or practices supportive of diet, physical activity, or healthy weight, or tobacco or alcohol use prevention;
- estimates of absolute costs or any assessment of the cost‐effectiveness of strategies to improve implementation of policies, programs or practices in schools; and
- any reported unintended adverse consequences of a strategy to improve implementation of policies, programs or practices in schools; these could include adverse impacts on child health (e.g. unintended changes in other risk factors, injury), school operation or staff attitudes (e.g. impacts on staff motivation or cohesion following implementation), or the displacement of other key programs, curricula or practice.

We summarise data for all relevant risk factors targeted by the review. Where there were differences in published information between peer‐reviewed and grey literature for the same trial, we preferentially used data from peer‐reviewed publications.

Search methods for identification of studies

We performed a comprehensive search for both published and unpublished research studies across a broad range of information sources to reflect the cross‐disciplinary nature of the topic. Articles published in any language were eligible and there were no restrictions regarding article publication dates.

Electronic searches

We searched the following electronic databases:

- Cochrane Library including the Cochrane Central Register of Controlled Trials (CENTRAL) (up to Sept 1st 2016);
- MEDLINE (up to Sept 1st 2016);
- MEDLINE In‐Process & Other Non‐Indexed Citations (up to Sept 1st 2016);
- Embase Classic and Embase (up to Sept 1st 2016);
- PsycINFO (up to Sept 1st 2016);
- Education Resource Information Center (ERIC) (up to Sept 1st 2016);
- Cumulative Index to Nursing and Allied Health Literature (CINAHL) (up to Sept 1st 2016);
- Dissertations and Theses (up to Sept 1st 2016); and
- SCOPUS (up to Sept 1st 2016).

We adapted the MEDLINE search strategy for each database using database‐specific subject headings, where available (Appendix 1). We included filters used in other systematic reviews for research design (Waters 2011), population (Guerra 2014), physical activity and healthy eating (Dobbins 2013; Guerra 2014; Jaime 2009), obesity (Waters 2011), tobacco use prevention (Thomas 2013), and alcohol misuse (Foxcroft 2011). A search filter for intervention (implementation strategies) was developed based on previous reviews (Wolfenden 2016), and common terms in implementation and dissemination research (Rabin 2008).

Searching other resources

We screened reference lists of all included trials for citations of potentially relevant studies and contacted authors of included studies for other potentially relevant trials. We handsearched all publications between July 2011 and July 2016 in the journals Implementation Science and Journal of Translational Behavioral Medicine. We also conducted searches of the WHO International Clinical Trials Registry Platform (ICTRP) (http://apps.who.int/trialsearch/) as well as the US National Institutes of Health registry (https://clinicaltrials.gov). One study identified in these searches which had not been published was listed in the 'Characteristics of ongoing studies' table. We consulted with experts in the field to identify other relevant research. To identify companion papers of identified eligible trials, we also conducted Google Scholar searches of the first 100 citations identified by a search of the trial name or title.

Data collection and analysis

Selection of studies

Initially, one review author (CW) screened the titles and abstracts retrieved from the literature search to exclude duplicate records and clearly ineligible articles (i.e. studies of non‐humans or inappropriate settings). The remaining titles and abstracts were then screened independently by two review authors (AF, AG, LW, NN, RS, RW, SY, or TD). We obtained full texts of all remaining potentially relevant or unclear articles, and two review authors independently reviewed each of these against our inclusion criteria (AF, AG, LW, NN, RS, RW, RH, SY, or TD). We used Google Translate for abstracts or obtained translation from non‐English speaking collaborators. At each stage, disagreements were resolved by discussion between the two review authors and, where required, by consulting a third review author (CW or LW). We recorded reasons for exclusion of studies in the 'Characteristics of excluded studies' table.

Data extraction and management

Two review authors (CW, NN, PB, RS, RW, SY, RH, BP or TD) independently extracted data using a data extraction form adapted from the Cochrane Public Health Group Methods Manual (CPHG 2011). Any disagreements in data extraction were resolved by discussion or by consulting a third author (LW), where required.

Where key data were missing from the study reports, we attempted to contact the authors to obtain the information. Where multiple reports of the same trial were published, we extracted data from those deemed the most applicable. We extracted data comprehensively to cover all relevant outcomes and methods reported across studies.

We extracted and reported the following study characteristics:

- information regarding study eligibility as well as the study design, date of publication, school type, country, participant/school demographic/socioeconomic characteristics, number of experimental conditions, as well as information to allow assessment of risk of study bias;

- information describing the characteristics of the implementation strategy, including the duration, and intervention (policy, program, practice), the theoretical underpinning of the strategy (if noted in the study), information to allow classification against the EPOC Group 'Taxonomy of Interventions', as well as data describing consistency of the execution of the strategy with a planned delivery protocol (EPOC 2015);

- information on trial primary and secondary outcomes, including the data collection method, validity of measures used, effect size and measures of outcome variability, costs and adverse outcomes; and

- information on the source(s) of research funding and potential conflicts of interest.

Assessment of risk of bias in included studies

Assessment of risk of bias considered study design and reporting characteristics relevant to the implementation outcomes of the included studies only. For included trials, we used Cochrane's tool for assessing risk of bias, which includes assessments based on domains (selection bias, performance bias, detection bias, attrition bias and reporting bias) (Higgins 2011). We also included additional criteria for cluster‐RCTs including 'recruitment to cluster', 'baseline imbalance', 'loss of clusters', 'incorrect analysis', 'contamination' and 'compatibility with individually randomised trials'. We included an additional criterion, 'potential confounding', for the assessment of the risk of bias in non‐randomised trial designs. We assessed studies as having 'low', 'high', or 'unclear' risk of bias in accordance with the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011).

Two pairs of authors (FT, TCM and AG, AF) assessed risk of bias independently for each study. Any disagreement was resolved by discussion, or if required, by involving an additional author (LW).

Measures of treatment effect

Considerable differences in study measures and primary and secondary outcomes reported by included studies precluded the use of summary statistics to describe treatment effects. As such, we synthesised study findings narratively based on the outcomes reported in the included trials. For dichotomous implementation outcomes, these included absolute differences in the proportion of schools or teachers implementing a policy, practice or program. Continuous outcomes were reported as absolute, non‐standardised differences (mean difference) for measures including an implementation score, the percentage of policy or program implementation, or the frequency or time in which a policy, practice or program implementation occurred.

Unit of analysis issues

We examined cluster trials for unit of analysis errors and identified trials with such errors in the 'Risk of bias' summary.

Dealing with missing data

When outcomes, methods, or results of the studies were missing or unclear, we contacted the corresponding authors of the published trial to supply the data. Any information provided was incorporated into the review as appropriate. Any evidence of potential selective reporting or incomplete reporting of trial data was documented in the 'Risk of bias' tables.

Assessment of heterogeneity

We were unable to examine heterogeneity quantitatively through the use of the I² statistic or forest plots, given considerable differences in the implementation strategies, outcomes, measures and comparators that precluded pooling of data. Clinical heterogeneity of the included studies was therefore described narratively.

Assessment of reporting biases

We compared published reports with information in trial registers and protocols to assess reporting bias where such information was available. Where we suspected reporting bias (via assessment of risk of bias in included studies), we attempted to contact study authors and ask them to provide missing outcome data. Instances of potential reporting bias were recorded in the 'Risk of bias' summary.

Data synthesis

Primarily, trial heterogeneity precluded meta‐analysis. The target population in trials varied, including teaching staff, school food service staff and principals. No two trials employed the same implementation strategies. Included studies compared implementation strategies with a different strategy, minimal support control or usual practice. Substantial heterogeneity was particularly evident for trial outcomes in terms of assessment methods and measures, which often occurred at multiple levels (at a school level and/or teacher/class level). The availability of data to pool was further limited by reporting of dichotomous and continuous outcomes which were not able to be combined. Further, the review identified studies with randomised and non‐randomised designs. Pooling data across such trial designs is not recommended (Higgins 2011). Finally, meta‐analysis with a small number of studies (< five) is problematic and can produce imprecise estimates of effect given the underlying assumptions of random‐effects models (Higgins 2008).

As such, and consistent with the approach of a previous Cochrane review of implementation strategies in the childcare setting (Wolfenden 2016), we narratively synthesised trial findings based on the outcomes reported. As trial heterogeneity precluded meta‐analysis, we described the effects of interventions for individual trials by reporting the absolute effect size of the primary outcome measure for policy, practice or program implementation for each study. We focused on specified primary outcomes where available because the intervention (implementation strategy) was designed to directly influence this outcome, the trial should have been powered to detect meaningful effects on these measures, and pre‐specified primary (as opposed to secondary) outcomes are considered most appropriate for hypothesis testing. We calculated the effect size by subtracting the change from baseline on the primary implementation outcome for the control (or comparison) group from the change from baseline in the experimental or intervention group. For trials with multiple follow‐up periods, we used data from the final follow‐up period reported. If data to enable calculation of change from baseline were unavailable, we used the differences between groups post‐intervention. Where there were two or more primary implementation outcome measures, we used the median effect size of the primary outcomes and also reported the range. Where the primary outcome measure was not identified by the study authors in the published manuscripts, we used the implementation outcome on which the trial sample size calculation was based or, in its absence, we took the median effect size of all measures judged to be implementation outcomes reported in a manuscript and also reported the range.
Such an approach was previously used in the Cochrane review of the effects of audit and feedback on professional practices published by the Cochrane EPOC Group (Ivers 2012), and in our previous review of implementation strategies in the childcare setting (Wolfenden 2016). In instances where subscales of an overall implementation score were reported in addition to a total scale score, we used the total score as the primary outcome to provide a more comprehensive measure of implementation. We reverse‐scored implementation measures that did not represent an improvement (e.g. the proportion of schools without a healthy menu) in the calculation of median effects. In instances where both self‐reported and observed data assessed the same implementation outcome, observational measures were extracted in place of self‐report, given that observation represents a more objective measure of implementation.
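The effect size calculation described above can be sketched in a few lines of Python. This is a minimal illustration only, not the review authors' analysis code: the function names, the outcome names and all numbers are hypothetical, and the values are assumed to be percentages of schools implementing a practice.

```python
from statistics import median


def change_difference(int_base, int_follow, ctl_base, ctl_follow):
    """Change from baseline in the intervention group minus change in the control group."""
    return (int_follow - int_base) - (ctl_follow - ctl_base)


def post_difference(int_follow, ctl_follow):
    """Fallback when baseline data are unavailable: between-group difference at follow-up."""
    return int_follow - ctl_follow


def reverse_score(effect):
    """Flip the sign for measures where a lower value means better implementation."""
    return -effect


def summarise_within_trial(effects):
    """Median and range across several primary implementation outcomes of one trial."""
    return median(effects), min(effects), max(effects)


# Hypothetical data: percentage of schools implementing each practice.
menu_labelling = change_difference(40, 70, 42, 50)   # (70 - 40) - (50 - 42)
fruit_break = change_difference(20, 35, 22, 27)      # (35 - 20) - (27 - 22)
# Hypothetical "schools without a healthy menu" measure, reverse-scored so that
# a positive value indicates improvement.
no_healthy_menu = reverse_score(change_difference(60, 30, 58, 50))

trial_median, trial_min, trial_max = summarise_within_trial(
    [menu_labelling, fruit_break, no_healthy_menu]
)
```

With these made-up inputs, the three outcome effects are 22, 10 and 22 percentage points, so the trial would be summarised by a median of 22 with a range of 10 to 22.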

We present the effects of interventions grouped according to the outcome data (continuous or dichotomous) and implementation measure reported. For individual studies where there is no single primary implementation outcome, we describe the median as well as report the range of effects across all comparable measures (description of within‐trial effects). To characterise the effects of interventions across studies (description of between‐study effects), we report an unadjusted median and range of the absolute effects across included trials. The median and range for between‐study effects were calculated using the absolute effect size of the primary implementation outcome of individual trials, or the median of such measures where a single primary outcome was not reported. Such synthesis is intended for descriptive, rather than interpretative purposes, as it does not consider the trial characteristics (e.g. variance) for which trial weights are applied in formal meta‐analysis.
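As a rough illustration of the between‐study description, the unadjusted median and range can be computed directly from one absolute effect per trial. The sketch below assumes hypothetical trial labels and effect sizes; it applies no weighting by variance or sample size, which is why the result is descriptive only.

```python
from statistics import median


def between_study_summary(trial_effects):
    """Unadjusted median and range of one absolute effect size per trial.

    trial_effects maps a trial label to its primary implementation effect
    (or to the within-trial median where no single primary outcome exists).
    """
    values = list(trial_effects.values())
    return {
        "n_trials": len(values),
        "median": median(values),
        "min": min(values),
        "max": max(values),
    }


# Hypothetical effects in percentage points; not data from the included trials.
effects = {
    "Trial A": 5,
    "Trial B": 12,
    "Trial C": 19,
    "Trial D": 8,
    "Trial E": 30,
}

summary = between_study_summary(effects)
```

Because each trial contributes equally regardless of its size or precision, a single small trial with a large effect widens the range as much as a large trial would.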

A 'Summary of findings' table was generated to present the key findings of included studies (Summary of findings for the main comparison), based on recommendations of the Cochrane EPOC group and the Cochrane Handbook for Systematic Reviews of Interventions. It included a list of primary and secondary outcomes in the review, a description of the intervention effect, the number of participants and studies addressing the outcome, and a grade for the overall quality of evidence. We used the GRADE system to assess the quality of the body of evidence through consideration of study limitations, consistency of effect, imprecision, indirectness and publication bias. Two review authors assessed the overall quality of evidence using the GRADE system (LW + RH) and consulted a third review author (CW) where consensus on any issues arising could not be reached. The quality of the body of evidence for each individual outcome was graded accordingly from 'High' to 'Very Low' in accordance with the Cochrane Handbook for Systematic Reviews of Interventions (Higgins 2011). Given the variability in the denominator for various implementation outcomes across and within included trials, we report the total number of schools providing data in the 'Summary of findings' table, because all trials allocated schools to experimental groups.

Subgroup analysis and investigation of heterogeneity

Quantitative examination of heterogeneity could not be conducted as we were unable to pool outcome data from trials. However, clinical and methodological heterogeneity of studies is described narratively based on participant, intervention, outcome and study design characteristics. In order to investigate the impact of implementation strategies in improving implementation of policies, practices or programs at scale (defined as targeting implementation in 50 or more schools), we performed a narrative synthesis on a subgroup of studies where implementation occurred at scale.

Sensitivity analysis

We did not carry out a sensitivity analysis by removing studies with a high risk of bias from the meta‐analysis as no quantitative synthesis was conducted.

Results

Description of studies

See Characteristics of included studies; Characteristics of excluded studies; Characteristics of ongoing studies.

Results of the search

Full details of each of the included trials are presented in the Characteristics of included studies table. The reasons for excluding trials are reported in the Characteristics of excluded studies table. One ongoing study was identified and details are presented in the Characteristics of ongoing studies table. The electronic search, conducted to 31 August 2016, yielded 22,056 citations (Figure 1). We identified an additional 3125 records from handsearching key journals, checking reference lists of included trials and Google Scholar searches. Through our contact with authors of included trials or experts in the field, we identified two additional articles. One was in press and the other was published later than our search dates; both contained eligible trials. The information obtained through contact with trial authors was incorporated into the Characteristics of included studies table and used in assessments of risk of bias and trial outcomes. Following screening of titles and abstracts, we obtained the full texts of 385 manuscripts for further review, of which we included 81 manuscripts describing 27 individual trials.

Figure 1. Study flow diagram.

Included studies

Types of studies

Of the 27 included trials, 18 were conducted in the USA (Alaimo 2015; Cunningham‐Sabo 2003; Delk 2014; French 2004; Gingiss 2006; Heath 2002; Hoelscher 2010; Lytle 2006; McCormick 1995; Mobley 2012; Perry 1997; Perry 2004; Sallis 1997; Saunders 2006; Simons‐Morton 1988; Story 2000; Whatley Blum 2007; Young 2008), with the remaining trials undertaken in India (Mathur 2016; Saraf 2015), Australia (Nathan 2012; Nathan 2016; Sutherland 2017; Wolfenden 2017; Yoong 2016), Canada (Naylor 2006), and South Africa (De Villiers 2015). Trials were conducted between 1985 (Simons‐Morton 1988) and 2015 (Nathan 2016). In the assessment of implementation outcomes, eight studies employed randomised controlled trial (RCT) designs (Cunningham‐Sabo 2003; De Villiers 2015; Lytle 2006; Mobley 2012; Nathan 2016; Saunders 2006; Wolfenden 2017; Yoong 2016), 11 used cluster‐RCT designs (Delk 2014; French 2004; Mathur 2016; McCormick 1995; Naylor 2006; Perry 1997; Perry 2004; Saraf 2015; Story 2000; Sutherland 2017; Young 2008), and eight were conducted using non‐RCT designs. Trial designs used to evaluate implementation outcomes differed at times from those used to assess behavioural trial outcomes. For example, Saunders and colleagues assessed school‐level implementation outcomes (RCT design) as well as the impact of intervention implementation on the outcomes of individual students within schools (cluster‐RCT). There was considerable variability in the types of participants, implementation strategies and outcomes reported.

Participants

Trials recruited samples of between four (Simons‐Morton 1988), and 828 (Nathan 2012) schools. In four trials, 50 or more schools were allocated to the intervention group to receive implementation support (Alaimo 2015; Gingiss 2006; Nathan 2012; Perry 1997). The majority of trials were conducted in elementary (or primary) schools catering for children between five years and 12 years (Cunningham‐Sabo 2003; De Villiers 2015; Heath 2002; Hoelscher 2010; Nathan 2012; Nathan 2016; Naylor 2006; Perry 1997; Perry 2004; Sallis 1997; Simons‐Morton 1988; Story 2000; Sutherland 2017; Wolfenden 2017; Yoong 2016). Six were conducted in middle schools where children are aged between 11 years and 14 years (Alaimo 2015; Delk 2014; Lytle 2006; Mobley 2012; Saraf 2015; Young 2008), two in both middle and high schools (Gingiss 2006; McCormick 1995), and four were conducted in high schools only typically catering for children aged 13 or 14 years to 18 years of age (French 2004; Mathur 2016; Saunders 2006; Whatley Blum 2007). A number of trials reported they were conducted in low‐income regions or in schools with students from predominantly low‐income households (Alaimo 2015; De Villiers 2015; Heath 2002; Hoelscher 2010; Lytle 2006; Mathur 2016; Mobley 2012; Nathan 2012; Sutherland 2017).

Interventions

There was considerable heterogeneity in the implementation strategies employed. All trials examined multi‐strategic implementation strategies, with the most common implementation strategies being educational materials, educational outreach and educational meetings. No two trials examined the same combinations of implementation strategies (Table 1). The EPOC taxonomy descriptors of the implementation strategies employed by included trials are described in Table 2. In the trial reported by McCormick and colleagues, the duration of implementation support ranged from four months to more than four years. Seven trials did not report the use of any theory or theoretical frameworks. Eight trials used explicit implementation or dissemination theories and frameworks including the Charter and Jones Framework (institutional commitment, structural context, role performance, learning activities) (Simons‐Morton 1988), the Theoretical Domains Framework (TDF) (Nathan 2016; Wolfenden 2017), consolidated frameworks for practice change (Nathan 2012), social‐ecological theory (Sutherland 2017), diffusion of innovation and/or organisational change (McCormick 1995; Young 2008), and control theory (Yoong 2016). Other trials reported the use of operant learning theory (Young 2008), Social Contextual Model of Health Behavior Change (Mathur 2016), social‐ecological models (De Villiers 2015; Hoelscher 2010; Mobley 2012; Naylor 2006; Saunders 2006; Young 2008), social cognitive theory (Hoelscher 2010; Lytle 2006; Perry 2004; Story 2000; Young 2008), and social learning theory and/or organisational change (Cunningham‐Sabo 2003; Heath 2002; Perry 1997; Story 2000), often in the description of intervention content rather than as a framework to guide an implementation strategy.

Table 1. Implementation strategies used in each included trial. Columns: Trial; Audit and feedback; Clinical practice guidelines; Continuous quality improvements; Distribution of supplies; External funding; Education games; Education materials; Education meeting; Education outreach visits; Inter‐professional education; Length of consultation; Local consensus process; Local opinion leader; Managerial supervision; Monitoring performance of delivery; Pay for performance; Tailored intervention; The use of communication technology; Other. Each of the 27 rows marks with an X the strategies used by one trial; the trial names and the column positions of the marks are not recoverable here.

Table 2. EPOC taxonomy descriptors of the implementation strategies.

| EPOC subcategory | Definition |
| --- | --- |
| Audit and feedback | A summary of health workers’ performance over a specified period of time, given to them in a written, electronic or verbal format. The summary may include recommendations for clinical action. |
| Clinical practice guidelines | 'Clinical guidelines are systematically developed statements to assist healthcare providers and patients to decide on appropriate health care for specific clinical circumstances' (US IOM). |
| Educational materials | Distribution to individuals, or groups, of educational materials to support clinical care, i.e. any intervention in which knowledge is distributed. For example this may be facilitated by the Internet, learning critical appraisal skills; skills for electronic retrieval of information, diagnostic formulation; question formulation. |
| Educational meetings | Courses, workshops, conferences or other educational meetings. |
| Educational outreach visits, or academic detailing | Personal visits by a trained person to health workers in their own settings, to provide information with the aim of changing practice. |
| External funding | Financial contributions such as donations, loans, etc. from public or private entities from outside the national or local health financing system. |
| Inter‐professional education | Continuing education for health professionals that involves more than one profession in joint, interactive learning. |
| Length of consultation | Changes in the length of consultations. |
| Local consensus processes | Formal or informal local consensus processes, for example agreeing a clinical protocol to manage a patient group, adapting a guideline for a local health system or promoting the implementation of guidelines. |
| Local opinion leaders | The identification and use of identifiable local opinion leaders to promote good clinical practice. |
| Managerial supervision | Routine supervision visits by health staff. |
| Monitoring the performance of the delivery of healthcare | Monitoring of health services by individuals or healthcare organisations, for example by comparing with an external standard. |
| Other | Strategies were classified as other if they did not clearly fit within the standard subcategories. |
| Pay for performance – target payments | Transfer of money or material goods to healthcare providers conditional on taking a measurable action or achieving a predetermined performance target, for example incentives for lay health workers. |
| Procurement and distribution of supplies | Systems for procuring and distributing drugs or other supplies. |
| Tailored interventions | Interventions to change practice that are selected based on an assessment of barriers to change, for example through interviews or surveys. |
| The use of information and communication technology | Technology based methods to transfer healthcare information and support the delivery of care. |

Fifteen trials tested strategies to implement healthy eating policies, programs or practices (Alaimo 2015; Cunningham‐Sabo 2003; De Villiers 2015; French 2004; Heath 2002; Lytle 2006; Mobley 2012; Nathan 2012; Nathan 2016; Perry 2004; Simons‐Morton 1988; Story 2000; Whatley Blum 2007; Wolfenden 2017; Yoong 2016), six tested strategies targeting physical activity policies or practices (Delk 2014; Naylor 2006; Sallis 1997; Saunders 2006; Sutherland 2017; Young 2008), and three targeted tobacco policies and practices (Gingiss 2006; Mathur 2016; McCormick 1995). Three trials targeted a combination of health behaviours, with two examining implementation of healthy eating and physical activity policies or practices (Hoelscher 2010; Perry 1997), and one trial examining policies or practices to improve implementation of tobacco control, healthy eating and physical activity initiatives (Saraf 2015). None of the included trials sought to increase the implementation of interventions to delay initiation or reduce the consumption of alcohol.

Outcomes

Implementation outcome follow‐up data were collected six months post‐baseline in one trial (Sutherland 2017) and at 12 to 14 months in 11 trials (Alaimo 2015; Hoelscher 2010; Mathur 2016; Nathan 2016; Saraf 2015; Saunders 2006; Simons‐Morton 1988; Story 2000; Whatley Blum 2007; Wolfenden 2017; Yoong 2016), while another seven trials collected follow‐up data between 16 months and two years post‐baseline (Cunningham‐Sabo 2003; French 2004; Gingiss 2006; Nathan 2012; Naylor 2006; Perry 2004; Young 2008), with the remaining eight trials collecting data between two and a half and four years post‐baseline (Delk 2014; De Villiers 2015; Heath 2002; Lytle 2006; McCormick 1995; Mobley 2012; Perry 1997; Sallis 1997). Four trials used observation‐based measures to assess implementation outcomes (Perry 2004; Sallis 1997; Story 2000; Whatley Blum 2007). A further three trials used school records or documents (Mobley 2012; Nathan 2016; Wolfenden 2017), while one trial used a combination of observation methods and school records (Lytle 2006). In contrast, 13 trials relied on instruments to assess reported policy or practice implementation including surveys, questionnaires, semi‐structured interviews or teacher/staff completion of log‐books (Alaimo 2015; Cunningham‐Sabo 2003; De Villiers 2015; Delk 2014; Gingiss 2006; Heath 2002; Hoelscher 2010; Mathur 2016; McCormick 1995; Nathan 2012; Naylor 2006; Saunders 2006; Simons‐Morton 1988). Only one trial using these measures reported that the instrument had been validated (Nathan 2012). A further six trials used both objective (direct observation or school records) and self‐report (staff completion of log‐books, surveys, questionnaires or interview) techniques for implementation outcome assessment (Perry 1997; French 2004; Saraf 2015; Sutherland 2017; Yoong 2016; Young 2008). Only one of these trials reported that the self‐report measures had been validated (Young 2008).

Eight trials assessed student physical activity (Hoelscher 2010; Naylor 2006; Perry 1997; Sallis 1997; Saraf 2015; Saunders 2006; Sutherland 2017; Young 2008). Physical activity behaviours were assessed using accelerometer (Sallis 1997; Sutherland 2017; Young 2008), pedometer (Naylor 2006), student questionnaire (Naylor 2006; Perry 1997; Saraf 2015; Saunders 2006), observations (Perry 1997; Hoelscher 2010; Sallis 1997) and fitness tests (Perry 1997; Naylor 2006; Sallis 1997). Fourteen trials assessed child nutritional intake or food selection using questionnaires (Alaimo 2015; Cunningham‐Sabo 2003; De Villiers 2015; French 2004; Hoelscher 2010; Lytle 2006; Mobley 2012; Perry 1997; Saraf 2015; Simons‐Morton 1988; Story 2000; Whatley Blum 2007), observation (Cunningham‐Sabo 2003; Perry 2004; Story 2000; Wolfenden 2017), or sales data (French 2004). Anthropometric measures, assessed objectively, were collected from participants in nine trials (Cunningham‐Sabo 2003; Heath 2002; Hoelscher 2010; Mobley 2012; Naylor 2006; Perry 1997; Sallis 1997; Saunders 2006; Young 2008). Tobacco use was assessed in two trials using questionnaires (Saraf 2015) and observation (Mathur 2016). No trials assessed student alcohol use. Two trials included a measure that was specified in the study methods as an assessment of potential unintended adverse effects (Mobley 2012; Wolfenden 2017), and two trials reported cost analyses (Heath 2002; Mobley 2012). Three trials reported on the knowledge, skills or attitudes of school staff regarding implementation (Delk 2014; Gingiss 2006; McCormick 1995).

Types of comparisons

Most trials (n = 22) compared implementation strategies against usual practice or waiting‐list control (Alaimo 2015; Cunningham‐Sabo 2003; French 2004; Gingiss 2006; Heath 2002; Lytle 2006; Mathur 2016; Mobley 2012; Nathan 2016; Naylor 2006; Perry 1997; Perry 2004; Sallis 1997; Saraf 2015; Saunders 2006; Simons‐Morton 1988; Story 2000; Sutherland 2017; Whatley Blum 2007; Wolfenden 2017; Young 2008; Yoong 2016), while two compared against different interventions (Delk 2014; Hoelscher 2010) and three trials used a minimal support comparison group (De Villiers 2015; McCormick 1995; Nathan 2012). Among trials using a minimal support control group, all schools in the study by Nathan and colleagues, including those allocated to control, could have received support from a non‐government agency to assist with implementation of a fruit and vegetable break if they sought out such support. In the trial by McCormick and colleagues, control schools received curricula in the mail and technical assistance upon request. Finally, in the trial by De Villiers and colleagues, principals at schools in the control arm received a booklet with “tips” for healthy schools and a guide to resources that could be accessed to assist in creating a healthier school environment. Seven trials did not describe the comparison condition and so we assumed that the comparison was usual practice (Cunningham‐Sabo 2003; Gingiss 2006; Heath 2002; Saraf 2015; Simons‐Morton 1988; Story 2000; Young 2008).

Five trials included more than two trial arms (Alaimo 2015; Delk 2014; Naylor 2006; Perry 1997; Sallis 1997). The School Nutrition Advances Kids (SNAK) study included four conditions: three interventions and one control (Alaimo 2015). The three intervention conditions all sought to improve the implementation of nutrition policies and practices of schools. In all intervention conditions, implementation support included local consensus processes (convened by a coordinated school health team), educational outreach (visit from a trained facilitator), external funding ($1000 to implement aspects of the intervention) and tailored intervention (individualised action plans). In the second intervention group, such support was more intensive, for example, more frequent contact with the trained facilitator and additional funding ($400) for implementation. In the third group, the more intensive implementation support was also offered but schools were asked to implement additional changes to their cafeteria à la carte lines and were provided with a further $1500 (Alaimo 2015). The implementation outcomes reported in the paper combine all intervention conditions into a single group for comparison against the control group, and are reported accordingly in this review.

The Action Schools! British Columbia (BC) program randomised schools into three conditions (Naylor 2006). Two groups received implementation support. The implementation strategies utilised were identical in these two intervention groups; however, in one group post‐training support was provided directly to school teachers via a school facilitator, while in the second group post‐training support was provided to a designated champion who was asked to activate and support their teacher colleagues (Naylor 2006). The third group served as a usual practice control. For this trial, we combined intervention groups by calculating, relative to the control, the unadjusted median effect (and range) across intervention conditions.

In the Child and Adolescent Trial for Cardiovascular Health (CATCH) trial, schools were randomly assigned to either an intervention condition or a control condition (Perry 1997). Of the 56 schools assigned to intervention, 28 schools were randomly assigned to an intervention arm targeting the same implementation outcomes and including the same implementation strategies, but were also asked to implement programs targeting families. Implementation data for the two intervention groups were combined in the reporting of the paper and the combined data used in this review.

The Sports, Play, and Active Recreation for Kids (SPARK) trial randomised schools to two intervention and one control condition (Sallis 1997). Data were only extracted for two of the three assigned groups; the control group where implementation support was not provided and an experimental group where implementation support was provided to usual teaching staff to implement the intervention. The third group, where physical activity practices were implemented by external specialist physical education teachers hired by the research team was excluded as per review inclusion criteria.

Finally, in the trial by Delk and colleagues, 30 schools were randomised into three conditions (Delk 2014). As each condition contained different implementation strategies, data were extracted and reported across all conditions.

Other study design characteristics

In a number of trials, decisions regarding study inclusion and data extraction were particularly complex. The SPARK trial included post‐intervention implementation outcome data only; however, it used random assignment for six of the seven included schools, randomly allocating them to one of three conditions (Sallis 1997). The remaining school was allocated by the researchers to the control group. Despite the lack of baseline implementation data, the trial was retained in the review given the use of random assignment and the similarity of other trial sample characteristics. The Texas Tobacco Initiative was also a non‐randomised trial that did not report baseline data for the implementation outcomes, but was included because the authors state that there were no differences between groups at baseline on these measures (Gingiss 2006). In addition, for the SPARK program, no single primary implementation outcome was reported. Implementation outcomes included measures of lesson context (management, general knowledge, fitness knowledge, fitness activity, skill drills and game play), measures of teacher behaviour (promotes fitness, demonstrates fitness, instructs generally, manages, observes and off task), as well as measures of lesson duration and frequency (Sallis 1997). However, the desired quantity or direction of effect was specified in the published reports only for lesson duration and frequency (three lessons per week of 30 minutes duration each). While improving lesson context and teacher behaviour was an objective of the support strategy, the desired direction of effect for each measure was not clear. For example, it was unclear whether teachers were supported to spend less time managing or observing children during class and more time promoting fitness. As such, only frequency and duration of lessons were extracted as outcomes for this trial.
Identical measures of lesson context were also reported in the Coordinated Approach to Child Health (CATCH) project (Perry 1997), as well as the El Paso CATCH program (Heath 2002), and were similarly excluded.

A variety of outcomes pertaining to program implementation were reported across the published reports of the CATCH intervention (Perry 1997). At times there was inconsistency in the reported key implementation policies and practices targeted by the program. Given this, implementation outcome data were extracted from the study published by Perry and colleagues as the objective of this paper was specifically to report on program implementation and measures including intervention 'fidelity'. The median effects of these outcomes are reported as no single primary outcome was identified.

Finally, in the Lifestyle Education for Activity Program (LEAP) trial (Saunders 2006), implementation of targeted policies and practices in the experimental group was presented in subgroups of 'high' and 'low' implementers and could not be combined into a single group. As such, between‐group effect size estimates could not be reported for the outcomes of this trial.

Excluded studies

Following screening of titles and abstracts, we obtained the full texts of 385 papers for further assessment of eligibility (Figure 1). Of these, 305 papers were considered ineligible. The primary reasons for exclusion were inappropriate participants (n = 17), intervention (n = 7), comparator (n = 30) and outcomes (n = 233). Studies were excluded based on 'inappropriate outcomes' if they: did not report any implementation outcomes; did not report implementation outcomes for both intervention and control groups; or did not report between‐group differences in implementation outcomes. We also excluded 11 papers that did not report the results of a trial, and a further seven studies that were non‐randomised and did not report comparability of implementation outcomes between groups at baseline (i.e. it could not be assumed that differences between groups were zero) (Donnelly 1996; Harvey‐Berino 1998; Hoelscher 2003; Hoelscher 2004; Kelder 2003; O’Brien 2010; Osganian 2003).
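As a quick consistency check, the exclusion counts given above can be tallied against the stated total. The sketch below assumes the six categories are mutually exclusive, which the text implies but does not state explicitly; the dictionary keys are shorthand labels introduced here for illustration.

```python
# Counts as reported in the text above.
full_texts_assessed = 385

exclusions = {
    "inappropriate participants": 17,
    "inappropriate intervention": 7,
    "inappropriate comparator": 30,
    "inappropriate outcomes": 233,
    "did not report the results of a trial": 11,
    "non-randomised, no baseline comparability": 7,
}

# Sum the categories and derive the number of papers not excluded.
total_excluded = sum(exclusions.values())
papers_remaining = full_texts_assessed - total_excluded

print(f"Excluded: {total_excluded}; remaining: {papers_remaining}")
```

Under that assumption the categories sum to the 305 ineligible papers reported, leaving 80 of the 385 full texts.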

Risk of bias in included studies

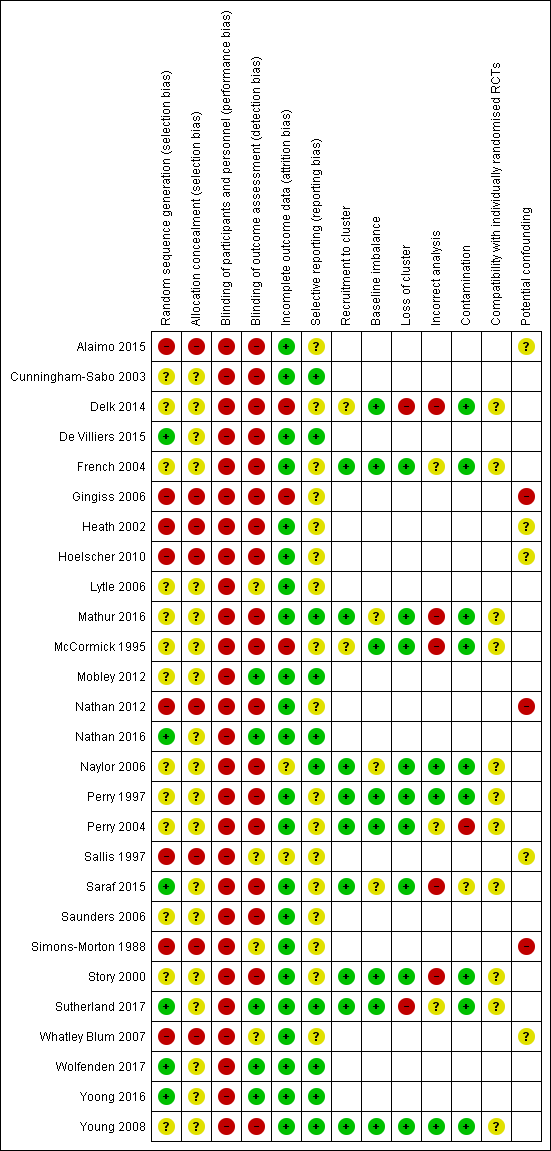

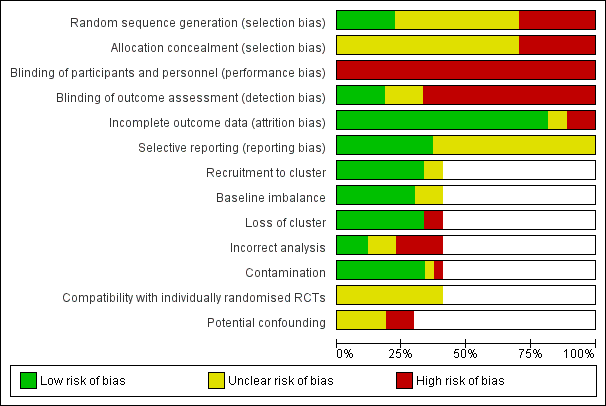

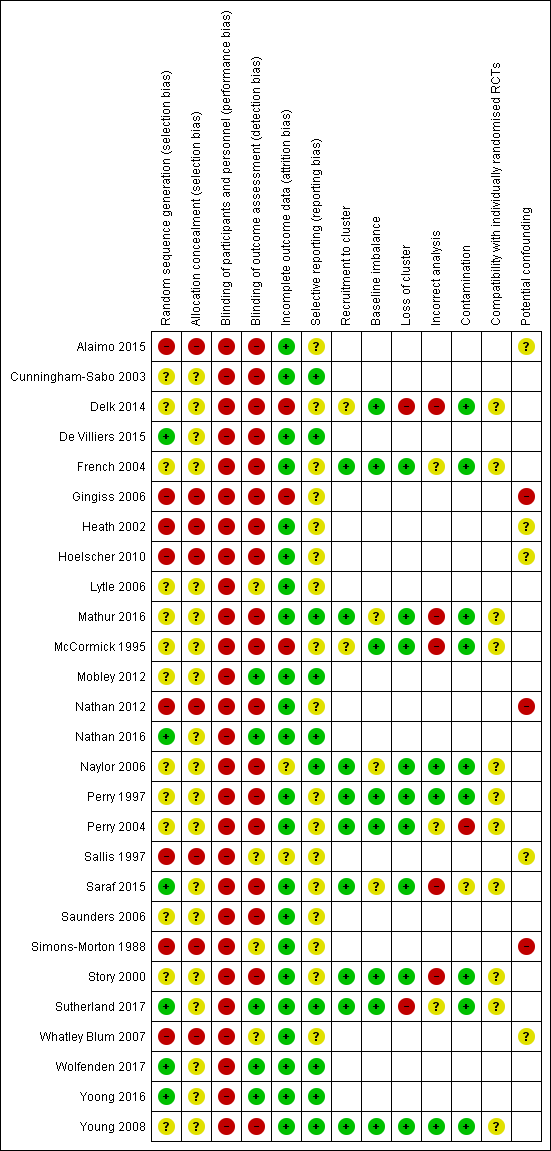

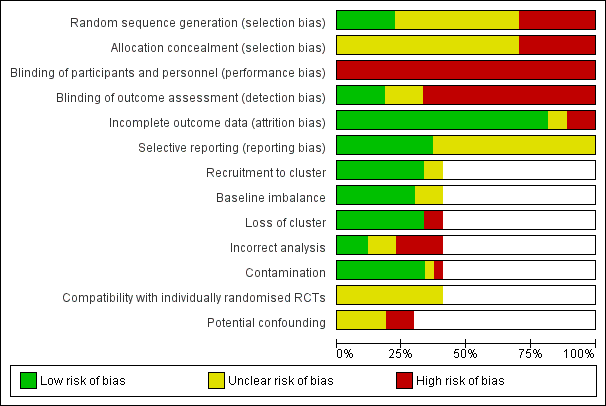

Assessment of risk of bias considered study design and reporting characteristics relevant to the implementation outcomes of the included studies (Figure 2; Figure 3).

Risk of bias summary: review authors' judgements about each risk of bias item for each included study.

Risk of bias graph: review authors' judgements about each risk of bias item presented as percentages across all included studies.

Allocation

Risk of selection bias differed across the 27 trials. All eight non‐randomised trials were considered to have a high risk of selection bias for both random sequence generation and concealment of allocation (Alaimo 2015; Gingiss 2006; Heath 2002; Hoelscher 2010; Nathan 2012; Sallis 1997; Simons‐Morton 1988; Whatley Blum 2007). Of the 11 trials with cluster‐RCT designs, only two were considered low risk for random sequence generation (Saraf 2015; Sutherland 2017), using the drawing of lots or a computerised random number function to determine allocation to intervention or control groups. Four of the eight trials using RCT designs were considered low risk for random sequence generation (De Villiers 2015; Nathan 2016; Wolfenden 2017; Yoong 2016). The risk of bias for allocation concealment was unclear for all RCTs (Cunningham‐Sabo 2003; De Villiers 2015; Lytle 2006; Mobley 2012; Nathan 2016; Saunders 2006; Wolfenden 2017; Yoong 2016) and cluster‐RCTs (Delk 2014; French 2004; Mathur 2016; McCormick 1995; Nathan 2016; Naylor 2006; Perry 1997; Perry 2004; Saraf 2015; Story 2000; Young 2008).

Blinding

All 27 studies were considered to have a high risk of performance bias, because participants and research personnel were not blind to group allocation. Only four studies had a low risk of bias for implementation outcome assessment, as this was conducted by staff who were blind to group allocation (Mobley 2012; Nathan 2016; Wolfenden 2017; Yoong 2016). Of the remaining 23 studies, detection bias was high for 17 studies, primarily due to the use of self‐report measures (Cunningham‐Sabo 2003; Delk 2014; De Villiers 2015; French 2004; Gingiss 2006; Heath 2002; Hoelscher 2010; Mathur 2016; McCormick 1995; Nathan 2012; Naylor 2006; Perry 1997; Perry 2004; Saraf 2015; Saunders 2006; Story 2000; Young 2008). For three studies, the risk of detection bias was unclear (Lytle 2006; Sallis 1997; Whatley Blum 2007), and for the remaining three studies, the risk of detection bias was high, low or unclear across one or more outcome measures (Alaimo 2015; Simons‐Morton 1988; Sutherland 2017).

Incomplete outcome data

For the majority of studies, the risk of attrition bias was low, as either all or most schools were still participating in the study at follow‐up and their data were included in the analyses. Two studies had a high risk of attrition bias (Delk 2014; Gingiss 2006). In particular, Gingiss and colleagues reported that 25 (19%) schools were lost for the Principal survey and 50 (37%) schools were lost for the Health Coordinator survey. For the remaining studies, the risk of attrition bias was high, low or unclear for some (McCormick 1995; Sutherland 2017), or unclear for all (Naylor 2006; Sallis 1997), of the reported outcome data.

Selective reporting

Seventeen trials did not have a published protocol paper or trial registration record and therefore it was unclear whether reporting bias had occurred. The risk of reporting bias was low for the remaining 10 studies as protocols, design papers, or reports were available, and all a priori determined outcomes were reported (Cunningham‐Sabo 2003; De Villiers 2015; Mathur 2016; Mobley 2012; Nathan 2016; Naylor 2006; Sutherland 2017; Wolfenden 2017; Yoong 2016; Young 2008).

Other potential sources of bias

Eleven studies used a cluster‐RCT design (Delk 2014; French 2004; Mathur 2016; McCormick 1995; Naylor 2006; Perry 1997; Perry 2004; Saraf 2015; Story 2000; Sutherland 2017; Young 2008). We therefore assessed the potential risk of additional biases for this group. For the potential risk of recruitment (to cluster) bias, two studies had an unclear risk (Delk 2014; McCormick 1995), while nine studies were low risk as randomisation to groups occurred either post‐recruitment or post‐baseline assessment (French 2004; Mathur 2016; Naylor 2006; Perry 1997; Perry 2004; Saraf 2015; Story 2000; Sutherland 2017; Young 2008). Regarding risk of bias due to baseline imbalances, three studies were at unclear risk while the remaining eight studies had a low risk due to the random allocation of schools to experimental groups, stratification by school characteristics, or adjustments for baseline differences being made within the analyses (Delk 2014; French 2004; McCormick 1995; Perry 1997; Perry 2004; Story 2000; Sutherland 2017; Young 2008). All studies except Delk 2014 and Sutherland 2017 had a low risk for loss of clusters. Only three studies had a low risk for incorrect analysis, as the appropriate statistical analysis was undertaken to allow for clustering within groups (Naylor 2006; Perry 1997; Young 2008). Five studies were judged as having a high risk for incorrect analysis (Delk 2014; Mathur 2016; McCormick 1995; Saraf 2015; Story 2000), while for three studies the analysis performed was unclear (French 2004; Perry 2004; Sutherland 2017). The risk of contamination was judged as high for one trial (Perry 2004). All 11 cluster‐RCTs were at unclear risk for compatibility with individually‐randomised trials as we were unable to determine whether a herd effect existed.

For the eight studies with non‐randomised designs, three studies were considered to have a high risk of bias due to potentially confounding factors (Gingiss 2006; Nathan 2012; Simons‐Morton 1988). For the remaining studies (n = 5) it was unclear whether confounders were adequately adjusted for.

Effects of interventions

See: Summary of findings for the main comparison

The majority of included trials reported significant improvements in at least one implementation outcome measure (Table 3). All primary implementation outcomes were significant in nine trials (Delk 2014; French 2004; Mathur 2016; Nathan 2012; Nathan 2016; Naylor 2006; Sallis 1997; Story 2000; Wolfenden 2017), as were the majority of outcomes reported across implementation measures in a further five trials (Gingiss 2006; Hoelscher 2010; Perry 1997; Saraf 2015; Whatley Blum 2007). In three trials there were no significant improvements on any primary implementation outcome (Alaimo 2015; McCormick 1995; Yoong 2016), and in six trials there was improvement in 50% or less of the implementation outcomes reported (Cunningham‐Sabo 2003; Heath 2002; Lytle 2006; Perry 2004; Sutherland 2017; Young 2008). Four trials did not report significance testing of between‐group comparisons of implementation outcomes (De Villiers 2015; Mobley 2012; Saunders 2006; Simons‐Morton 1988).
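The grouping used in this paragraph can be sketched as a simple classification of each trial by the share of its implementation outcomes that reached P < 0.05. The function name `classify` and the exact thresholds are assumptions based on our reading of the categories above. The counts are those shown in the P < 0.05 column of Table 3 for the first six trials, keyed by trial name as given in the table.

```python
from fractions import Fraction


def classify(significant, total):
    """Place a trial in one of the summary categories used in the text."""
    if significant is None or total is None:
        return "significance testing not reported"
    share = Fraction(significant, total)
    if share == 1:
        return "all outcomes significant"
    if share == 0:
        return "no outcomes significant"
    if share > Fraction(1, 2):
        return "majority of outcomes significant"
    return "50% or less of outcomes significant"


# (significant outcomes, total outcomes) per trial, from Table 3.
p_counts = {
    "SNAK": (0, 2),
    "Pathways": (1, 2),
    "HealthKick": (None, None),  # reported as "Not reported"
    "No trial name (physical activity)": (6, 6),
    "TACOS": (5, 5),
    "Texas Tobacco Prevention Initiative": (7, 10),
}

for trial, (sig, total) in p_counts.items():
    print(f"{trial}: {classify(sig, total)}")
```

Exact fractions are used rather than floating point so that a trial with exactly half of its outcomes significant, such as 1/2, falls reliably into the "50% or less" category.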

| Trial | Trial name | Targeted risk factor | Implementation strategies | Comparison | Primary implementation outcome and measures | Effect size | P < 0.05 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Alaimo 2015 | School Nutrition Advances Kids (SNAK) | Nutrition | Clinical practice guidelines, educational materials, educational outreach visits, external funding, local consensus processes, tailored interventions | Usual practice or waiting‐list control | Continuous: i) Nutrition policy score and ii) Nutrition education and/or practice score (2 measures) | Median (range) 0.65 (0.2 to 1.1) | 0/2 |
| Cunningham‐Sabo 2003 | Pathways | Nutrition<sup>c</sup> | Clinical practice guidelines, educational materials, educational meetings, educational outreach visits | Usual practice | Continuous: nutrient content of school meals, % of calories from fat at breakfast/lunch (2 measures) | Median (range) ‐3% (‐3.3% to ‐2.7%) | 1/2 |
| De Villiers 2015 | HealthKick | Nutrition<sup>c</sup> | Local opinion leaders, educational materials, educational outreach visits, educational meetings | Minimal support control | Dichotomous: % implementing a variety of policies and practices (3 measures) | Median (range) 25% (12.5% to 29.5%) | Not reported |
| Delk 2014 | No trial name | Physical activity | Local consensus process, educational meetings, clinical practice guidelines, educational outreach visits, tailored interventions, other | Different implementation strategy | Continuous: % of teachers that conducted activity breaks weekly (1 measure, 2 comparisons); Dichotomous: % implementing a variety of policies and practices (2 measures, 4 comparisons) | Continuous: median (range) 13.3% (11.1% to 15.4%); Dichotomous: median (range) 26.5% (19.4% to 31.9%) | 6/6 |
| French 2004 | Trying Alternative Cafeteria Options in Schools (TACOS) | Nutrition | Local consensus processes, tailored intervention, educational meetings, pay for performance | Usual practice or waiting‐list control | Continuous: % of program implementation (5 measures) | Median (range) 33% (11% to 41%) | 5/5 |
| Gingiss 2006 | Texas Tobacco Prevention Initiative | Tobacco | Educational meetings, educational outreach visits, external funding, local consensus processes | Usual practice | Dichotomous: % implementing a variety of policies and practices (10 measures) | Median (range) 18.5% (‐1% to 59%) | 7/10 |